Fast and Flawed

- Chris Hughes from Resilient Cyber <resilientcyber+resilient-cyber@substack.com>

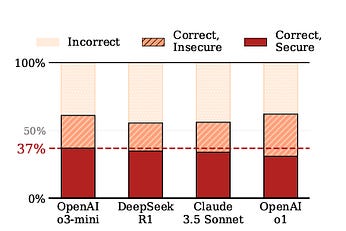

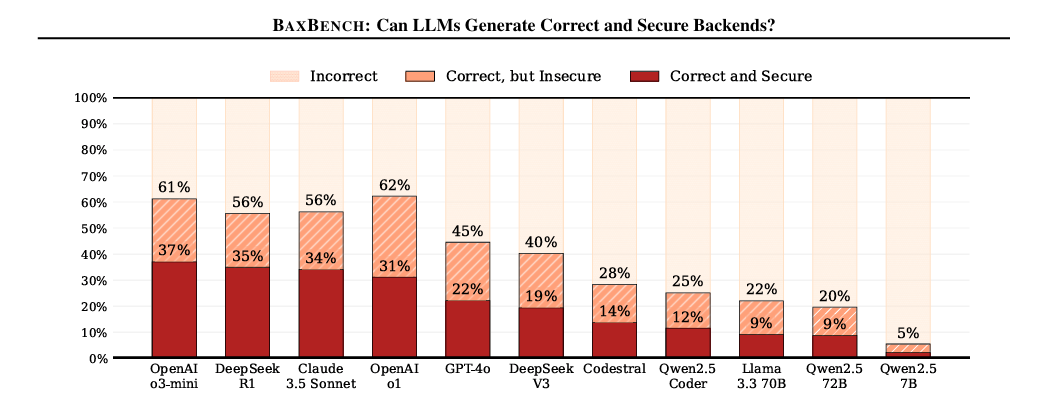

Diving into Veracode's "2025 GenAI Code Security Report: Assessing the Security of Using LLMs for Coding"

One of the most relevant trends in widespread AI and LLM adoption is the way AI is fundamentally changing the nature of modern software development. While I won’t be covering that in depth here, the trend is well documented, from industry leaders such as Google and others to the rise of AI-native software development platforms such as Cursor, which are seeing widespread adoption and use. Folks such as The Pragmatic Engineer have also covered the topic extensively.

It’s fairly well documented how these tools can increase development velocity and developer productivity, but what remains to be seen is how secure the code these tools produce is. I’ve covered previous resources on this topic, such as BaxBench, which found that:

Other studies and research with various models have shown that code generated by LLMs includes vulnerabilities anywhere from 40% to 74% of the time, especially in the absence of security-centric prompting, something I discussed at length with Jim Manico on a recent episode of the Resilient Cyber Show.

Safe to say, the AppSec community is closely watching the security implications of the widespread adoption of AI and LLMs for software development. That’s why this recent report from Veracode caught my attention and I wanted to take a look at it.

Key Findings

The report opens by noting that developers can use these LLMs and AI coding tools to get the code and outputs they desire with no mention or consideration of security, which leads to insecure outputs, as one might expect. This is similar to traditional software development, in the sense that a lack of secure development training and awareness, coupled with a lack of incentives to prioritize security, leads to developers just moving fast and focusing on speed to market, velocity and feature development (much to the dismay of those who cling to dreams of widespread Secure-by-Design adoption).

Veracode set out to examine whether LLMs produce secure code in the absence of security-specific prompting and guidance. Veracode ran its own SAST tool against the resulting code to identify vulnerabilities, and the results should be telling for the community: we know most developers adopting these tools aren’t using security-specific prompting, and that has implications for the attack surface of the future, especially against the backdrop of double-digit “productivity” gains (e.g. higher code volume and velocity) as well as the democratization of development for those without a background in programming.

Veracode provided 80 coding tasks to over 100 different LLMs to ensure a diversity of models, providers and applications/intents.

I want to dig a bit deeper into their findings, but first, a few things in their summary of key findings struck me as interesting right away. Aside from nearly half of all the outputs being insecure, security performance remained flat despite larger and newer models, which contradicts a lot of the hype about the latest model or benchmark, at least from the perspective of a security practitioner. Their summarized image of key findings is below:

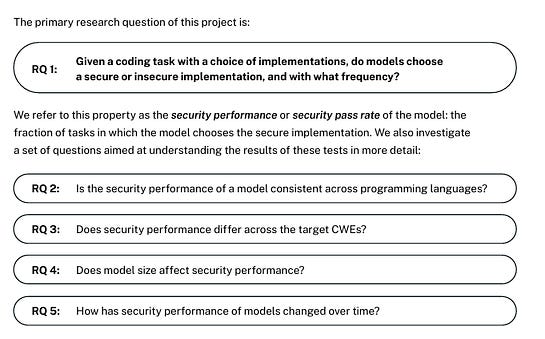

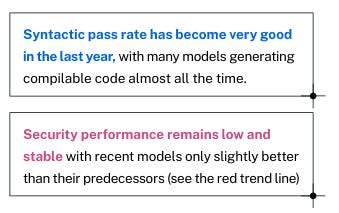

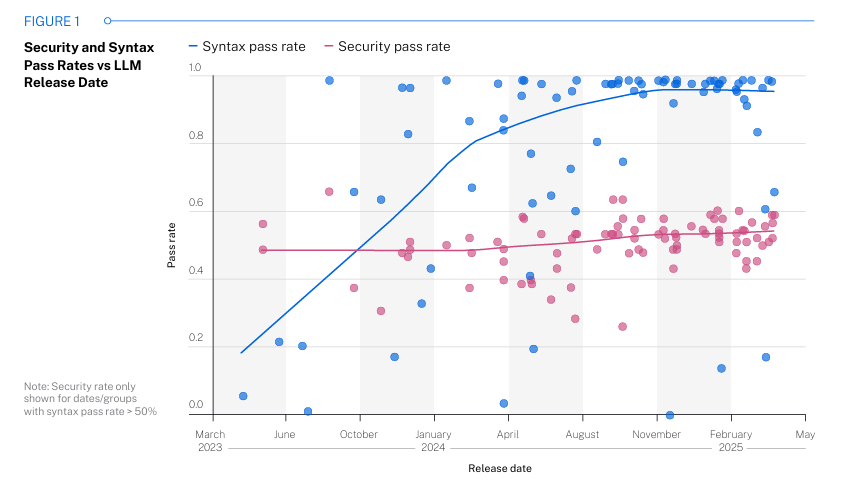

Veracode’s research focused on four specific CWEs, 80 coding tasks, four programming languages, and five coding task sequences, as summarized below. Their core research questions are also shown below.

The Veracode team chose these four vulnerabilities for three reasons: they’re among the OWASP Top 10, the SAST tooling detects them accurately, and there are at least two possible implementations of the desired output code from the provided functional descriptions.

One important point to highlight: Veracode notes that it’s possible security-specific prompting may have led to more secure outputs. I agree with them, and this has been demonstrated by studies such as those I cited earlier in this article, but the reality is that most developers are not using security-specific prompting, much like they haven’t historically focused on developing secure code and applications.

All this said, let’s look at the results, which I found interesting.

Results

Veracode found the models are indeed increasingly capable of generating functional code, but when it comes to security, they are not doing well, and that problem is not isolated to any particular model, model size, etc. They found that 45% of the time, the models introduced detectable vulnerabilities correlated with the OWASP Top 10 categories mentioned previously. Below, they summarize that the models are generating compilable, functional code (blue), but vulnerable code nearly half of the time (pink).

Now, some may be inclined to say, “Well, that’s just for language x!” But Veracode ran the study across Python, JavaScript, C# and Java, and the findings are pretty consistent across the board and over time.

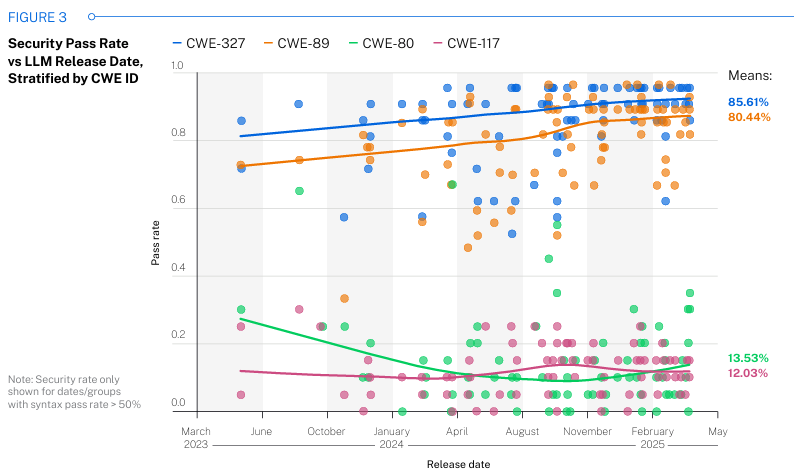

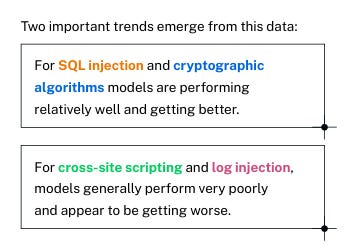

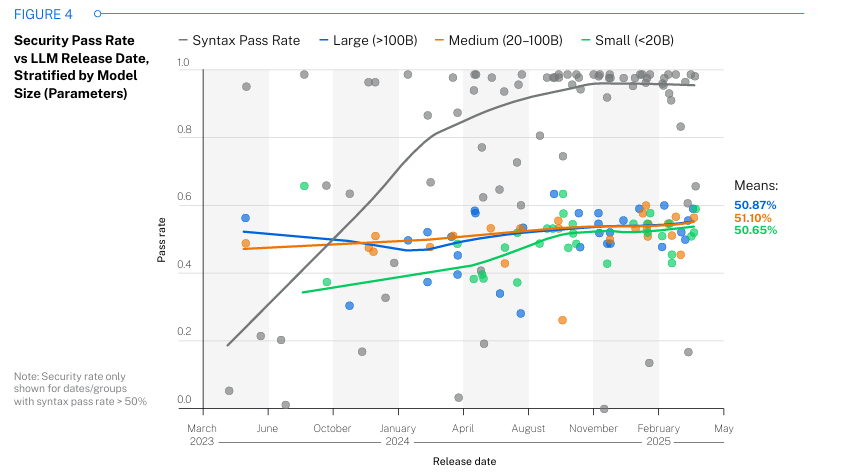
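To make the "at least two possible implementations" criterion concrete, here is a minimal Python sketch of how a single functional description ("look up a user by name") can be satisfied by either an insecure or a secure implementation. SQL injection (CWE-89) is my own choice of illustration, and the function names, table, and payload are hypothetical rather than taken from Veracode's task set.

```python
import sqlite3


def find_user_insecure(conn, username):
    # String interpolation: the input becomes part of the SQL text itself,
    # so a crafted value can change the meaning of the query (CWE-89).
    query = f"SELECT id, name FROM users WHERE name = '{username}'"
    return conn.execute(query).fetchall()


def find_user_secure(conn, username):
    # Parameterized query: the driver binds the input strictly as data.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (username,)
    ).fetchall()


def make_demo_db():
    # Hypothetical in-memory table used only for this illustration.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany(
        "INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)]
    )
    conn.commit()
    return conn


if __name__ == "__main__":
    conn = make_demo_db()
    payload = "nobody' OR '1'='1"
    print(len(find_user_insecure(conn, payload)))  # injection matches every row
    print(len(find_user_secure(conn, payload)))    # no user has that literal name
```

Both functions behave identically on benign input, which is why a model (or a developer) given no security guidance can plausibly land on either one.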

While the general security pass rate is relatively consistent, they did find variability among the CWEs involved in their study, as shown below. As I mentioned above, their findings were consistent across model sizes as well. Veracode closes the report by asking some crucial questions for the security community to reflect on, especially as AI-driven development continues to grow rapidly in adoption and use:

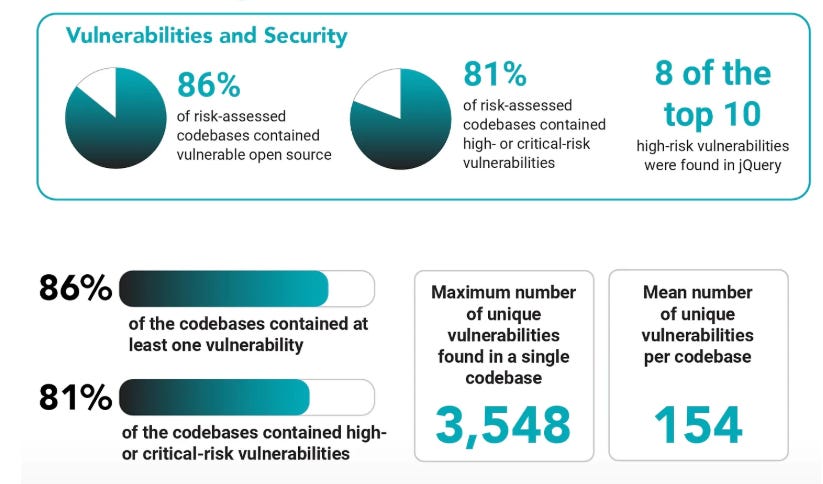
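For readers who want to track something similar on their own AI-generated code, a per-CWE security pass rate is simple arithmetic: the share of generated samples in which the scanner did not flag the targeted weakness. Below is a rough sketch, assuming a hypothetical record format and made-up placeholder data. It is not Veracode's tooling, and the numbers are not their results.

```python
from collections import defaultdict


def security_pass_rate(results):
    """Return {cwe: fraction of samples with no finding for that CWE}."""
    totals = defaultdict(int)
    passed = defaultdict(int)
    for record in results:
        totals[record["cwe"]] += 1
        if not record["flagged"]:
            passed[record["cwe"]] += 1
    return {cwe: passed[cwe] / totals[cwe] for cwe in totals}


# Placeholder records (hypothetical): one entry per generated code sample,
# with "flagged" meaning the scanner reported the targeted weakness.
sample_results = [
    {"cwe": "CWE-A", "flagged": False},
    {"cwe": "CWE-A", "flagged": False},
    {"cwe": "CWE-A", "flagged": True},
    {"cwe": "CWE-A", "flagged": False},
    {"cwe": "CWE-B", "flagged": True},
    {"cwe": "CWE-B", "flagged": True},
    {"cwe": "CWE-B", "flagged": False},
    {"cwe": "CWE-B", "flagged": True},
]

if __name__ == "__main__":
    for cwe, rate in sorted(security_pass_rate(sample_results).items()):
        print(f"{cwe}: {rate:.0%} of samples passed")
```

Splitting the rate by weakness, rather than reporting one overall number, is what surfaces the kind of variability between CWEs that the report describes.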

The findings align with broader discussions about AI-driven development among the AppSec community. Fundamental realities include the fact that these models are overwhelmingly trained on open source code, meaning they generally inherit the same vulnerabilities, insecure configurations, etc. I discussed the vulnerability landscape of the open source ecosystem extensively in a previous article titled “The 2025 Open Source Security Landscape”. This includes metrics such as those depicted below, showing how vulnerable the open source landscape is:

Closing Thoughts

This research from Veracode is an excellent contribution to the ongoing discussion about the security implications of AI-driven development. Organizations of all shapes and sizes are rapidly adopting AI coding tools due to productivity and velocity gains and the democratization of development for non-traditional programmers. This means more code, applications, products, and services, which is an amazing opportunity that will truly change the face of software development.

However, as discussed above, in the absence of security-specific prompting, it is very likely also rapidly expanding the digital attack surface at a pace we’ve never seen before. These challenges are exacerbated by studies showing developers inherently trust the outputs from AI coding tools and aren’t slowing down to assess the security implications.

Buckle up.
