Resilient Cyber Newsletter #34

- Chris Hughes from Resilient Cyber <resilientcyber+resilient-cyber@substack.com>


2024 Cyber Market Retrospective, State of AI in the Cloud, GenAI Data Leaks, New Frontiers in Vulnerability Management & Applying GenAI for CVE Analysis at Scale

Welcome!

Welcome to another issue of the Resilient Cyber Newsletter. There is still a TON of activity and resources to cover, from U.S. administration changes impacting tech and cyber, to the DeepSeek ramifications that continue to rattle AI, to the supply chain concerns still lurking. So, let’s get to it!

Interested in sponsoring an issue of Resilient Cyber? Sponsorship reaches over 16,000 subscribers, including Developers, Engineers, Architects, CISOs/Security Leaders, and Business Executives. Reach out below!

Cyber Leadership & Market Dynamics

2024 Cyber Market Analysis Retrospective

In this episode, we sit down with CISO turned Cybersecurity Economist Mike Privette of Return on Security. We look at Mike's recent 2024 State of the Cyber Market analysis report, including AI's role, funding shifts, and the investment trends shaping the cybersecurity industry going into 2025. We spoke about:

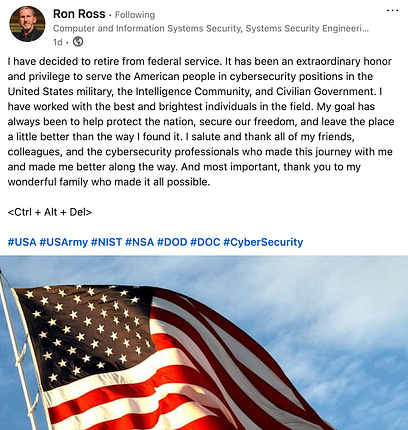

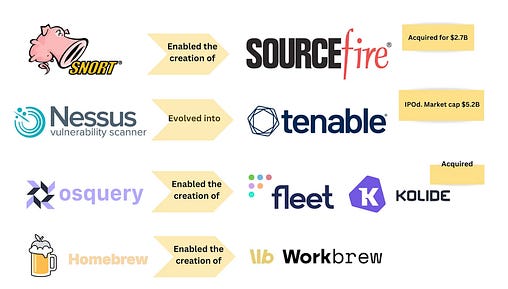

21% of CISOs Pressured NOT to Report Compliance Issues

A recent Splunk survey of 600 CISOs revealed some concerning trends. Among other findings, one in five CISOs (21%) reported being pressured by other executives or board members not to report compliance issues at their companies. This is of course unethical and potentially legally risky as well, especially as we see the rise of legal liability for CISOs, who can find themselves in the legal crosshairs depending on the nature of an incident, the state of an organization's security program (or lack thereof), and more.

It puts CISOs in a challenging position: on one hand they’re trying to shake the reputation of being the “office of no” and a blocker for the business, but on the other they have a duty and ethical responsibility to raise compliance and security concerns when they see them.

Tracking Federal CIOs and CISOs in Trump’s Second Term

If you’re like me and spend a fair bit of time focusing on the Federal and Defense communities, then it is helpful to understand the leadership landscape. This resource from GovCIO provides an update on the CIOs and CISOs in Trump’s second term across various U.S. Federal agencies.

The U.S. Loses a Legend in Cybersecurity Leadership

If you’ve been anywhere in U.S. Federal IT/Cyber policy, you have likely run into the name Ron Ross. Ron has spent literally decades driving the U.S.’s approach to cybersecurity at organizations such as NIST. He has been involved in critical publications such as NIST 800-37, 800-53, 800-171, and countless others. Ron took the time to share his retirement decision on LinkedIn.

Federal Cyber “Experts” MIA

Ed Amaraso shared the above cartoons. They are a joke pointing out concerns some have about the current lack of cybersecurity expertise within the federal space. A successor to Jen Easterly at CISA hasn’t been named yet, and many have now raised concerns about some of the DOGE activities, those involved, and the potential cybersecurity risks.

The Biggest Breach of US Government Data is Underway

TechCrunch and others have begun running partisan pieces raising concerns about Elon Musk and DOGE's activities regarding US government systems and data. This has been a politically charged topic over the past several weeks as DOGE has revealed incredibly high levels of spending on questionable activities across several agencies, most notably USAID. This has now led to many stories about how the activities are being carried out, those involved, the potential lack of adherence to Federal cybersecurity policies, data privacy, and more.

Much like everything else in our society, everyone is taking one side or the other. I personally do think we should follow proper access policies and requirements, but we should also have transparency around spending, push for efficiency, and most certainly eliminate fraud, waste, and abuse. It’s a real shame it took this sort of situation to get Government transparency around spending and to root out questionable spending of tax dollars. I hope we can collectively agree that two things can be true at the same time: we can follow proper security processes and also not bury or turn a blind eye to reckless spending and even potential fraud.

Will the Next Wave of Cybersecurity Success Stories Originate in Open Source?

Open Source 🤝 Cybersecurity

The intersection between open source and cyber is an interesting one. While there are many great open-source security tools, examples of companies taking the open-source path and building industry-leading companies/platforms aren’t as plentiful. This is a good read from Kane Narraway on some of the nuances, such as:

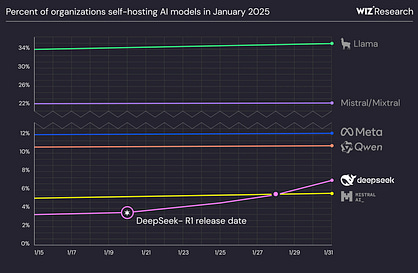

I’ll say that in heavily regulated environments such as DoD, organizations have more success with the former than the latter, at least compared to the commercial industry, due to regulatory hurdles with cloud authorizations, trust around self-hosting, and other unique factors.

Whether we will see other breakaway examples emerge from open source to build industry-leading platforms and companies remains to be seen, but the intersections between cyber and open source aren’t going away anytime soon.

AI

State of AI in the Cloud 2024

Wiz released their “State of AI in the Cloud” report, and it has some interesting findings:

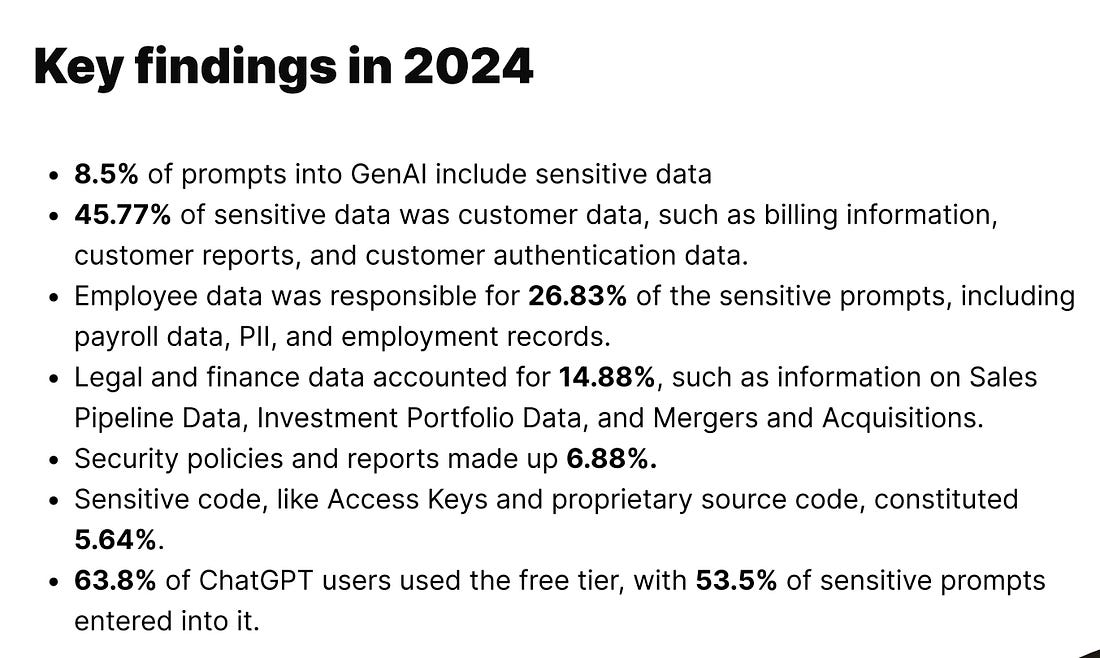

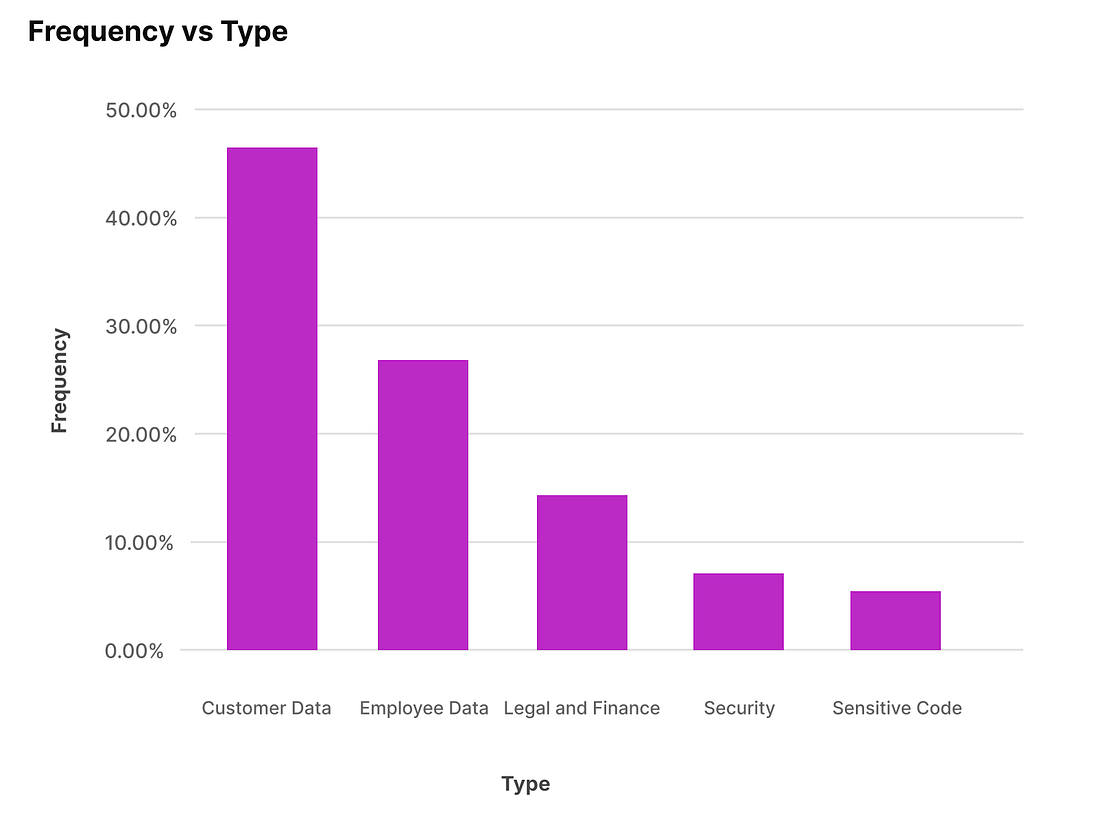

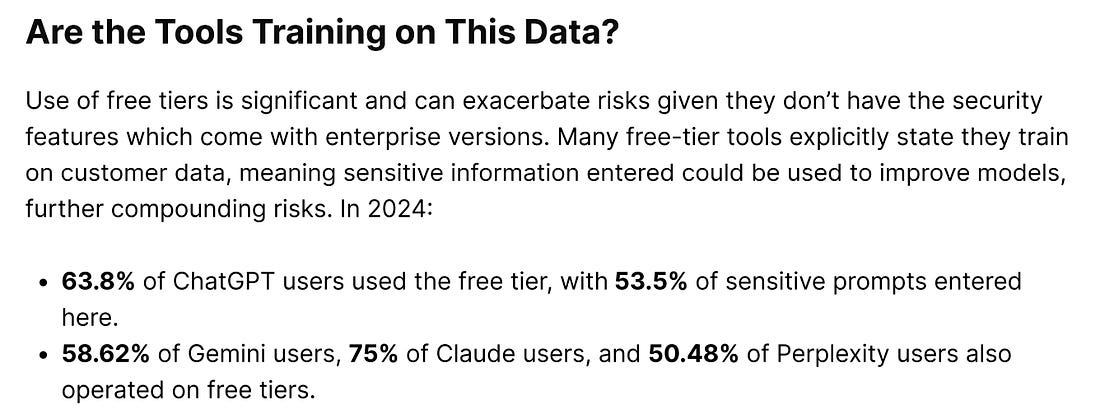

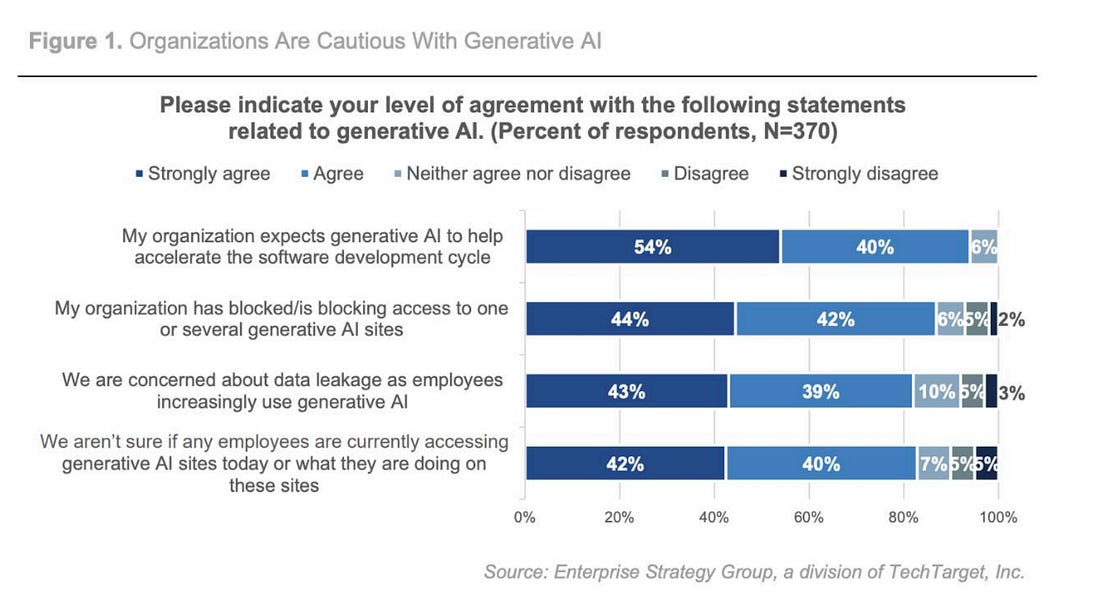

It is unfortunate in some ways to see how rapid DeepSeek adoption is, despite many folks raising security concerns about failed security tests, bias, and more. But, as we all know, money talks, and folks are looking for cost-optimized computing and consumption, where DeepSeek has indeed innovated. At this point, U.S. alternatives must continue to innovate to wrest back market share from the China-based DeepSeek model.

I also think we’re seeing the rise of self-hosting as organizations consider this option due to concerns about their data and how a provider may use it. Self-hosting is a potentially more secure alternative that gives organizations control while still letting them tap into the promise of GenAI and LLMs.

White House Seeks Public Input on AI Policy

The White House recently released a Request for Information (RFI) seeking public input through March 15th on the U.S.’s approach to AI. As you’ll recall, I recently shared how President Trump revoked a previous AI EO from President Biden. Trump then released a new AI EO focused on ensuring American dominance in AI (most notably against nations such as China). Below is a quote from Lynne Parker, the Principal Deputy Director of the Office of Science and Technology Policy (OSTP), regarding the RFI. The inputs will be used to help craft the U.S.’s AI Action Plan.

Nearly 10% of Employee GenAI Prompts Include Sensitive Data

A recent report from AI security firm Harmonic Security found that nearly 10% of all employee prompts include sensitive data. Key findings are summarized below:

This highlights that sensitive data, including access keys, source code, and more, is being provided to external vendors/services and their associated models. Historically, organizations have struggled with SaaS governance and the services they use.
With the introduction of GenAI, those SaaS services are using models that may be siphoning up sensitive data via voluntary prompts from employees. The report highlighted that organizations are developing governance for GenAI usage but often lack visibility into what data employees enter into the GenAI tools.

The report goes further, providing insights into the frequency of sensitive data leakage and its type. From the image below, it is clear that customer data is the most frequently exposed category, which opens the door to both legal and reputational risks. Additionally, we can see sensitive data types such as legal/finance, security, and source code.

When we think about data leakage, some may ask, “So what?” in terms of whether the vendor actually trains on the data disclosed. As it turns out, over half of all usage involves free-tier usage, which doesn’t include some of the fundamental security features that paid-tier services do.

The report also highlighted organizations’ cautions with GenAI and survey insights seen below:

What I found interesting is that organizations overwhelmingly (94%) anticipate GenAI accelerating the SDLC. From the AppSec perspective, we can expect this “productivity” boom to be associated with exponential YoY growth in the vulnerabilities organizations need to wrestle with, as the GenAI coding tools and copilots do not produce perfect, infallible code.

AppSec, Vulnerability Management, and Software Supply Chain

Tackling Vulnerabilities, Regulatory Shifts, and Root Cause Analysis

I recently had a chance to join Phoenix Security for a webinar on all things AppSec. We chatted about:

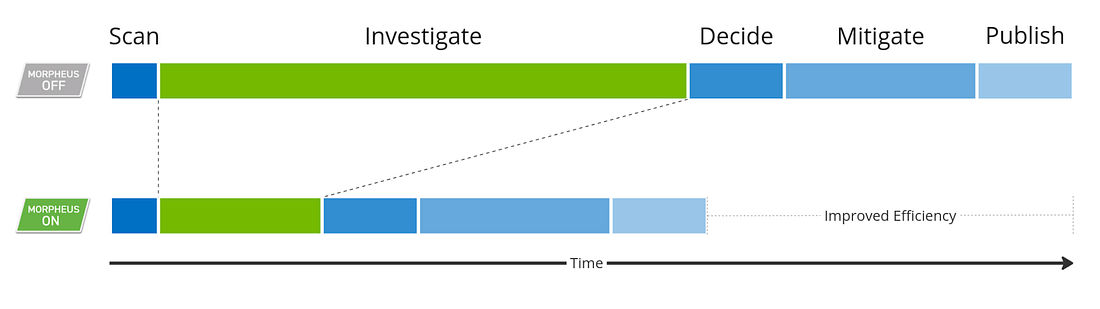

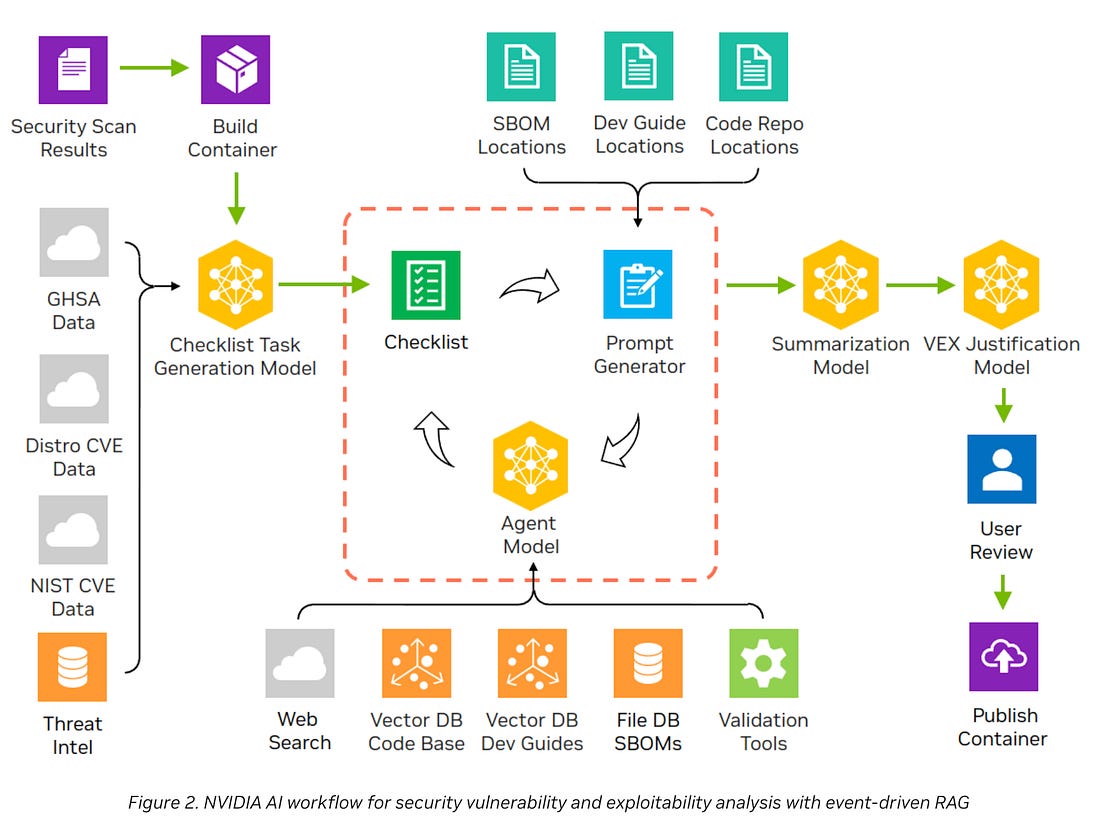

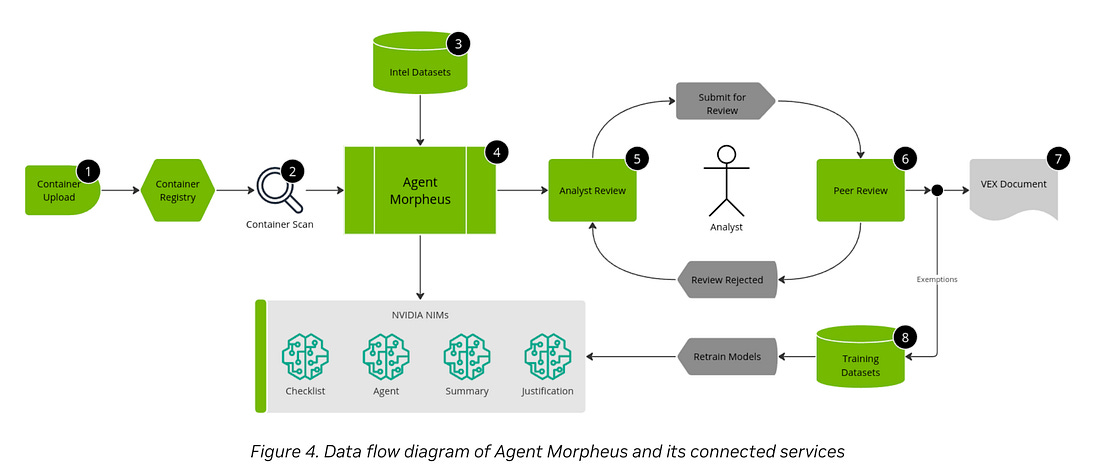

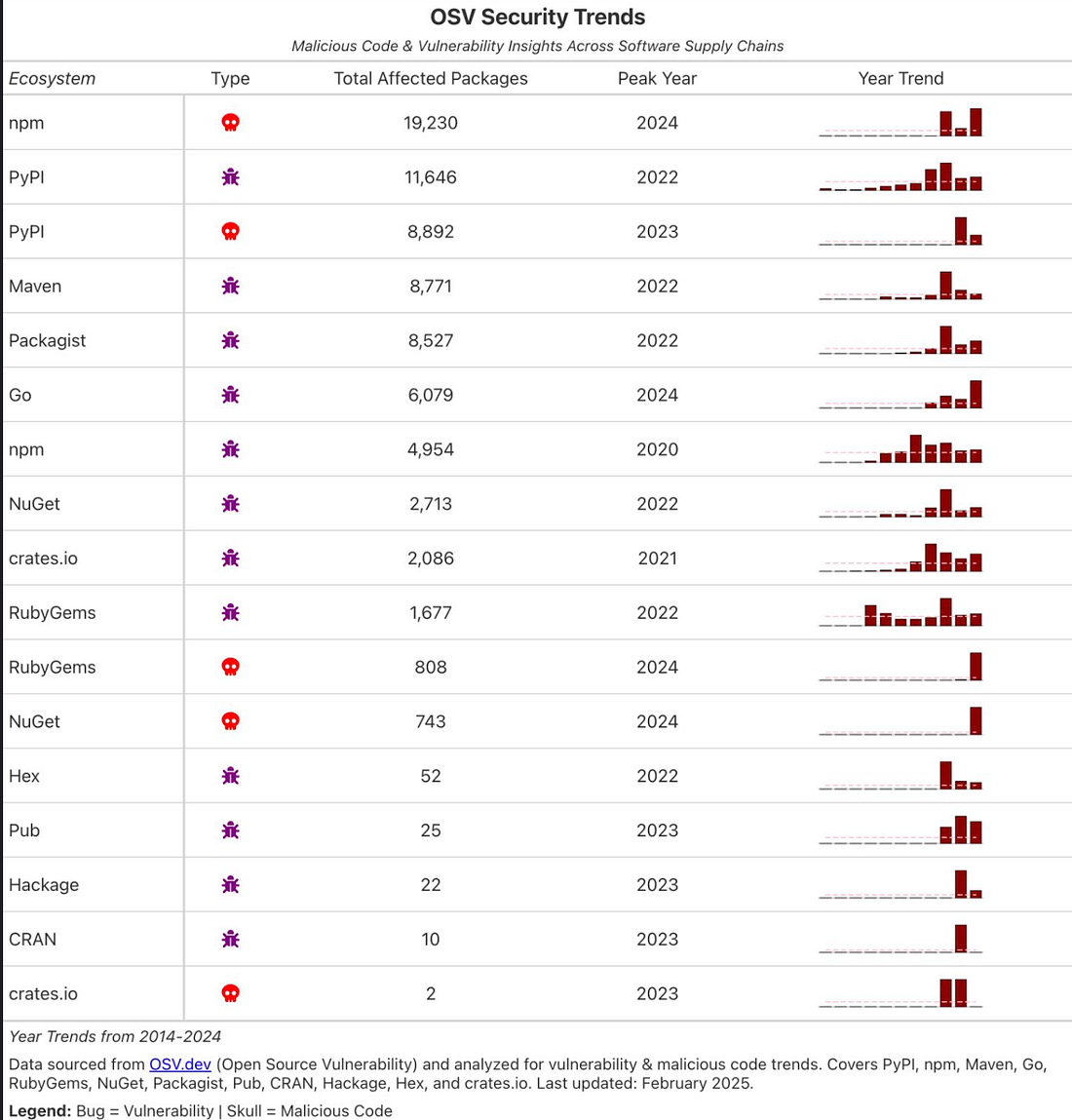

Applying GenAI for CVE Analysis at an Enterprise Scale

We continue to see the exploration of GenAI and LLMs for security use cases. A recent example is this technical blog from the NVIDIA team, which demonstrates using GenAI for CVE analysis in an enterprise. They demonstrate a GenAI application they named Agent Morpheus to streamline traditional CVE analysis activities.

The agent performs a series of activities, including determining if a vulnerability exists, generating a checklist of tasks to investigate the CVE, and then determining if it is exploitable to minimize noise. What is promising is their use of agentic AI to run through the checklist of activities without the need for direct human interaction or prompting. A human is only required when the agent reaches a point where a decision is needed. The full workflow of activities is captured in the image below:

Another very cool aspect is that the workflow outputs a VEX document (Vulnerability eXploitability Exchange, for those unfamiliar with the acronym). Software vendors can share this with downstream consumers to communicate the exploitability of vulnerabilities within components of an application or product. This occurs in a feedback loop, where outputs are compiled into a new training dataset to retrain models and constantly improve the output.

This is an excellent example of applying GenAI to existing cybersecurity challenges. It is easy to see how this can improve vulnerability management workflows and activities and free humans to focus on more critical tasks than analyzing vulnerabilities and their exploitability.

We need CVSS, warts and all

If you spend any time digging into VulnMgt, you likely know what the Common Vulnerability Scoring System (CVSS) is and some of its challenges and shortcomings.
To summarize, it is the predominant vulnerability severity scoring system used across the industry, and CVSS scores are assigned to CVEs in databases such as the NIST National Vulnerability Database (NVD). The problem is that it isn’t perfect and doesn’t account for factors such as known exploitation or exploitability, among others. This is a good article with industry leaders weighing in on CVSS and some of its drawbacks, alternatives such as EPSS, and the fact that, for better or worse, CVSS is likely here to stay, as it has been for ~20 years. For those interested in a deep dive into CVSS and EPSS, I have articles on each:

GitHub Advanced Security Integrates Endor Labs SCA

The number of CVEs published over the last decade has grown nearly 500%. This leads to massive vulnerability backlogs as organizations drown in findings, not knowing what to prioritize or why. It also adds massive friction to the Developer workflow as Security continues to beat our peers over the head with findings, often without context or value.

I have been lucky to get to collaborate with innovators breaking this legacy mold, such as Software Composition Analysis (SCA) firm Endor Labs, where I serve as the Chief Security Advisor. We recently announced that Endor Labs will now be integrated with GitHub Advanced Security and Dependabot, letting Developers tackle findings right in their native workflows on the leading software development platform. This couples GitHub's massive global reach and features with Endor Labs' unique capabilities to provide insight into vulnerabilities, including context such as known exploitation, exploitability, and reachability analysis, including for transitive dependencies.

Compromised Open Source Packages Continue

Caleb Kinney shared a great summary of the continued attacks and compromises of the open source ecosystem, leveraging insights from OSV.dev.
He showed that in 2024 alone, 8,000 npm packages were impacted by malicious code, but other package managers and language ecosystems continue to be prime targets as well. While it is easy to be lulled by these figures and their continual climb, it is only a matter of time until another widely used package is compromised and wreaks havoc across the software ecosystem.
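
For readers who want to check their own dependencies against OSV.dev, here is a minimal sketch of what a lookup could look like. It is not how the summary above was produced. It assumes the public OSV query endpoint (`POST https://api.osv.dev/v1/query`) and assumes that malicious-package advisories carry IDs beginning with `MAL-`; the helper names `build_query`, `malicious_ids`, and `query_osv` are made up for illustration.

```python
import json
import urllib.request

# Assumption: the public OSV.dev query endpoint.
OSV_QUERY_URL = "https://api.osv.dev/v1/query"


def build_query(name, ecosystem="npm", version=None):
    """Build the JSON payload for a single-package OSV query."""
    payload = {"package": {"name": name, "ecosystem": ecosystem}}
    if version is not None:
        payload["version"] = version
    return payload


def malicious_ids(osv_response):
    """Return advisory IDs that look like malicious-package reports.

    Assumption: such advisories use the "MAL-" ID prefix.
    """
    return [
        vuln["id"]
        for vuln in osv_response.get("vulns", [])
        if vuln.get("id", "").startswith("MAL-")
    ]


def query_osv(name, ecosystem="npm", version=None, timeout=10):
    """Send the query to OSV.dev and return the parsed JSON response."""
    body = json.dumps(build_query(name, ecosystem, version)).encode("utf-8")
    request = urllib.request.Request(
        OSV_QUERY_URL,
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

Calling `malicious_ids(query_osv("some-package"))` for each entry in a lockfile would flag dependencies with a known malicious-code advisory. The payload building and filtering are kept separate from the network call so they can be checked offline.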
