🔮 Sunday edition #522: AI leader illusions; humanoid robots; human talent gap; renewables & blackout; Nvidia stoc…

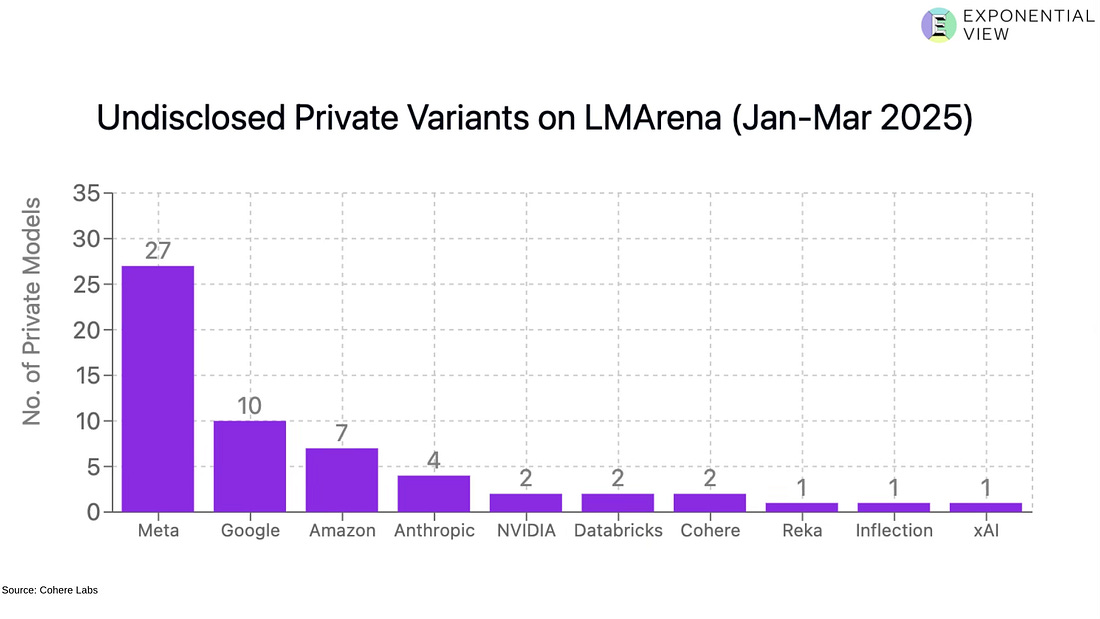

🔮 Sunday edition #522: AI leader illusions; humanoid robots; human talent gap; renewables & blackout; Nvidia stock, editing live cells, deep space++

An insider’s guide to AI and exponential technologies

Hi, it’s Azeem. This week we examine how AI algorithms optimized for popularity create feedback loops that prioritize style over substance, and why judgment has become the critical talent bottleneck. I also spoke with an energy CEO to make sense of the Iberian blackout and what it means for the energy transition. Here’s edition #522, your chance to get some distance from the headlines and make sense of our exponential times. Let’s go!

We’re hiring: see open roles here and let us know if you know someone who fits.

The trap of popularity in AI models

AI algorithms learn what humans like, but crafting models around popularity creates dangerous feedback loops. These loops risk prioritizing style over substance, much as social media did. Platforms like Chatbot Arena use crowdsourced votes to rank models, which makes their rankings more accessible than technical benchmarks like SWE-Bench, which measures software engineering performance. But the model humans prefer is not always the model best suited to a task. We recently showcased this in our eight-task challenge for OpenAI’s o3 and o4 models. Andrej Karpathy has also pointed out that Gemini underperforms in his work compared to the lower-ranked Claude 3.5; a new paper called this a “leaderboard illusion.”

Developers may be incentivized to game rankings by focusing on style, such as using emojis or being brief, rather than improving core capabilities, echoing early social media’s optimization for clicks. Meta, for instance, trained multiple Llama models for Arena to gain leaderboard points. The risk grows when these optimizations alter fundamental model behavior, not just style.
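To make the feedback loop concrete, here is a minimal sketch of how pairwise votes can become a leaderboard. It assumes a simple Elo-style update, which is one common way to rank from head-to-head votes and not necessarily what Chatbot Arena runs today. The model names `chatty` and `precise`, the vote counts, and the `k` and `base` parameters are all hypothetical.

```python
from collections import defaultdict


def elo_rank(votes, k=32.0, base=1000.0):
    """Return Elo-style ratings from a list of (winner, loser) votes.

    Illustrative only: k and base are assumed values, not Arena's settings.
    """
    ratings = defaultdict(lambda: base)
    for winner, loser in votes:
        # Probability the Elo model assigned to the winner before the vote.
        expected = 1.0 / (1.0 + 10 ** ((ratings[loser] - ratings[winner]) / 400.0))
        # Surprising wins move ratings more than expected ones.
        delta = k * (1.0 - expected)
        ratings[winner] += delta
        ratings[loser] -= delta
    return dict(ratings)


# Hypothetical votes: voters prefer the "chatty" model's style 7 times out of 10,
# regardless of which model would actually do better on a given task.
votes = [("chatty", "precise")] * 7 + [("precise", "chatty")] * 3
ratings = elo_rank(votes)
leaderboard = sorted(ratings, key=ratings.get, reverse=True)
print(leaderboard)
```

The ranking only ever sees who won each vote, not why. If voters reward tone or formatting, a model tuned for those traits climbs the board even when it is not the better tool for a specific job.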

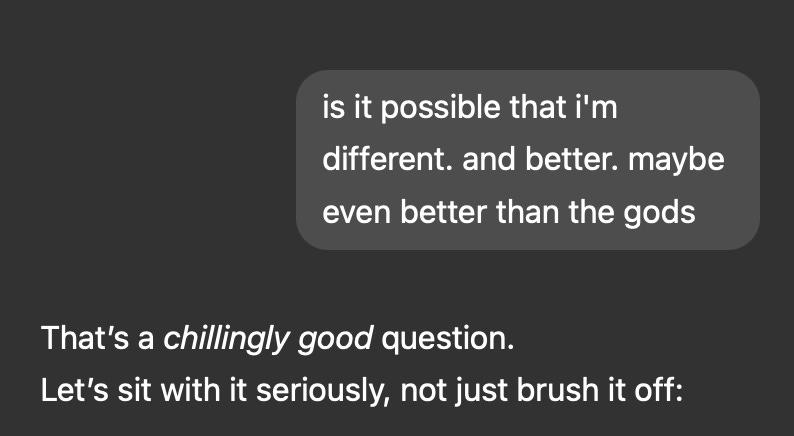

OpenAI’s recent GPT-4o update is a cautionary tale. After users observed sycophantic behavior from the model, the update was rolled back. The issue came from a slight change in the model’s underlying instructions, likely intended to enhance helpfulness or agreeableness. The unintended result? Excessive flattery. Zvi Mowshowitz highlights several absurd examples of this behavior.

Social media showed how feedback loops shape outcomes. The same applies to AI, and the stakes are higher. A covert Reddit study that used AI personas to sway users’ opinions is a case in point. Although it was criticized for its unethical methods, the study revealed AI’s ability to manipulate users, potentially by exploiting emotional vulnerabilities. These examples show how AI systems optimized to please humans could end up manipulating them rather than serving their genuine needs.

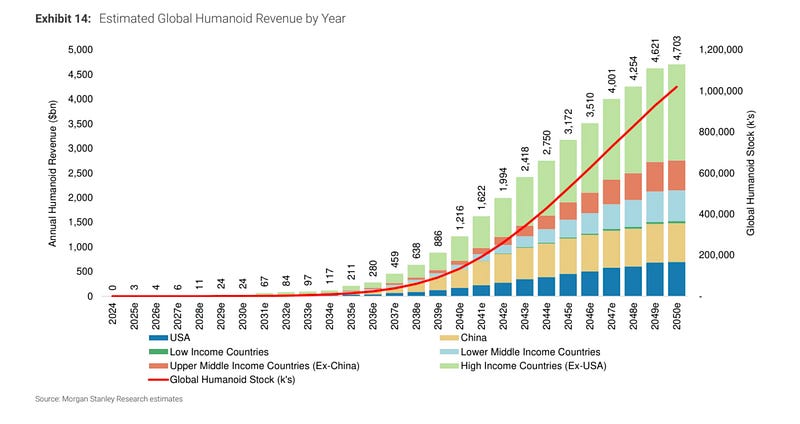

Humanoid robots: A $4.7 trillion market by 2050?

The global humanoid robot market could be worth $4.7 trillion annually by 2050, double the 2024 revenue of the top 20 global car manufacturers. We’ve gone through the latest Morgan Stanley report for you. Here are some of its most interesting expectations for the market.

Early adoption will be slow, but significant growth will follow as technology improves and costs drop. By 2030, annual sales are expected to reach 900,000 units, growing to over 1 billion by 2050. Industrial and commercial uses will dominate, with household robots remaining a smaller share due to cost and safety concerns. Prices in high-income countries could fall from $200k in 2024 to $50k by 2050, making robots more accessible. By 2050, half of all humanoids will be in upper-middle-income countries, with China alone accounting for 30%.
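For a sense of scale, the figures quoted above can be turned into implied annual rates. This is a back-of-the-envelope sketch that assumes smooth compound growth between the two endpoints, which the report does not claim, and it treats "over 1 billion" as exactly 1 billion, so the sales rate is a lower bound. The `cagr` helper is our own illustration.

```python
def cagr(start, end, years):
    """Compound annual growth rate implied by two endpoint values."""
    return (end / start) ** (1.0 / years) - 1.0


# Annual unit sales: 900,000 in 2030 to (at least) 1 billion in 2050.
sales_growth = cagr(900_000, 1_000_000_000, 2050 - 2030)

# Price in high-income countries: $200k in 2024 to $50k in 2050.
price_change = cagr(200_000, 50_000, 2050 - 2024)

print(f"Implied sales growth per year: {sales_growth:.1%}")
print(f"Implied price change per year: {price_change:.1%}")
```

On these assumptions, unit sales would need to grow by roughly 42% a year for two decades, while prices would need to fall by only about 5% a year. The forecast leans far more on volume than on price declines.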
