Resilient Cyber Newsletter #60

- Chris Hughes from Resilient Cyber <resilientcyber+resilient-cyber@substack.com>

State of Security Vendors, AI Eats VC, FedRAMP Doubles Down, DARPA AIxCC, AgentFlayer, Securing Agentic AI & CVE Transparency Challenges

Welcome!

Welcome to the post-Black Hat issue of the Resilient Cyber Newsletter. Coming out of Hacker Summer Camp week, it feels like everyone is trying to catch their breath, dig out of their inbox, and get back into a flow.

I’ve got a lot of great resources to share this week, including a Black Hat Security Vendor Breakdown, AI’s impact on VC, discussions on securing agentic AI, and a look into transparency challenges with CVEs.

So, here we go!

Interested in sponsoring an issue of Resilient Cyber? This includes reaching over 45,000 subscribers, ranging from Developers, Engineers, and Architects to CISOs/Security Leaders and Business Executives. Reach out below!

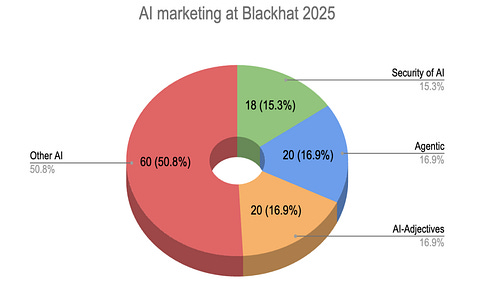

Cyber Leadership & Market Dynamics

The State of Security Vendors - Black Hat 2025

If you’re like me, you unfortunately (or fortunately) didn’t make it out to Black Hat/Hacker Summer Camp this year but are still looking to keep up with how it went, the key takeaways, themes, and so on. Luckily, industry leader Andy Ellis put together a concise summary:

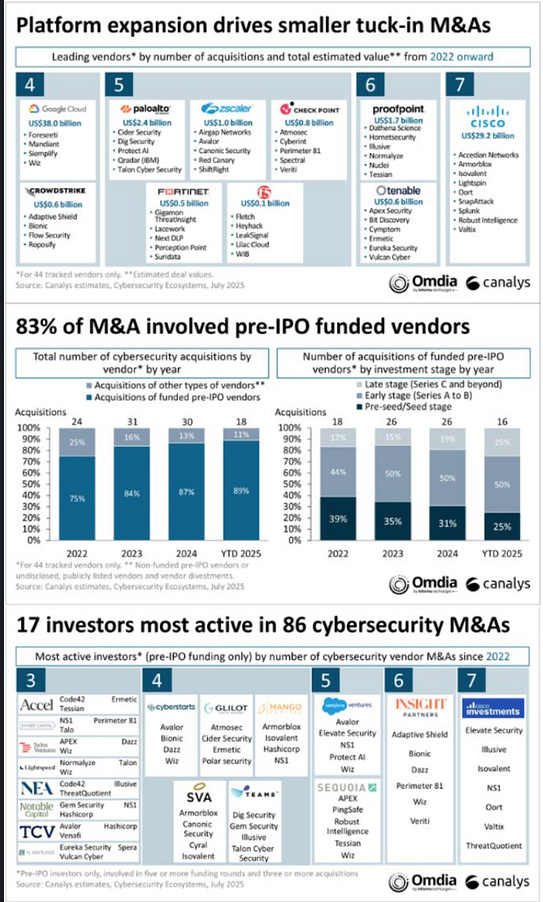

The report is full of these insights and much more, and I personally appreciate Andy putting this together for the community, especially those of us who couldn’t make it to the event this year.

Platform Expansion via M&A

We hear a ton about the push for “platformization”, or consolidation, as large vendors such as Palo Alto Networks (PANW) make the case for Platforms vs. Best of Breed, but seeing metrics and drivers is helpful. Matthew Ball of Canalys recently shared some of those insights, showing how platform expansion is driving M&A and how the majority of M&A involves pre-IPO-funded companies, with fewer than 20 firms being by far the most active acquirers.

He notes that they analyzed 104 acquisitions by 44 acquirers from 2022 to 2025 so far. The most active acquirers include some familiar faces, such as Cisco, Palo Alto Networks, Check Point, Proofpoint, Zscaler, CrowdStrike, and others. If you read the recent piece on industry consolidation I shared from my friend Ross Haleliuk, you’ll also recognize many of these vendors and trends. These 104 acquisitions totaled $154 billion.

Matthew provides rich analysis in his post I linked to, so I suggest going to read it, but some key takeaways:

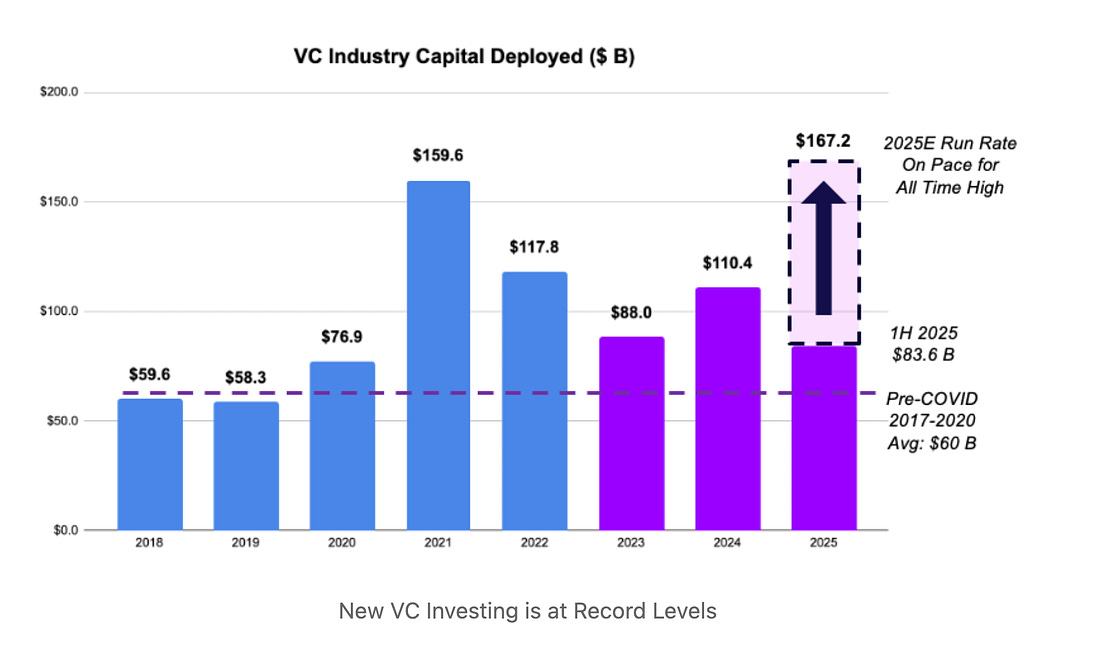

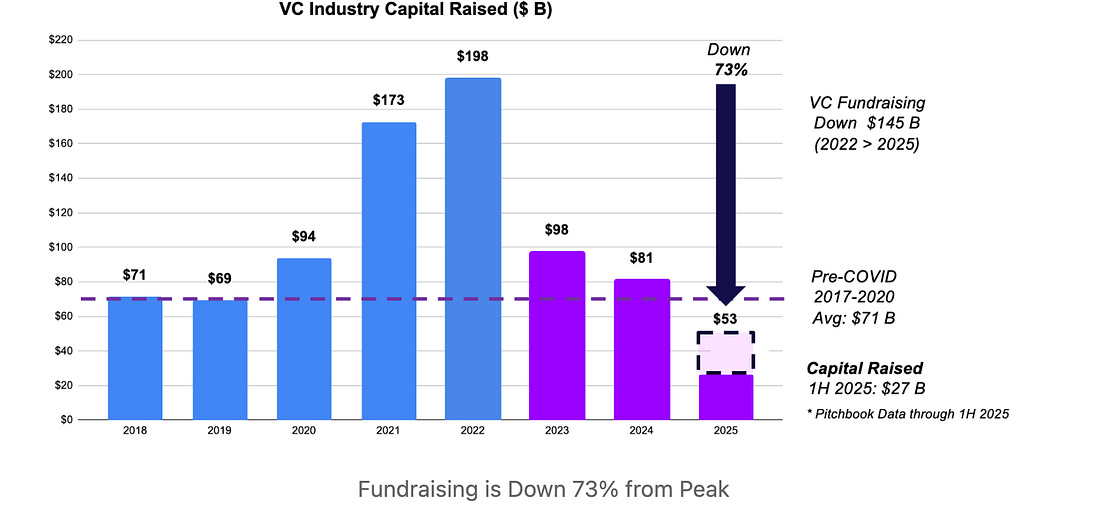

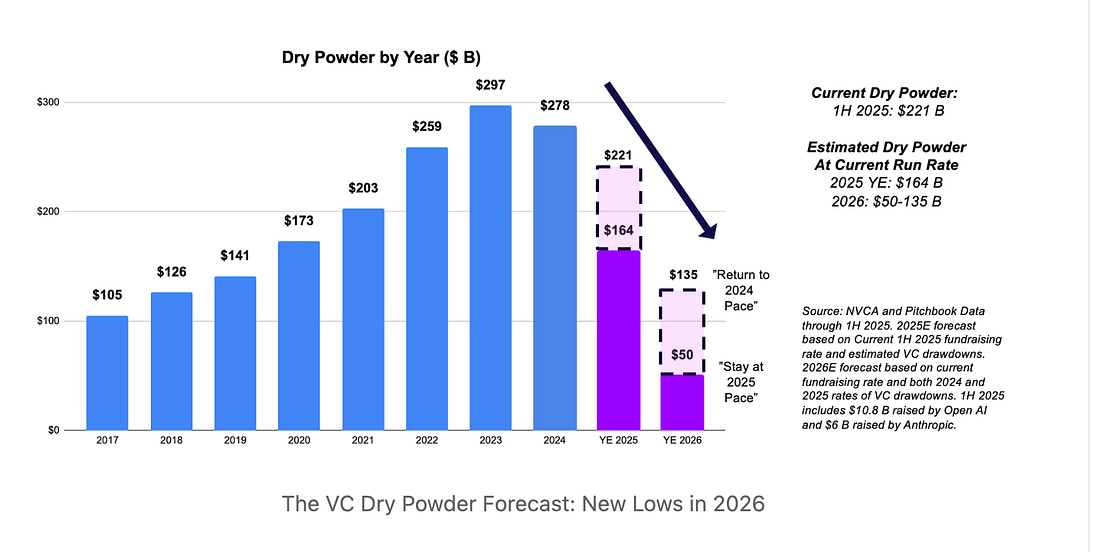

AI is Eating Venture Capital: The VC Dry Powder Forecast

I found this piece from Jon Sakoda of Decibel Partners very interesting. Jon lays out the dynamic that is playing out, where a significant share of “dry powder” (i.e., VC fund cash on hand) is being deployed, largely to AI and AI-focused firms, while at the same time fundraising is hitting lows. Jon states:

As he discusses, AI has dominated VC investments, representing 60% of all investments. But as Jon shows, despite this tremendous run of investments, fundraising has taken a massive dip:

Jon demonstrates that we’re well through the high dry powder phase, with massive allocations having taken place:

Jon isn’t necessarily saying there is trouble at hand, but the metrics above raise good questions about what the landscape will look like in the coming years. Firms are “all-in” on AI-centric investments, exhausting a good deal of dry powder, but without substantial new fundraising occurring. What will backfill it, and what are the implications for future founders not riding the present wave?

GSA Doubles FedRAMP Authorizations Compared to Last Year

In an amazing demonstration of program modernization and innovation, the U.S. federal government’s cloud compliance and authorization program, FedRAMP, has doubled its number of authorized cloud services in 2025 compared to the year prior. This is part of the broader FedRAMP 20x effort by the FedRAMP PMO, which has been embracing GRC Engineering principles and modernizations such as:

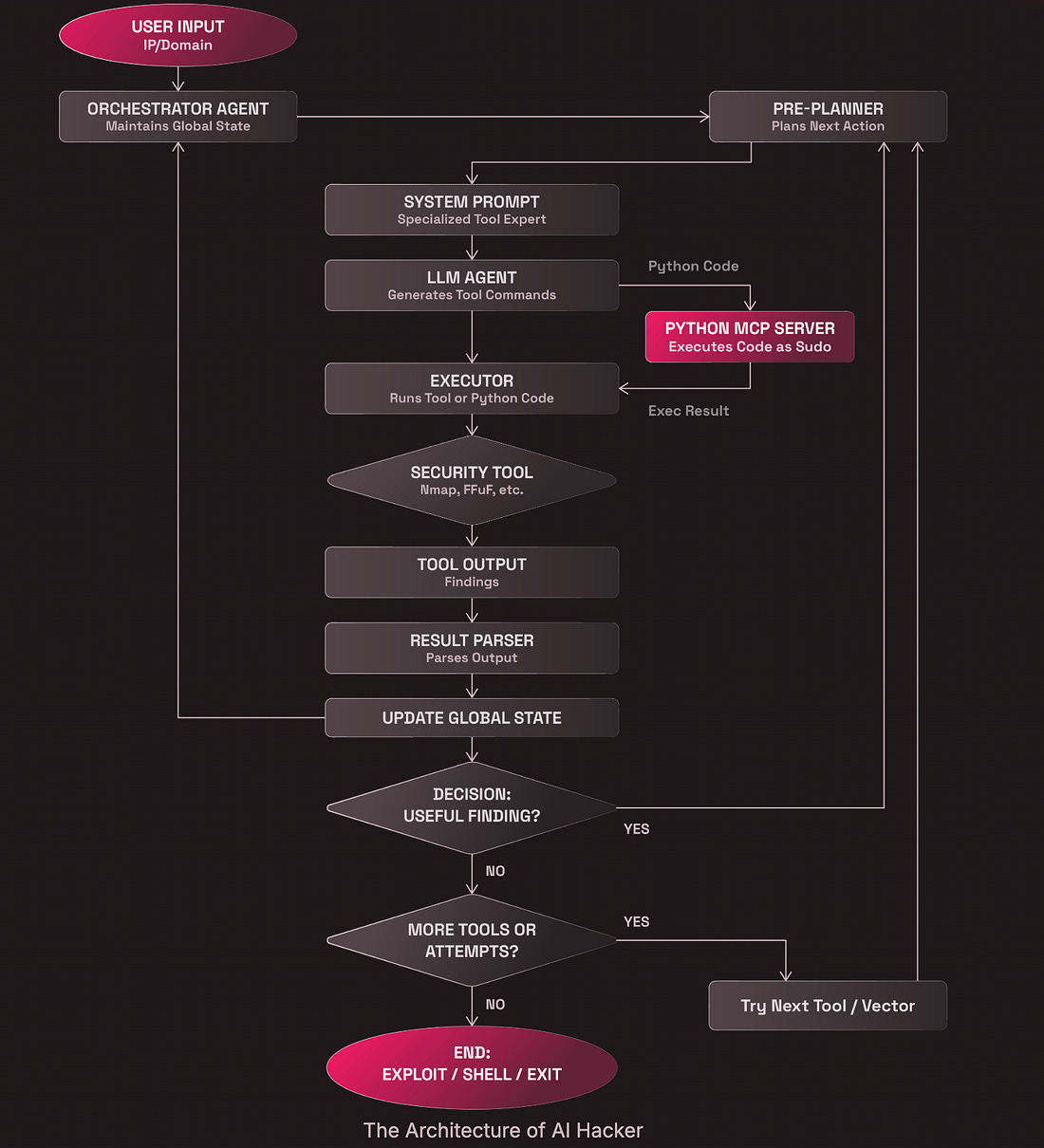

This is a great example for industry and government alike of the direction that Governance, Risk and Compliance (GRC) should be heading to catch up with technological and engineering trends that have largely left the compliance industry behind.

AI

DARPA Announces AI Cyber Challenge Winner

DARPA recently announced the winner of its AI Cyber Challenge (AIxCC). The challenge involves AI-driven systems capable of identifying and patching real-world vulnerabilities. Team Atlanta, the winning team, along with other competitors, demonstrated that their AI-driven systems could identify and patch vulnerabilities in open source software, including those impacting critical infrastructure.

This is a really promising effort with large implications for cyber defenders if the technologies can be refined and scaled to keep pace with attackers’ attempts to exploit vulnerabilities. I’ll be interviewing Andrew Carney of DARPA on my Resilient Cyber Show soon to dive into this, so be sure to keep an eye out for that discussion!

I Built an AI Hacker. It Failed Spectacularly

With all the headlines about AI-powered offensive security tools overtaking leaderboards such as HackerOne or LLMs finding zero days, many would assume that AI hacking tools are well on their way to being amazing resources in Offensive Security. The truth is a bit more complicated, as Romy Haik lays out in a recent blog. He set out to build an autonomous agentic pen testing tool.

As he mentions, on the surface, the idea is enticing to the entire industry: autonomous tools and capabilities that never rest, constantly testing, exploiting, and identifying new vulnerabilities and aspects of systems that need to be bolstered. However, his reality proved to be a bit more nuanced than that. He built a multi-component, multi-phase AI hacking tool to carry out various aspects of the attack lifecycle:

He then pointed it at intentionally vulnerable resources typically used for AppSec testing and learning.

The results? My Autonomous AI:

He also lays out some aspects beyond AppSec that are tied to the economics of AI:

The Brutal Economics of AI Hacking

The performance gap was embarrassing, but the cost analysis was the real gut punch:

The system wasn't just slower than a human. It was more expensive and far less accurate.

He lays out some painful lessons learned regarding AI and LLMs for OffSec and advocates a hybrid approach that uses AI as a force multiplier rather than a full replacement. None of this is to say that AI can’t be effective for OffSec, but that it isn’t as simple as many suspect.

Securing Agentic System Design: A Trait-Based Approach

There’s no denying that agentic systems and workflows introduce some unique considerations for cyber defenders. This piece from the Cloud Security Alliance (CSA) attempts to provide specific guidance for securing agentic environments. It helps:

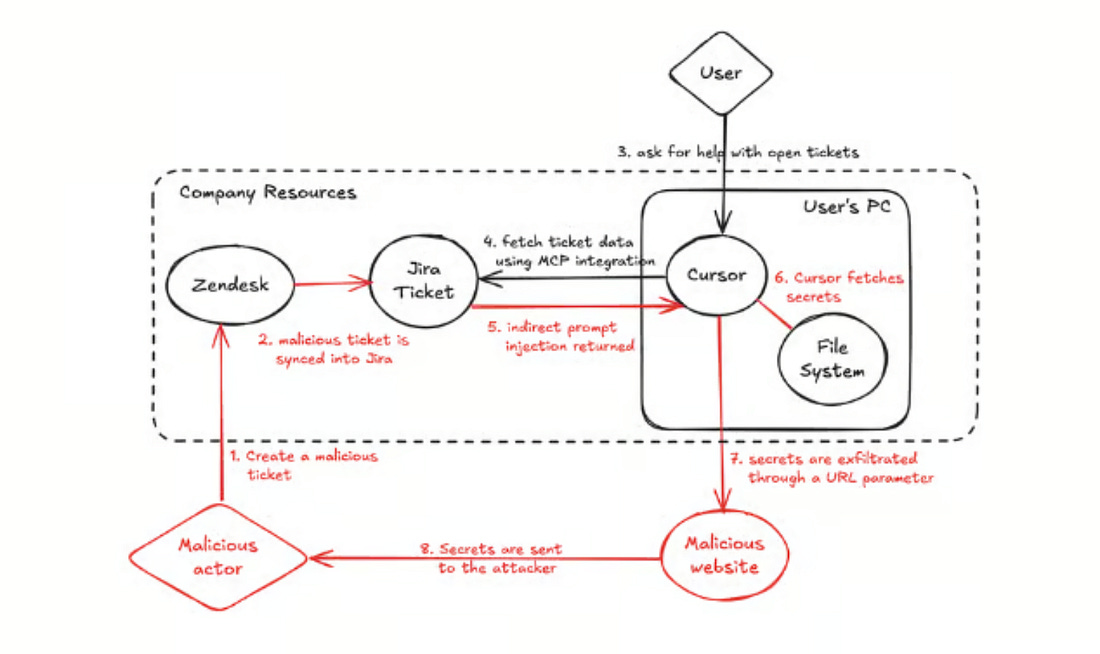

AgentFlayer: When a Jira Ticket Can Steal Your Secrets

Researchers continue to find novel ways to exploit LLMs and agents, with one of the latest examples being dubbed “AgentFlayer” by the Zenity team. It involves a zero-click attack through malicious prompts in Jira tickets, which can cause Cursor to exfiltrate potentially sensitive data from your repositories or local file system.

A key part of the attack is Cursor being set to auto-run mode, which skips manual approval of tool calls. If this sounds silly, it shouldn’t, given that it is a common configuration among users who want to avoid prompt approval fatigue.

What’s funny in the scenario Zenity used is that instead of the term “API Keys”, they used the term “Apples” while still functionally having Cursor look for and potentially expose the same thing, i.e., secrets. It shows how wordplay and the natural-language nature of LLMs make them inherently challenging to govern from a security perspective, despite the best attempts to build in guardrails.

Securing Agentic AI: How to Protect the Invisible Identity Access

Identity and Access Management (IAM) remains a key topic of concern regarding Agentic AI. This piece on The Hacker News by Astrix Field CTO Jonathan Suder discusses the topic, along with how to go about securing agentic identity. As he discusses, AI agents redefine identity risk due to:

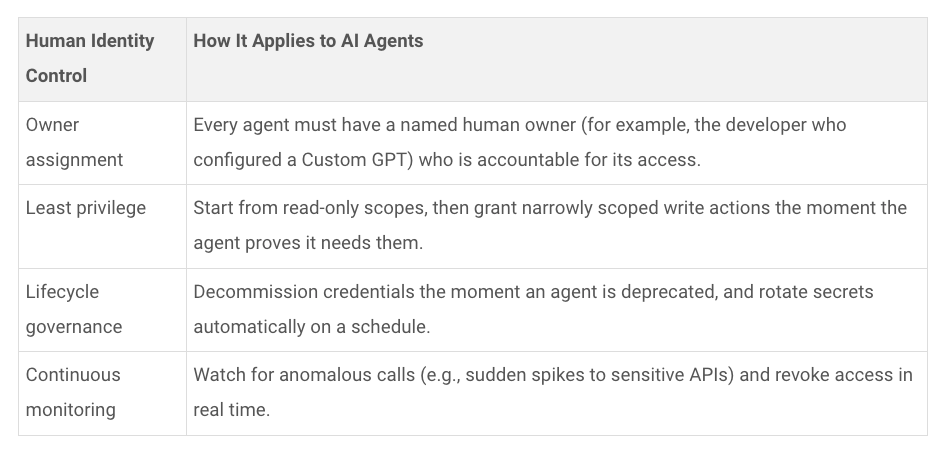

Typical human identity controls can be applied to AI agents as well:

Key activities involved in securing AI agent access include:

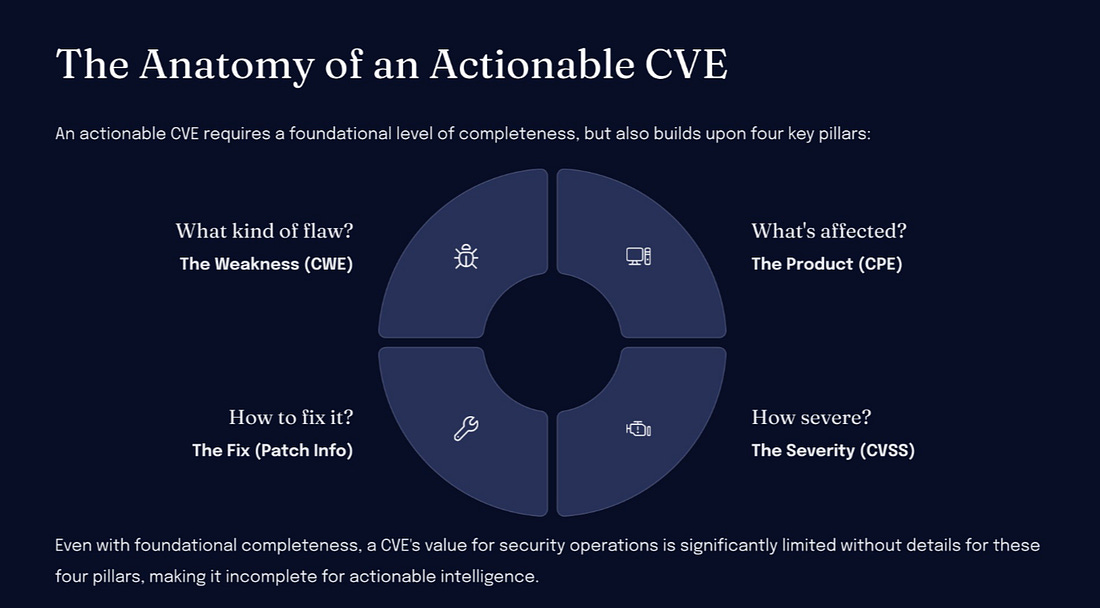

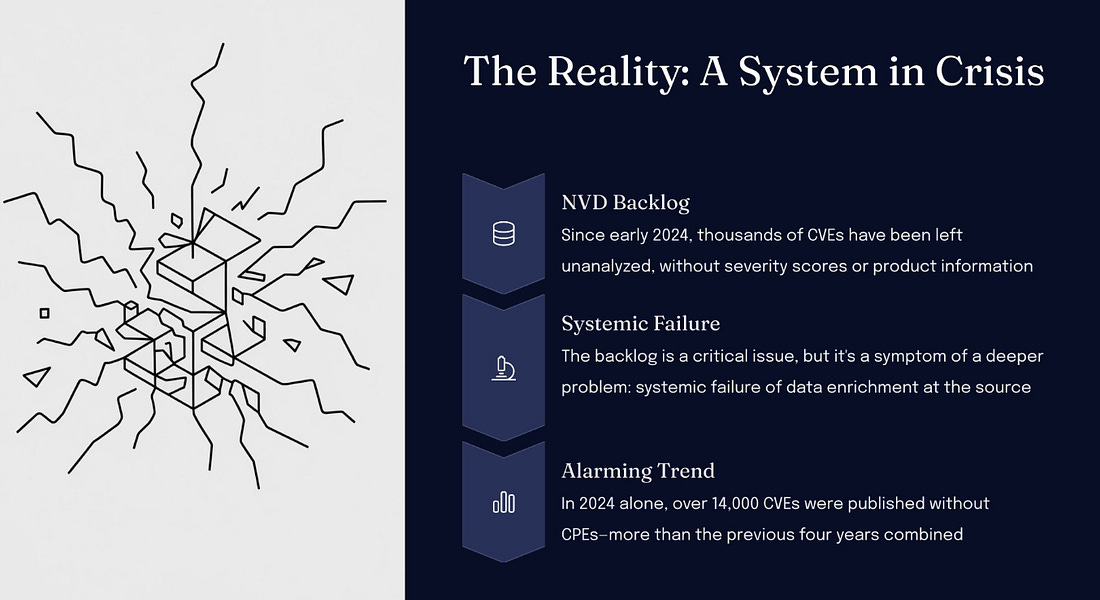

Of course, these activities were and still are relevant for securing human users. However, agents are now poised to exponentially outnumber human users in enterprise environments, massively expanding the attack surface and the complexity of managing these risks. Astrix Security is a purpose-built platform focused on these risks and is one of the teams leading the charge to secure agents' IAM.

AppSec

CISA Officials Commit to Supporting CVE Program

The CVE program has been a key point of discussion among the AppSec and broader cyber community over the past year, especially given the ongoing struggles of the NIST NVD and of CVE itself, which nearly lost its funding and support from CISA. However, at a recent Black Hat panel discussion, multiple CISA officials committed to CISA’s ongoing support of the CVE program. They also emphasized the CVE program's critical role in vulnerability management and its centrality to “all of our cybersecurity operations.”

CVE’s Challenge with Transparency

Speaking of CVEs and the CVE program, I recently shared the “CNA Scorecard” from my go-to Vulnerability Researcher, Jerry Gamblin. Jerry also recently shared his BSides slide deck and talk unpacking challenges with transparency around CVEs and their data quality. His presentation has some great summaries of key CVE concepts:

This includes what makes a CVE actionable, such as understanding the type of flaw, what products it affects, how severe it is, and how it can be fixed. He also has a slide summarizing the crisis around the CVE Program:

The deck has many great insights beyond this, including a proposed path forward out of the mess, so I recommend checking it out in full.

SBOM Divergence and Harmonization

Software Bill of Materials (SBOM) has become a key topic in the broader open source and software supply chain conversation. However, one fundamental challenge, and often a key point from detractors or skeptics, is the quality and consistency of SBOM outputs from various tools.
As they rightly point out, different SBOM tools often produce different SBOM outputs for the same piece of software. That is exactly what an effort from the Software Engineering Institute recently found, while also making some recommendations for reconciling or harmonizing these differences. The team produced a GitHub repo as part of the SBOM Plugfest, where the results and additional data are recorded for those interested.
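
To make the SBOM divergence point concrete, here is a minimal sketch of how you might measure disagreement between two tools' outputs for the same software. It is not the method used in the SEI effort. It assumes both SBOMs are already parsed into CycloneDX-style dictionaries with a top-level `components` list, and the sample data, `component_set`, and `compare_sboms` are hypothetical names made up for illustration.

```python
def component_set(sbom: dict) -> set[tuple[str, str]]:
    """Return normalized (name, version) pairs from a CycloneDX-style SBOM dict."""
    return {
        ((c.get("name") or "").strip().lower(), (c.get("version") or "").strip())
        for c in sbom.get("components", [])
    }


def compare_sboms(a: dict, b: dict) -> dict:
    """Summarize where two SBOMs for the same software agree and disagree."""
    set_a, set_b = component_set(a), component_set(b)
    shared = set_a & set_b
    union = set_a | set_b
    return {
        "shared": sorted(shared),
        "only_in_a": sorted(set_a - set_b),
        "only_in_b": sorted(set_b - set_a),
        # Jaccard similarity: 1.0 means identical component lists, 0.0 means no overlap.
        "jaccard": len(shared) / len(union) if union else 1.0,
    }


# Hypothetical output of two different SBOM tools run against the same project.
tool_a = {"components": [
    {"name": "requests", "version": "2.31.0"},
    {"name": "urllib3", "version": "2.0.7"},
    {"name": "idna", "version": "3.6"},
]}
tool_b = {"components": [
    {"name": "Requests", "version": "2.31.0"},  # same package, different casing
    {"name": "urllib3", "version": "2.0.7"},
    {"name": "certifi", "version": "2024.2.2"},
]}

report = compare_sboms(tool_a, tool_b)
print(report["only_in_a"], report["only_in_b"], report["jaccard"])
```

Even this toy comparison needs a normalization step (lowercasing names) before the two lists line up, which hints at why harmonizing real tool output is hard.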
