AI Adoption: Seatbelts, Air Bags & Oversight Optional

- Chris Hughes from Resilient Cyber <resilientcyber+resilient-cyber@substack.com>

A look at IBM’s 2025 Cost of a Data Breach Report: The AI Oversight Gap

Everywhere we look, organizations are racing to adopt AI, LLMs, agents, and more. AI has dominated venture capital allocation, the startup ecosystem, and discussions among business and technology leaders. Want to know what’s alarmingly absent from those conversations?

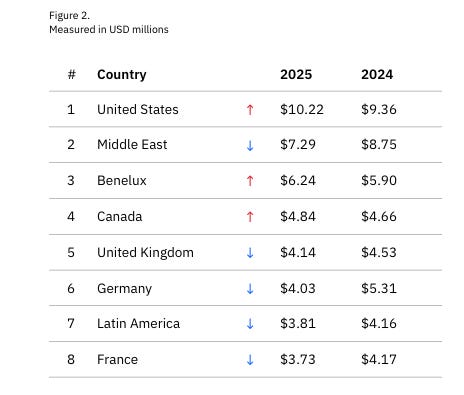

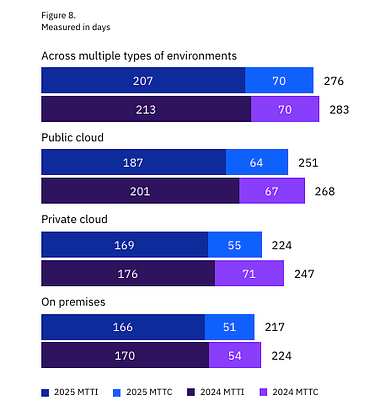

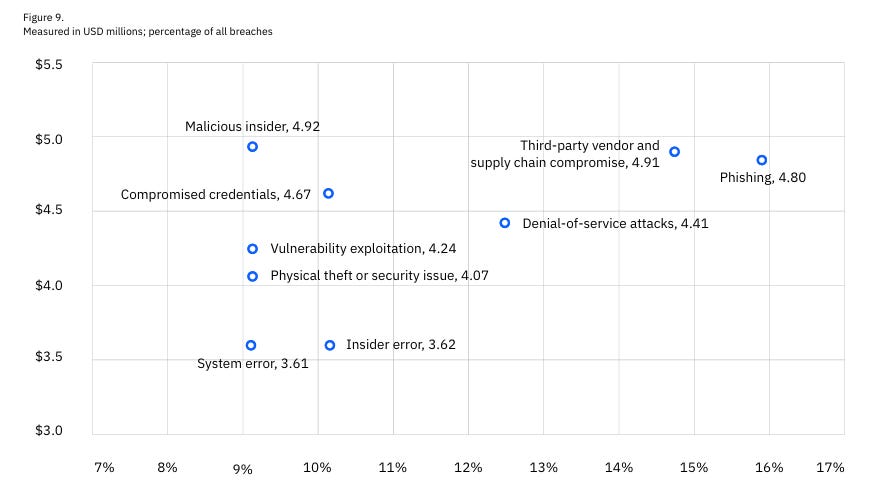

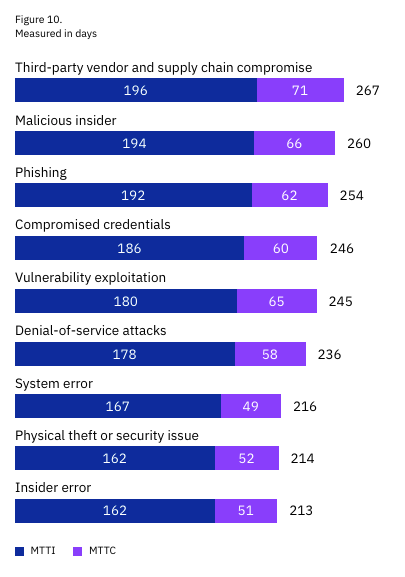

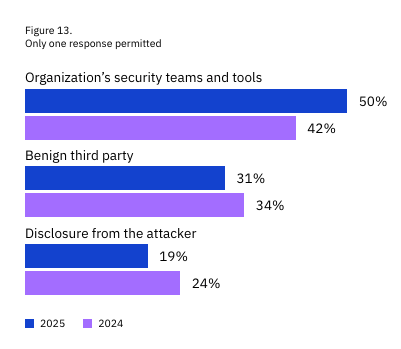

At least, that is the key theme of the latest IBM Cost of a Data Breach Report (2025). While the report covers a variety of topics, I’ll stick with the report’s theme and focus on the AI-specific findings. Below are the key findings summarized by the report:

One interesting stat from the report is that the average cost of a data breach fell globally but broke a record in the U.S., reaching an average of $10.22 million, a 9% increase from the year prior. The report attributes this figure to increased regulatory fines as well as higher detection and escalation costs. I found this statement somewhat ironic, given that the U.S. is openly embracing a push for deregulation while other regions, such as the EU, are seeking to increase regulation, especially around AI and cybersecurity.

The report goes into much more detail on specific industry verticals and more, but again, I want to focus on the report’s theme: a neglect of oversight related to AI, alongside claims of reduced incident costs as teams embrace AI from the defenders’ perspective.

Before we jump to that, though, there are a few painful things to call out. Despite the global decrease in incident costs (not you, U.S.!), it is taking hundreds of days to identify and contain data breaches across environments, whether cloud-native, hybrid, or on-prem. The most costly attacks include third-party vendor and supply chain compromises, phishing, and malicious insiders, although malicious insiders were less common.
Those same attack vectors also took the longest to identify and resolve:

One great thing to highlight is that organizations’ own security teams are getting better at identifying incidents and breaches, rather than finding out from a third party or the attacker themselves:

This seems to support the always-elusive Return on Security Investment (ROSI) metric that many security leaders struggle with when demonstrating value to the business.

AI Oversight - Not Even Once

Now for the concerning part and the report’s key theme. The report specifically calls out shadow AI and states that AI security is lacking.

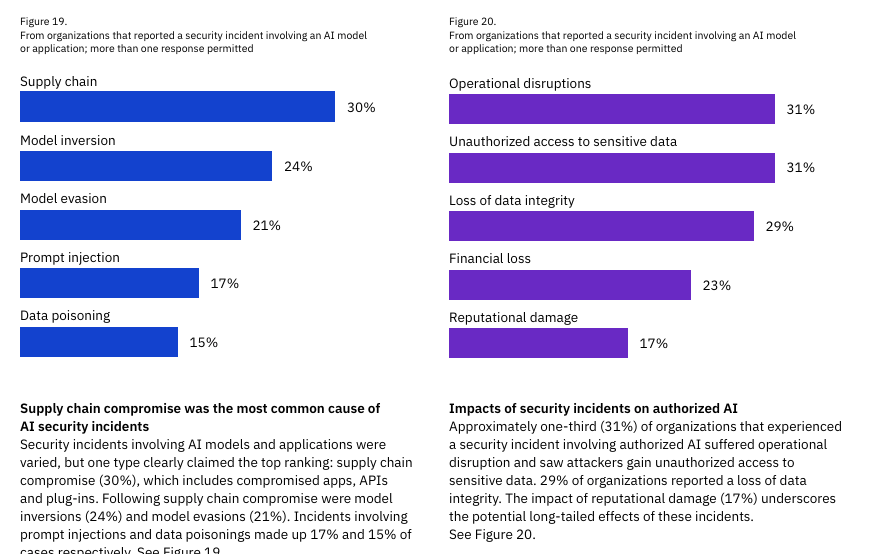

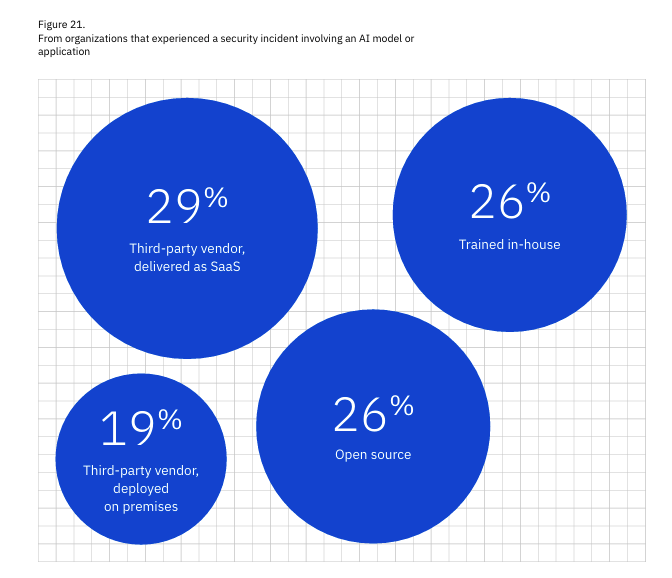

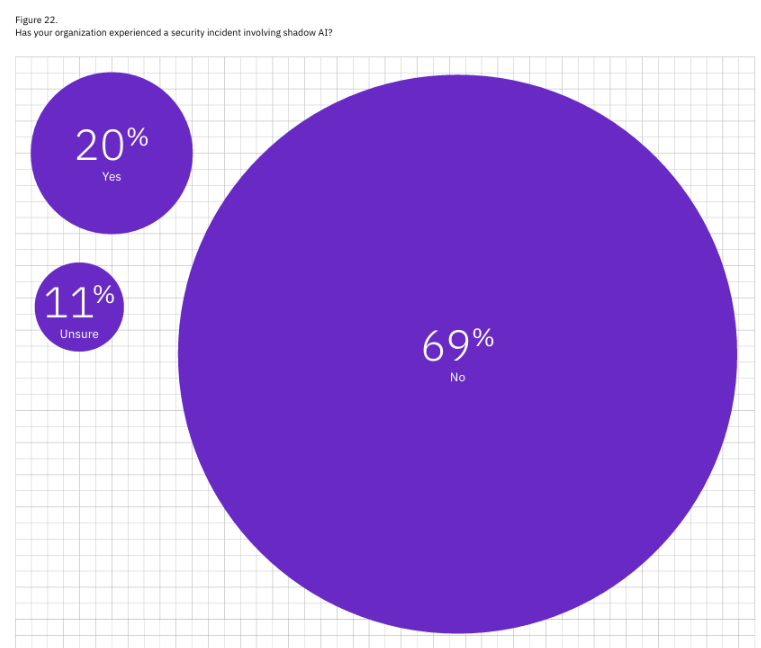

Among those incidents, the leading incident type was supply chain-related (e.g., external apps, APIs, and plugins), and these led to operational disruption and unauthorized access to sensitive data 31% of the time. This aligns with other research, which found that nearly 22% of all uploaded files and almost 5% of all prompts included sensitive data, often involving GenAI tools the organization didn’t even know were in use, let alone had authorized. The IBM findings also emphasize how quickly AI has been integrated into organizational workflows and into the software, products, and services organizations use, given how often these incidents lead to operational disruptions.

In terms of the type of AI systems involved in the incidents, 29% were provided by external third-party vendors, while 26% were trained in-house. This shows that organizations aren’t just using external AI vendors but are also routinely attempting to train models in-house, which makes them responsible for hosting and using those models securely. To further emphasize how prevalent shadow AI usage is, the report highlights that security incidents involving unsanctioned AI are more common than those involving sanctioned AI: shadow AI was involved in 20% of the breaches, whereas only 13% involved AI usage the organization was already aware of. Most concerning of all, though, is the fact that:

Organizations have poor visibility into their AI consumption, echoing the early days of cloud and SaaS (which are still problems today). The report highlights that shadow AI incidents cost more, up to $200,000 more than typical incidents, and often involve exposure of customer and employee PII, IP, and other sensitive data. In our era of hype-driven, fear-of-missing-out (FOMO) AI adoption, where organizations are afraid of falling behind their peers, whether internal or external, organizational AI governance lags behind AI adoption.

The above line should alarm security professionals and excite attackers, AI security startups, and investors alike. Most organizations simply haven’t addressed AI governance, even though the business and developers are off and running with AI services, products, models, and more. Two things also jumped out at me:

This inevitably leads to problems I have discussed in other articles, where legacy manual approval and assessment processes drive shadow usage as the business works around these siloed, ineffective approaches. We’re literally repeating the painful mistakes of the past, including those tied to SaaS, such as:

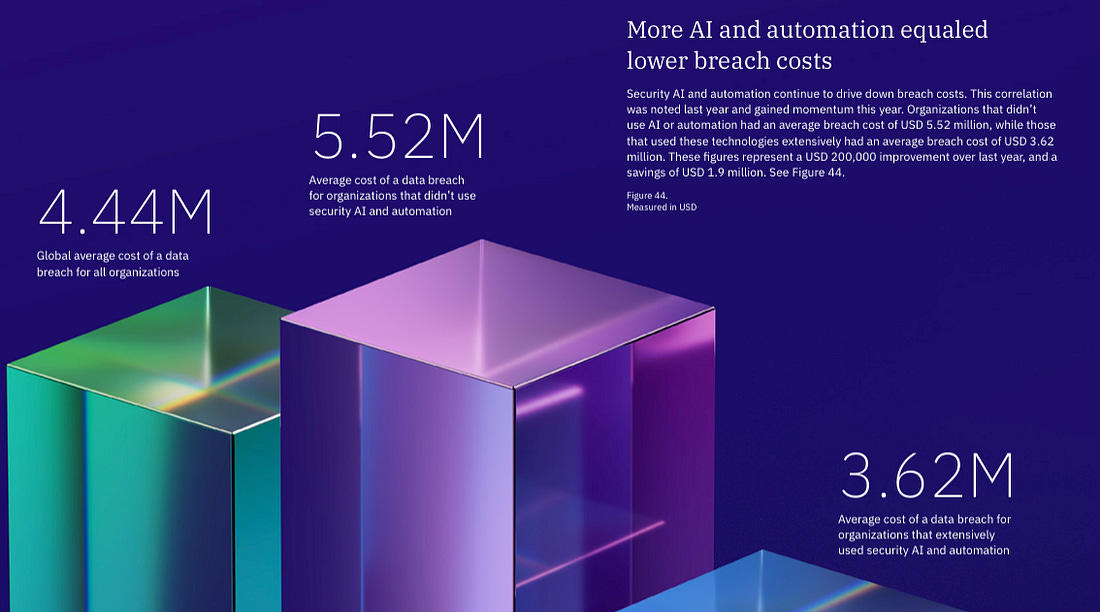

Security AI and Automation

To be fair, I also want to highlight another interesting takeaway from the report. While organizations with poor AI governance, visibility, and security are experiencing more costly and impactful incidents, those embracing AI for security are seeing lower average data breach costs. This reflects the dual nature of AI and security: AI needs to be secured, but it can also be used for cybersecurity to address systemic challenges in key areas such as GRC, AppSec, and SecOps, among others. These reduced incident costs are being driven by factors such as:

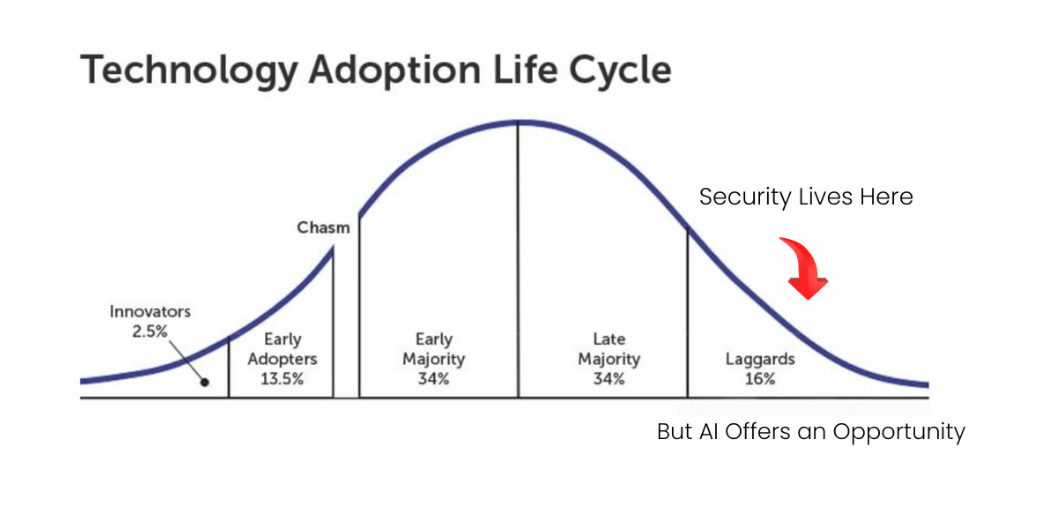

Another refreshing metric was that security teams are adopting AI at the same rate as other business functions:

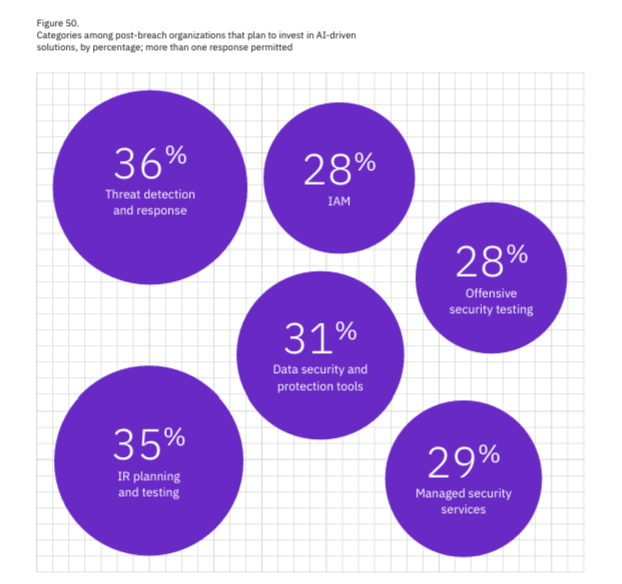

This is something I discussed in depth in my article “Security’s AI-Driven Dilemma: A discussion on the rise of AI-driven development and security’s challenge and opportunity to cross the chasm”. It demonstrates that the cybersecurity community is learning from past mistakes, when it was a laggard and late adopter of emerging technology. Instead, it is looking to be an early adopter of AI and apply it to various cybersecurity use cases. This also explains why we’re seeing a TON of investment in cybersecurity startups that leverage AI to tackle various cybersecurity use cases. The IBM report even provides insights into the areas where organizations plan to invest in AI-driven solutions (take note, founders, incumbents, and investors):

Closing Thoughts

AI represents a unique time in our technological evolution within the cybersecurity space. On one hand, we’re perpetuating the problems of the past, with a lack of governance and oversight, manual review processes, and rampant shadow usage. On the other hand, we’re seeing security lean into being an early adopter and innovator with this emerging technology, rather than always being a late adopter and laggard.

This dichotomy represents the double-edged nature of AI more broadly: it offers tremendous potential but also significant problems if adopted poorly. It remains to be seen which path we take in the long term, but I’m personally bullish on AI’s potential to tackle, or at least help address, longstanding cyber challenges.