What we’ve learned about building AI-powered features

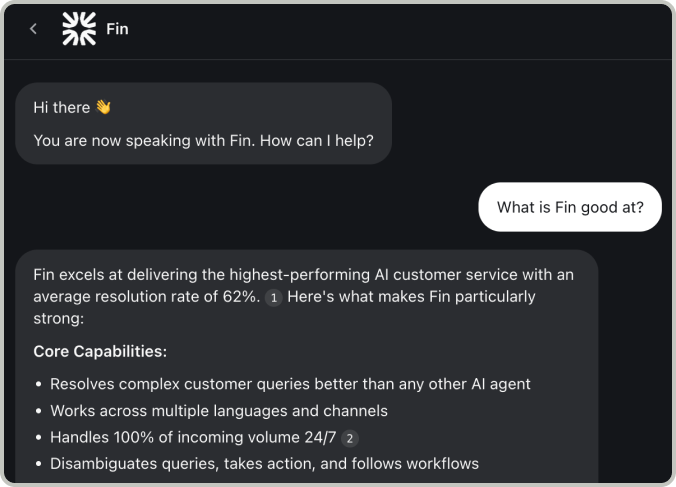

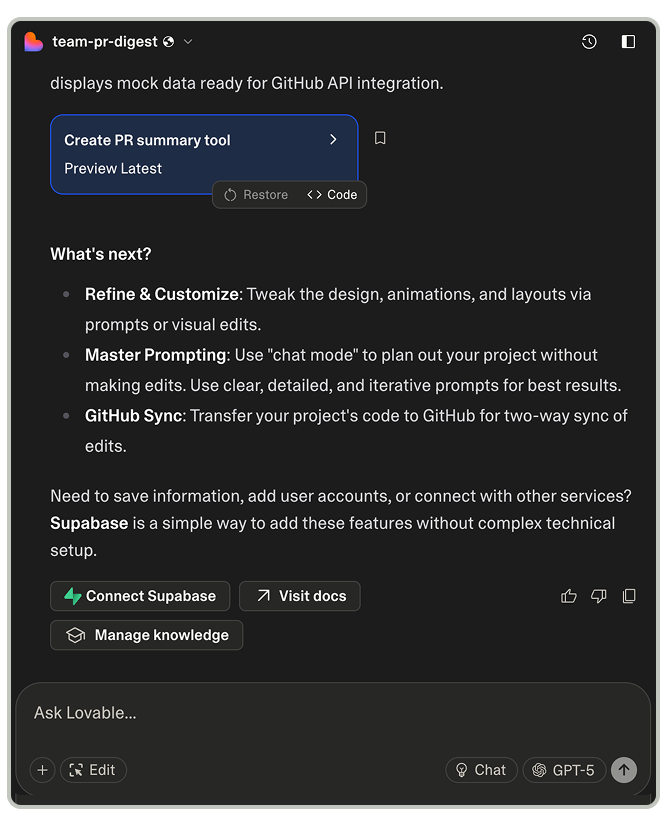

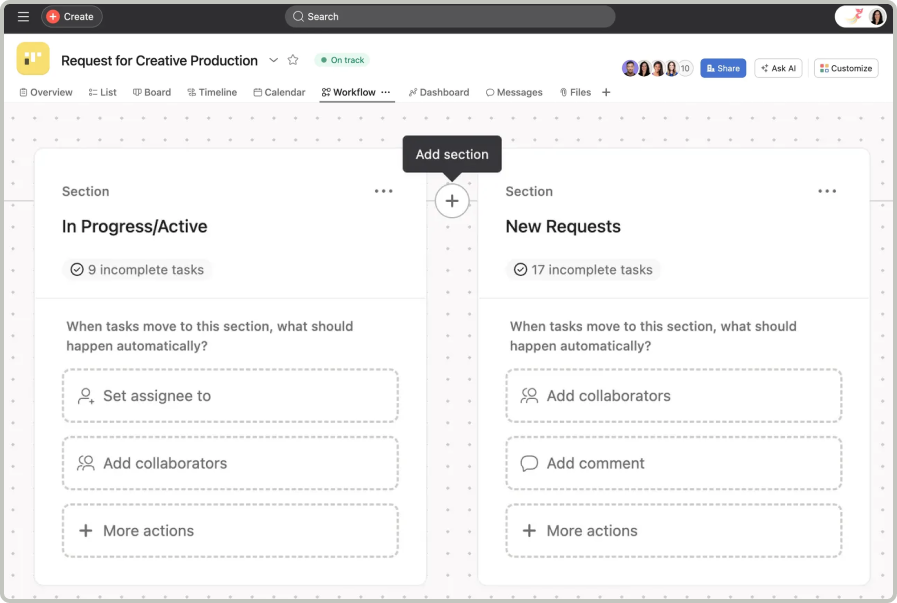

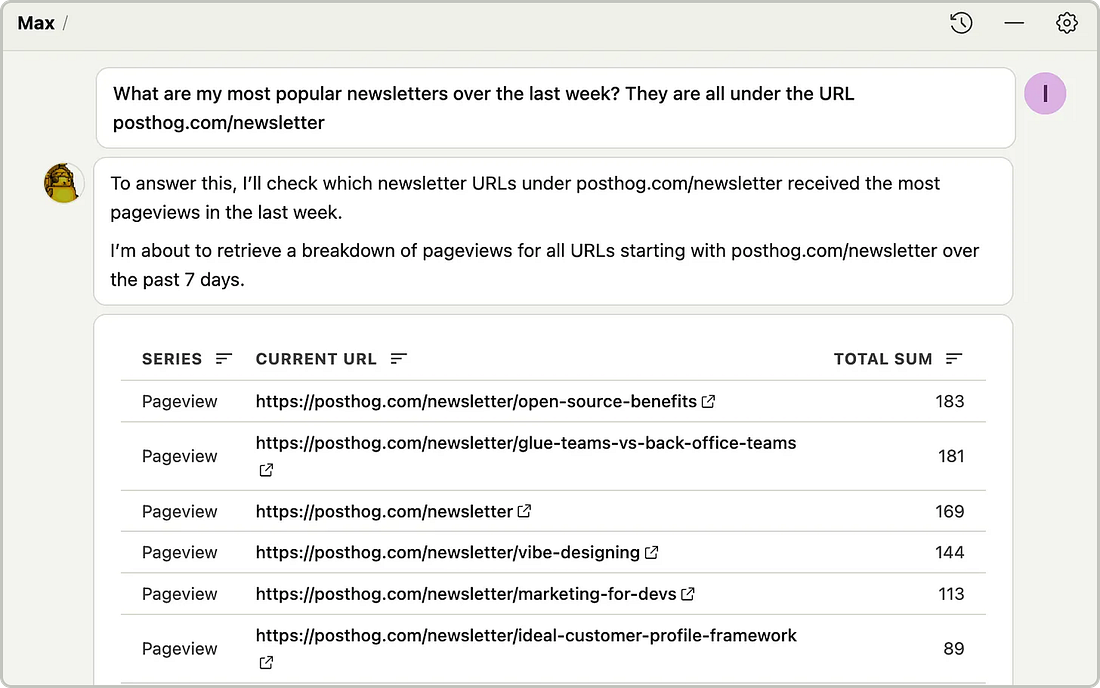

How to choose the right features, implement them the right way, and make them better (for real)

AI feels like a gold rush. Everyone’s staking claims and panning for quick wins. A minority are building mines that create long-term value, but most of the shiny demos are fool’s gold: bolted-on, rarely used, and quick to tarnish.

We took our time entering this gold rush. In 2023, when many started adding AI-powered features, we tinkered but felt the model capabilities weren't good enough.

A year later, in an August 2024 hackathon, we built a trends generator agent and it was obvious things had changed. Given the proper context, models were now good enough to generate useful insights.

That was 12 months ago. Since then, we shipped Max AI, our AI-powered product analyst, and we’ve learned a bunch of lessons along the way. The first one? Don’t make your product worse.

Choosing what to build

Yes, AI really can make your product worse if you choose to build the wrong thing, whether because it’s too slow, it’s unreliable, or it solves a problem no one cares about. There are three key lessons here:

1. Learn the patterns AI is good at

You don’t need to reinvent the wheel. A bunch of smart people have already figured out effective AI patterns you can copy. These have the advantage of being UX patterns that users are familiar with, while also being functionality AI is actually good at.

First is the classic “chat with your docs/data/PDF.” AI is great at search and summarization, and can use this to build reports and recommendations.

Second are generators of various kinds: titles, code, documents, SQL, images, and filters. App builders like Lovable and Bolt.new are the most notable examples, but numerous companies, such as Figma, Rippling, and Notion, have integrated generation features into their products.

Third, and finally, is tool use. AI can use well-defined tools. This is what MCP servers are all about. Companies like Zapier, Atlassian, Asana, and many more have used this to automate and improve workflows. And yes, there’s a PostHog MCP server, too.

Knowing the common AI patterns helps you identify where you can use them in your product. Max AI uses several of these, including:

Soon, Max AI will go further in its tool use by watching and analyzing session recordings for you, among other (somewhat secret) things.

2. Identify problems that AI might solve

“Ask not what you can do with AI, but what AI can do for you.” - JFK (I think)

With the patterns AI is good at in mind, go through your product and figure out the jobs to be done that AI could potentially do, such as:

As Stephen Whitworth of incident.io said in Lenny's Newsletter:

We’ve applied this thinking in many ways, such as:

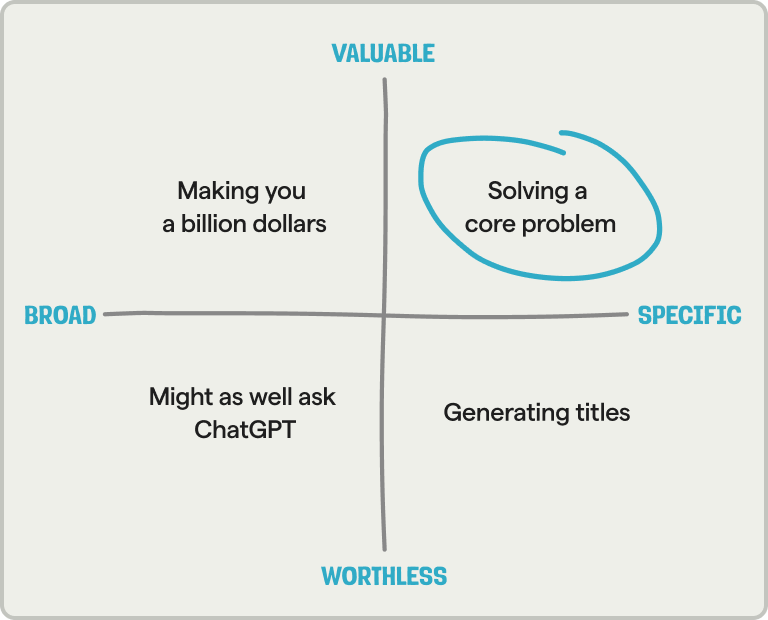

3. Validate the problem is specific and valuable

Now that you have some patterns and problems, narrow them down to the ones that are both specific and valuable. Avoid these traps:

When building Max, we quickly realized that questions like “How do I increase revenue?” were too broad to answer well. Instead, we focused on building specific functionality that leverages Max’s advantage of being integrated into PostHog with your PostHog context.

For example, Max can write better SQL because it knows which tables are available, and answer product questions with native visualizations because it’s built-in and understands the tools available.

Implementing your idea

Now that you have an idea of what you want to build, you need to make sure it actually works. Here are some core bits to focus on getting right:

4. Your app’s context and state are critical

Everyone can call the OpenAI API, but your app's context is unique. This can include data like:

When a user asks Max why signups dropped last week, for example, the API receives information on the:

The code for this literally looks like this:

You also need to handle “context” within the workflow (aka state). As the conversation progresses, you don’t want that context to be lost, and this is especially likely to happen when you have multiple sub-agents. To get this right, we store and include context through every part of the workflow like this:

We find doing this, combined with optimizing our model choice, is more effective and useful than fine-tuning a model would be.

5. Steer AI to success with query planning and conditional routing

AI will run off and do all sorts of crazy things if you let it. It needs guidance to be successful. We do this by orchestrating and chaining together multiple steps like query planning → data retrieval → visualization. Beyond state management, this requires:

In PostHog, at the highest level, this functionality comes from a router like this:

Each node of the router then has its own conditions to route through to get to the right data and tools for the job. This ensures the AI has the pieces it needs to complete the task and makes successfully completing that task more likely.

6. Plan for failure by adding monitoring, guardrails, and error handling

Ideally all the structure you’ve built up to this point prevents failure, but you still need to give the AI guardrails because it will inevitably smash into them.

First, you need to know when something goes wrong, so implement monitoring from the beginning. Georgiy from our Max AI team relayed how important this is:

Second, anything an AI can hallucinate, it will hallucinate. To prevent this, we are explicit about the data that needs to be set directly, and the rules it needs to follow. In our AI installation wizard, for example, we include rules like:

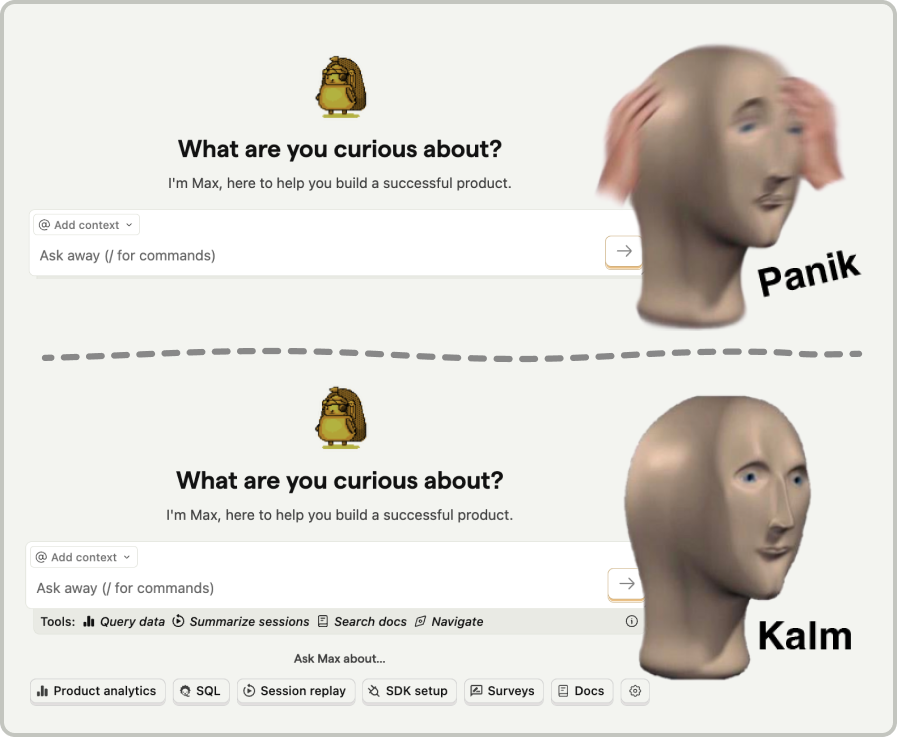
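To make the "be explicit about the rules" idea concrete, here is a minimal sketch, assuming the model is asked for a small JSON reply. It states the rules in the prompt and then checks the reply against an allow-list instead of trusting it. Every name here (`ALLOWED_EVENTS`, `build_system_prompt`, `validate_reply`, and the JSON shape) is hypothetical and for illustration only; this is not the code from our installation wizard.

```python
import json

# Hypothetical allow-list: the only event names the model may reference.
ALLOWED_EVENTS = {"signup", "pageview", "purchase"}
EXPECTED_KEYS = {"event", "days"}


def build_system_prompt(allowed_events):
    """State the rules explicitly rather than hoping the model infers them."""
    names = ", ".join(sorted(allowed_events))
    return (
        'Reply with JSON of the form {"event": <name>, "days": <int>}.\n'
        f'Rule 1: "event" must be one of: {names}.\n'
        "Rule 2: never invent event names or add extra keys."
    )


def validate_reply(raw_reply, allowed_events):
    """Return (parsed, errors). Any error means: do not act on the reply."""
    try:
        data = json.loads(raw_reply)
    except json.JSONDecodeError:
        return None, ["reply is not valid JSON"]
    if not isinstance(data, dict):
        return None, ["reply is not a JSON object"]

    errors = []
    event = data.get("event")
    if not isinstance(event, str) or event not in allowed_events:
        errors.append(f"unknown event: {event!r}")
    days = data.get("days")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if not isinstance(days, int) or isinstance(days, bool) or days <= 0:
        errors.append("days must be a positive integer")
    extra = set(data) - EXPECTED_KEYS
    if extra:
        errors.append(f"unexpected keys: {sorted(extra)}")
    return (data if not errors else None), errors
```

In a setup like this, a rejected reply can be logged and retried with the error messages fed back to the model, which also gives your monitoring something concrete to count.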

You also need guardrails for people. When people see an empty text box, they get scared and forget everything. The solution? Add suggestions for how they can use your AI-powered features, nudge them in the right direction, and help them remember what’s possible.

Beyond issues with humanity and hallucination, sometimes your workflows just break. You need to be able to handle these gracefully with retries and rate limiting.

For real pros, you can also set up LLM analytics, error tracking, and feature flags to help. Conveniently we provide all three, which is a weird coincidence.

Improving your feature

AI models are evolving rapidly and in unpredictable ways, so your AI-powered features require more maintenance and continual improvement than you might expect. From experience, here's what we've found is most important when trying to do this:

7. Avoid AI knowledge silos

Building AI-powered features shouldn’t be the responsibility of some “AI guy” on your team. AI should be deeply integrated into your product and this means you need the expertise of the people talking to users and building something for them. There are a few ways you can encourage this:

8. Focus on speed

One of the big challenges with AI-powered features, especially complex ones, is that they are slow. A workflow can often mean multiple calls to LLM providers, which can add up to a lot of time waiting for responses. This can be especially frustrating when alternative ways to complete a task exist in an app or website.

As the founder of Superhuman, Rahul Vohra, noted in Lenny’s Newsletter:

Some ways to improve this:

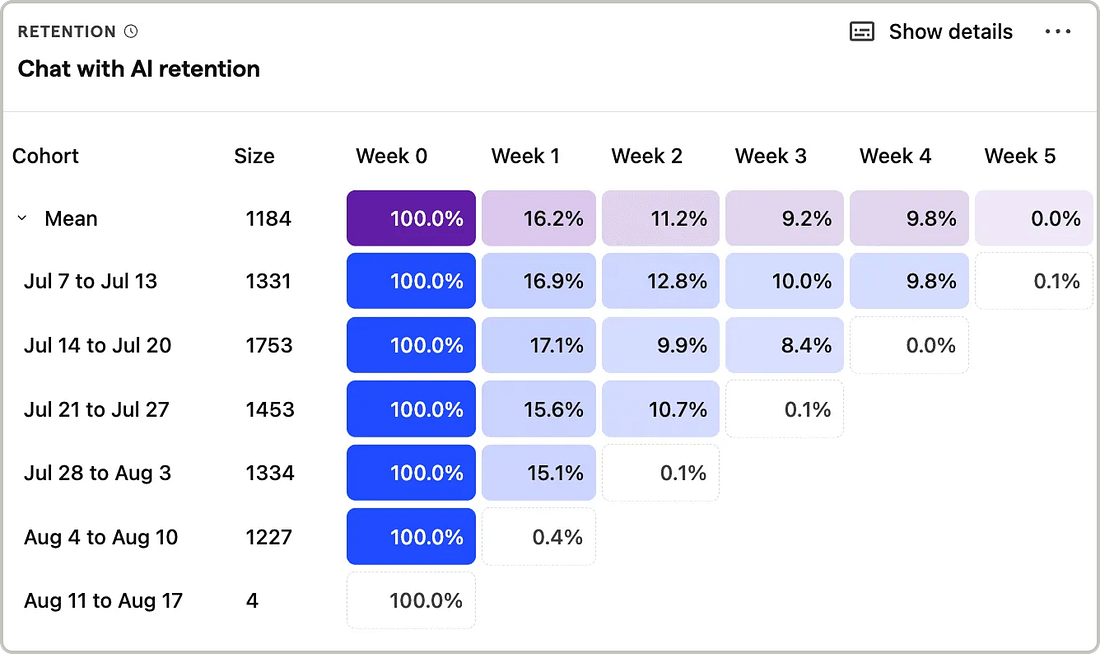
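One general way to cut the waiting time when a workflow makes multiple LLM calls is to run the calls that don't depend on each other concurrently. The sketch below is an illustration of that technique only, not a description of how Max works: `fake_llm_call` is a hypothetical stand-in that sleeps instead of calling a provider, and the step names are made up.

```python
import asyncio
import time


async def fake_llm_call(name, seconds):
    """Hypothetical stand-in for a network call to an LLM provider."""
    await asyncio.sleep(seconds)
    return f"{name}: done"


async def run_sequential(steps):
    # Each call waits for the previous one, so total time is the sum.
    return [await fake_llm_call(name, secs) for name, secs in steps]


async def run_concurrent(steps):
    # gather() runs the calls concurrently and keeps results in input order,
    # so total time is roughly that of the slowest call.
    return await asyncio.gather(*(fake_llm_call(n, s) for n, s in steps))


def timed(coro_fn, steps):
    """Run an async workflow and return (results, elapsed seconds)."""
    start = time.perf_counter()
    results = asyncio.run(coro_fn(steps))
    return list(results), time.perf_counter() - start


if __name__ == "__main__":
    steps = [("summarize", 0.2), ("suggest title", 0.2), ("pick chart", 0.2)]
    _, slow = timed(run_sequential, steps)
    _, fast = timed(run_concurrent, steps)
    print(f"sequential: {slow:.2f}s, concurrent: {fast:.2f}s")
```

This only helps when the steps are truly independent. A chain like query planning → data retrieval → visualization has to stay sequential, because each step needs the output of the one before it.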

9. Constantly monitor and evaluate effectiveness

Your new feature shouldn’t be judged less strictly just because it’s ✨ AI ✨. Not only can the wrong idea make your product worse, but changes in models can also degrade the experience without your knowledge. These are the methods we’ve found work best for evaluating effectiveness:

Wrapping up

These nine lessons are not isolated takeaways; they work in tandem. It’s a mistake to skip to the end and think that optimizing evals = building a great product.

Remember, you’re aiming to build something valuable to users, not shiny tech demos. Just because it’s AI does not mean users will find it valuable. All the lessons you’ve learned about building great products still apply. Talk to users, ship fast, run experiments, and repeat.

Words by Ian Vanagas, who wrote this newsletter by hand, as much as he would have liked to one-shot it with AI.

🧑‍💻 Jobs at PostHog

Did this post get you nodding along? Looking to join a team that builds like this? We’re hiring product engineers and more specialist roles like:
