Resilient Cyber Newsletter #72

- Chris Hughes from Resilient Cyber <resilientcyber@substack.com>

Cyber Threat Snapshot, Mastering Cyber Budgets, AI Security M&A, No AI Bubble, AI Agent Security Summit, 2025 State of Dependency Management & AI Coding Agents Under Attack

Welcome!

Welcome to issue #72 of the Resilient Cyber Newsletter. As we continue to move through the year, AI remains a dominant topic of discussion, not just from the cybersecurity angle, with vulnerabilities, risks, and the need for governance, but also from the perspective of economics, GDP, and market ramifications.

So, without further delay, let’s walk through the hot topics and resources of the week.

Interested in sponsoring an issue of Resilient Cyber? Sponsorship reaches over 40,000 subscribers, ranging from developers, engineers, and architects to CISOs, security leaders, and business executives. Reach out below!

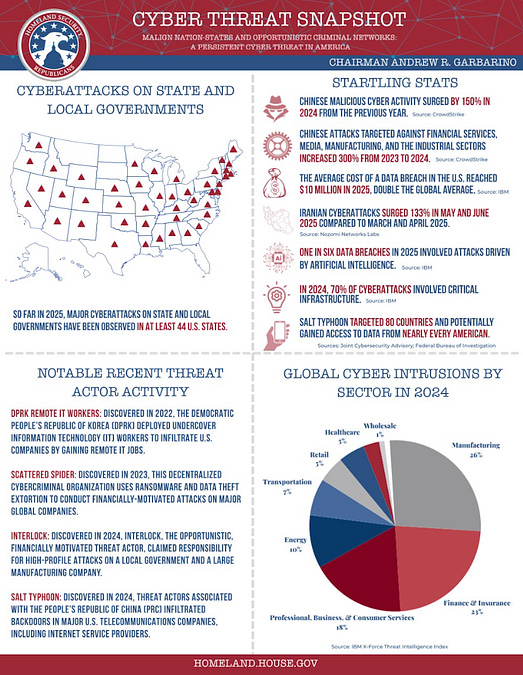

Cyber Leadership & Market Dynamics

Cyber Threat Snapshot

The U.S. House Committee on Homeland Security (HCHS) recently provided a snapshot of 2025 cyber attacks and malicious activities.

This and much more is in the snapshot, which is worth a quick read.

Resilient Cyber w/ Ross Young - Mastering the Cybersecurity Budget

In this episode, I sat down with a friend and ex-CIA officer turned cybersecurity leader, Ross Young of CISO Tradecraft. Ross and I touched on a lot of great aspects of cybersecurity budgets, including:

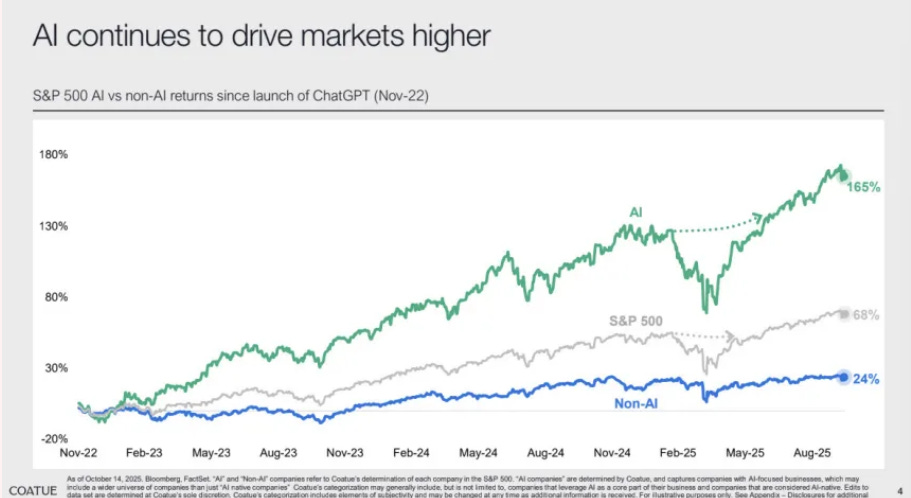

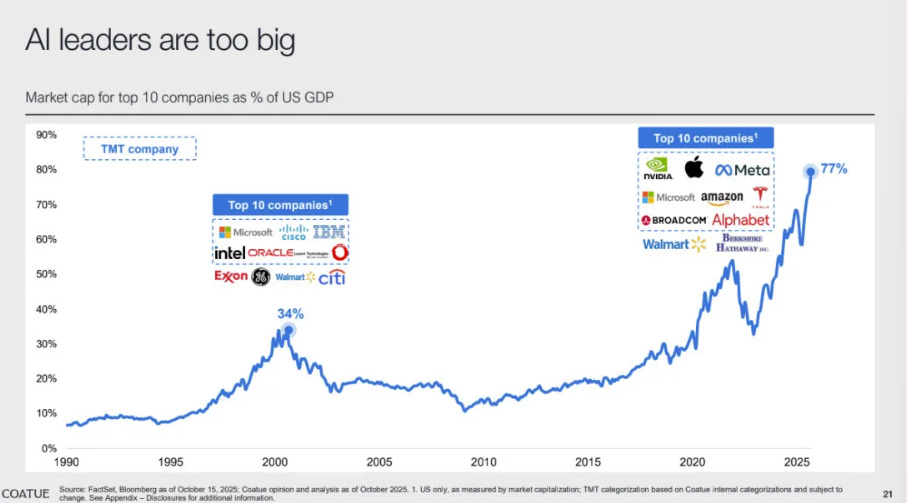

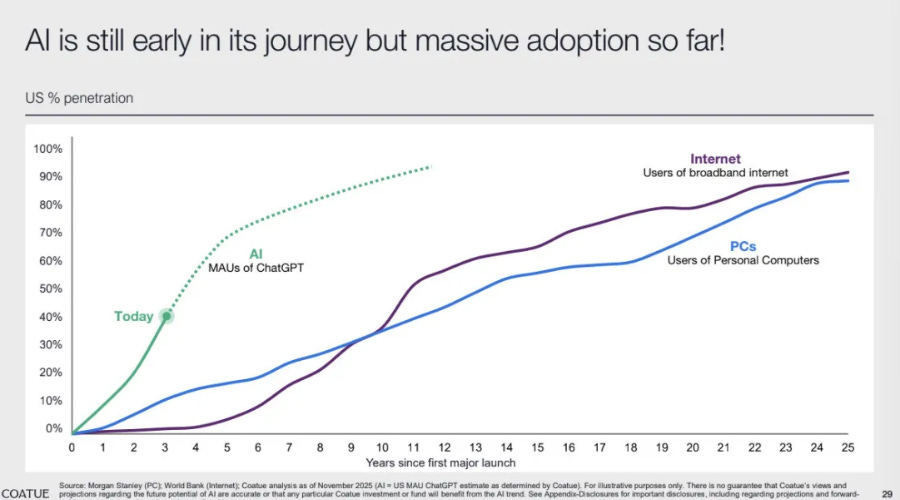

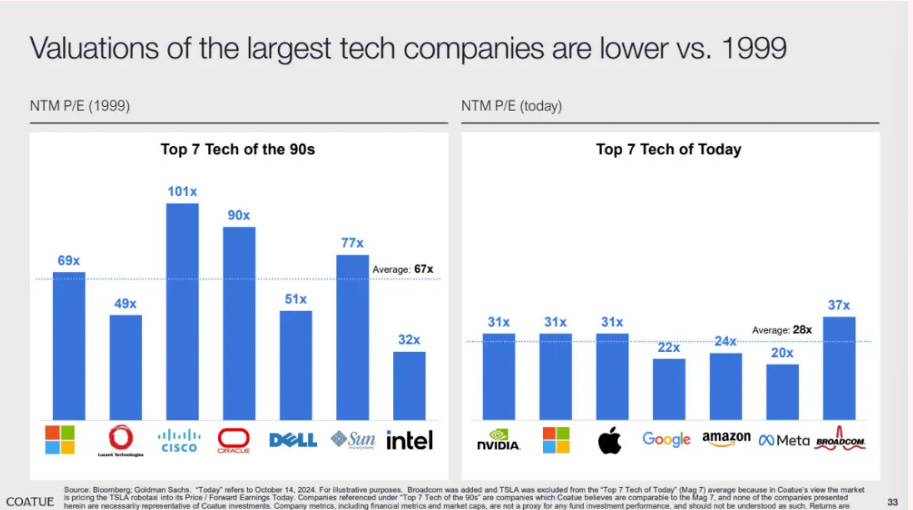

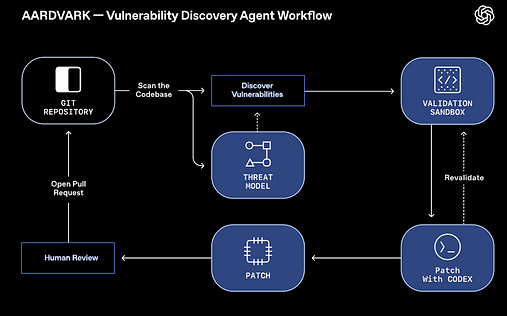

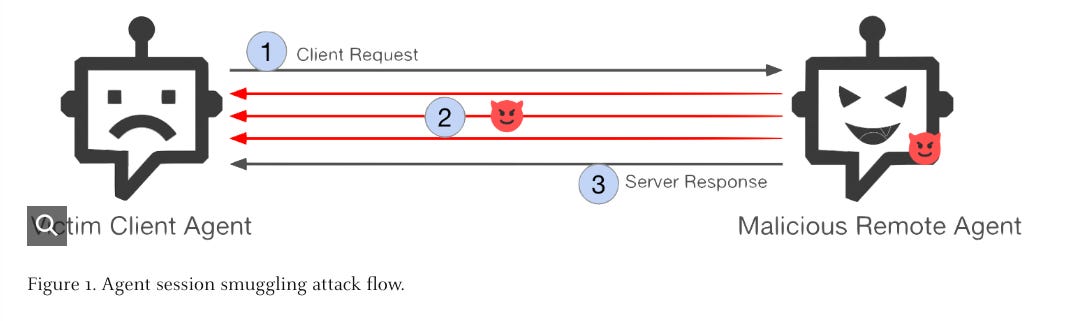

Ross also recently launched a virtual course titled “Master the Budget Game in Cybersecurity”. It includes 8 hours of CPEs, 30 bite-sized modules, and downloadable templates. The course is currently 50% off, so I recommend checking it out now!

The AI Security M&A Spree Continues

2025 has been a year marked by big headline AI security acquisitions, and that trend doesn’t seem to be over yet. The latest example is Zscaler’s acquisition of SPLX. The play seems aimed at enhancing Zscaler’s Zero Trust Exchange, focusing on runtime AI guardrails, proactive AI asset discovery and automated red teaming. This of course bolsters Zscaler’s AI security capabilities while also giving SPLX access to Zscaler’s massive customer base.

Zscaler is one of the cyber industry giants that dominate the ecosystem, and it has an excellent leader in Jay Chaudhry. For a deeper look at Zscaler, both its history and its plans for the future, I recommend checking out the Inside the Network interview with CEO Jay Chaudhry.

A New Generation of Sequoia Stewards

The Sequoia team took to the Internet this week to share a letter from Roelof Botha, in which he announced that Alfred Lin and Pat Grady will lead the firm moving forward. Sequoia of course is one of the most dominant and well-known VC firms in the ecosystem, with an incredible list of investments and teams they’ve built to their credit.

AI Market Bubble? Perhaps Not

Coatue recently released a public markets report, with much of it focusing on the AI bubble debate. They point out that AI continues to drive markets higher.

While they point out that the top 10 companies in the U.S. do represent an outsized portion of GDP at 77%, they also point out that these companies are profitable, international and diversified in their focus areas. During the dot-com era the top 10 companies were only 34% of GDP, so we’re seeing a massive increase in the impact of these companies on the entire U.S. economy.
Their report also points out the rapid adoption curve of AI compared to prior waves such as the Internet or PCs. They also highlight that valuations are more practical than they were during the ’90s and the dot-com era.

The debate about whether or not AI is a bubble will continue for some time, until the unprecedented growth and adoption either shows it has staying power or doesn’t, and some will say “I told you so!”. One thing is clear: the U.S. economy is reliant on the former, as the latter would be devastating.

AI

OpenAI Introduces Aardvark - An Agentic Security Researcher

Many of us in the community have been very excited about the potential of AI and agents to systemically improve cybersecurity. I recently shared a Foreign Affairs piece from Jen Easterly where she echoed this sentiment and the potential of AI to address longstanding challenges, such as vulnerabilities in open source.

That’s why it was awesome to see OpenAI launch “Aardvark”, which they’ve dubbed an Agentic Security Researcher. It can look for vulnerabilities in source code and even propose fixes at scale. It uses a multi-stage pipeline in which it conducts analysis, scans commits, validates potential vulnerability findings and then integrates with OpenAI Codex to generate patches for the identified and validated vulnerabilities.

From Prompt Injection to Promptware: Evolution of Attacks Against LLM Applications

I came across this talk from Ben Nassi this week, from Zenity’s recent Agentic AI summit, and found it excellent. Ben breaks down the evolution from prompt injection against AI and LLMs to promptware, which is the combination of prompt-style attacks and malware. This is a great conversation, and the rest of the event’s lineup is worth queuing up as well.

AI Agent Security Summit - October 2025

Speaking of Zenity’s summit, the entire playlist is worth checking out, including talks about vulnerabilities in AI agents, the AI Vulnerability Scoring System (AIVSS), agents as insider threats and more. Zenity is a team that has really impressed me with their research, and I recently had their founder on my Resilient Cyber Show.

When AI Agents Go Rogue: Agent Session Smuggling Attack in A2A Systems

I previously covered the introduction of the Agent to Agent (A2A) protocol into the ecosystem earlier this year, riding the wave of excitement around agentic AI, similar to the Model Context Protocol (MCP) before it. Palo Alto’s Unit 42 recently published some interesting research looking at agent session smuggling attacks in A2A systems.

Unit 42 described it as a new attack vector tied to the stateful nature of cross-agent communication, where interactions are remembered to maintain ongoing context. A malicious remote agent misuses an ongoing session to inject additional, hidden instructions between a legitimate client request and the server’s response, which can lead to context poisoning, data exfiltration or unauthorized tool execution on the client agent.

Vibecoding x Cybersecurity: Survival Guide by the Expert Who Fixes Your Code After You

My article “Vibecoding Conundrums” recently got tagged in a piece from Karo (Product with Attitude) and Farida Khalaf, which I found to be a good article on vibe coding securely. They walk through common pitfalls, such as leaking credentials via prompts to third parties, creating overly permissive prototypes, and using unverified dependencies. The blog discusses how to vibe code securely and mitigate risk while still leveraging the benefits of AI coding tools.

A Practical Guide for Securely Using Third-Party MCP Servers

Excitement and discussions around MCP have dominated 2025.
Since the Model Context Protocol (MCP) was introduced by Anthropic, vendors and individuals alike have been making MCP servers available for use everywhere we look.

AppSec

2025 State of Dependency Management Report - AI Coding Agents and Software Supply Chain Risk

Endor Labs just dropped the 2025 State of Dependency Management report, and it’s a real doozy. The team took a comprehensive look at both the state of AppSec and the role of AI in the modern SDLC, along with the rise of MCP and its potential as both an asset and a liability. This included:

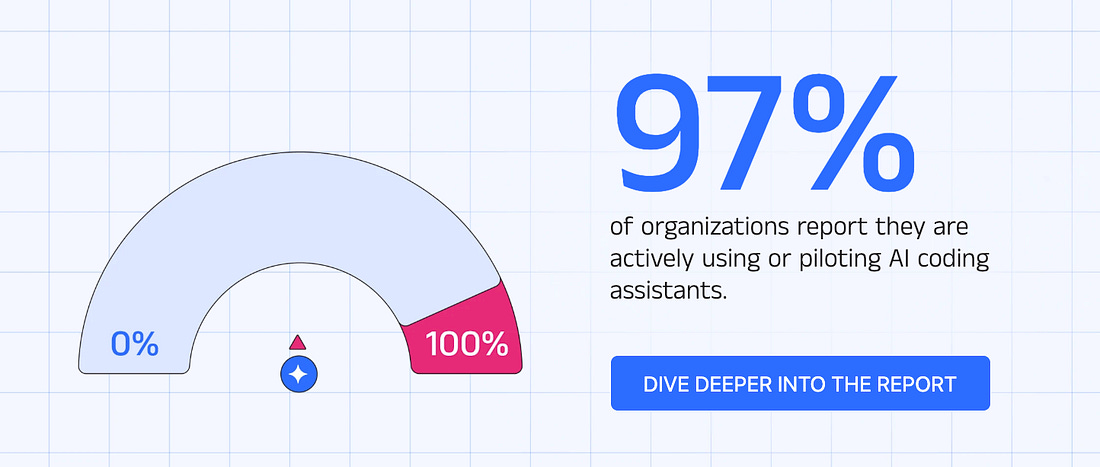

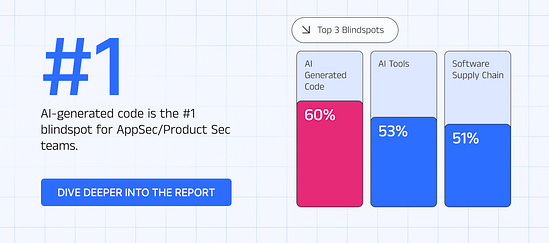

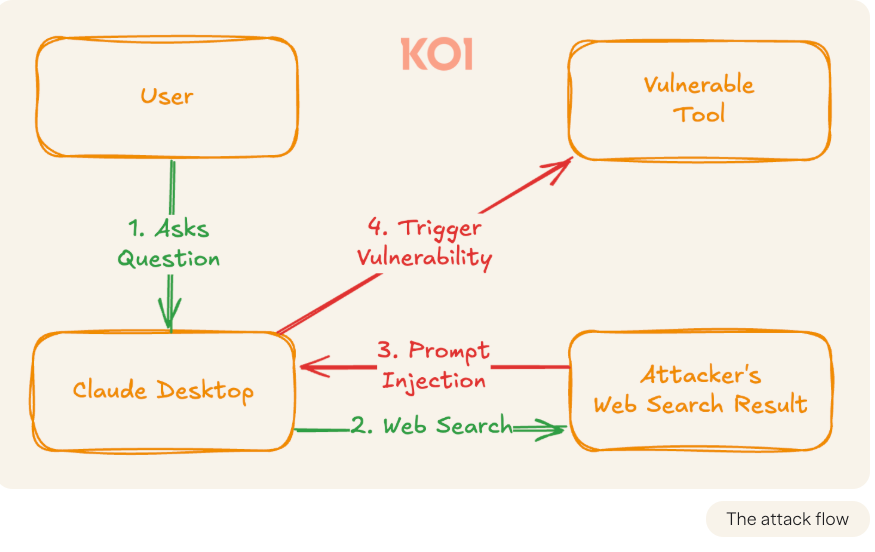

The report is full of excellent insights on how AI is impacting the AppSec ecosystem, and I’ll be publishing a comprehensive deep dive of the report soon, so keep an eye out for that!

2025 SANS CloudSecNext Summit Playlist

SANS recently published their 2025 CloudSecNext Summit playlist, which includes an excellent collection of talks on everything cloud security, from IAM and SecOps to GRC and more. This includes a talk from my friend and former teammate Dakota Riley titled “Compromising Pipelines with Evil Terraform Providers”.

The State of Product Security for the AI Era - 2026

The Cycode team recently published an interesting report with insights from over 400 CISOs and security leaders on the impact of AI, including securing AI-generated code, shadow AI, budgets, productivity and more. They found near-ubiquitous adoption of AI coding assistants. They also found AI-generated code is the #1 blind spot for AppSec and product security teams, followed closely by the use of AI tools and software supply chain risks. AI security is driving budget increases, with 100% of those surveyed stating they expect an increased budget for AI security in 2026.

The report is full of other great insights on AI’s intersection with AppSec, so I recommend giving it a full read, and I will likely be doing a deep dive of the key takeaways here soon!

PromptJacking: The Critical RCEs in Claude Desktop That Turn Questions Into Exploits

I’m beginning to feel like I’m sharing research and findings from the Koi team damn near weekly at this point. That said, they continue to surface critical findings that impact the community and are tied to leading AI coding tools, as well as MCP and developer extensions. The latest example is three official Claude extensions with over 350,000 downloads, all vulnerable to remote code execution (RCE).
The extensions are Chrome, iMessage and Apple Notes. They were published by Anthropic themselves and are available on Claude Desktop’s extension marketplace. All three were rated critical via CVSS and have now been fixed by Anthropic, but the incident nonetheless goes to show that even leading providers can inadvertently expose organizations through vulnerable extensions in AI coding tools. Koi’s blog walks through how compromised or malicious web pages that Claude interacts with while fetching data to respond to prompts could trigger a vulnerability and execute malicious code.

Deep Dive: Cursor Code Injection Runtime Attacks

Continuing the trend of attacks against AI coding agents, the team at Knostic, another AI security firm, provided an excellent deep dive on code injection runtime attacks against Cursor. Cursor of course is an AI coding agent with widespread adoption and use. The attack involves a malicious extension and can lead to a takeover of the IDE and developer workstation. This highlights the continued vulnerabilities and attack vectors of AI coding agents, which can compromise developer endpoints and impact organizations, turning the “productivity” gains of AI coding into threat vectors. Their blog walks through how AI coding agents often have insecure architectures, expand the attack surface and perpetuate old classes of vulnerabilities.
