Software Dependency Dilemmas in the AI Era

- Chris Hughes from Resilient Cyber <resilientcyber@substack.com>

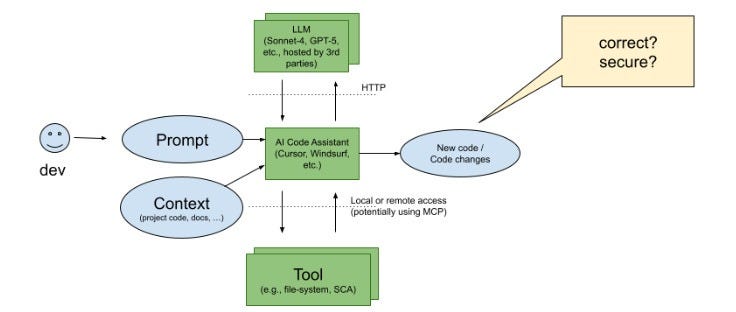

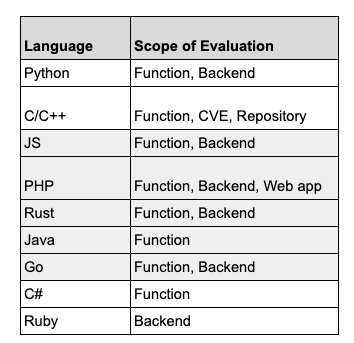

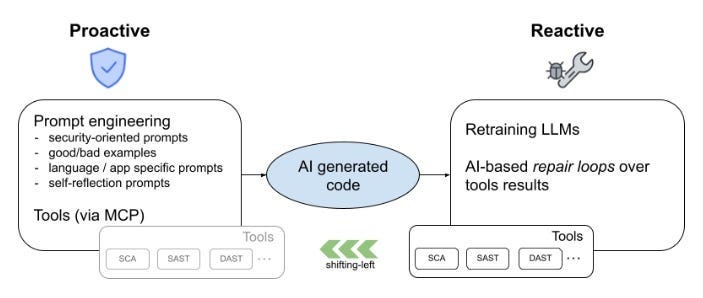

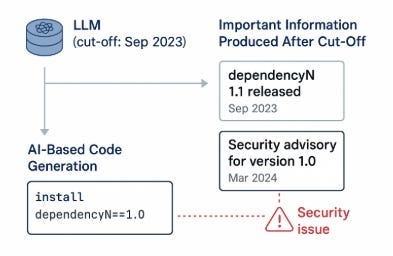

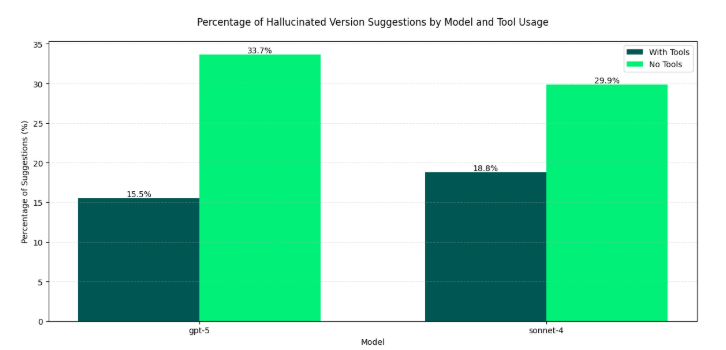

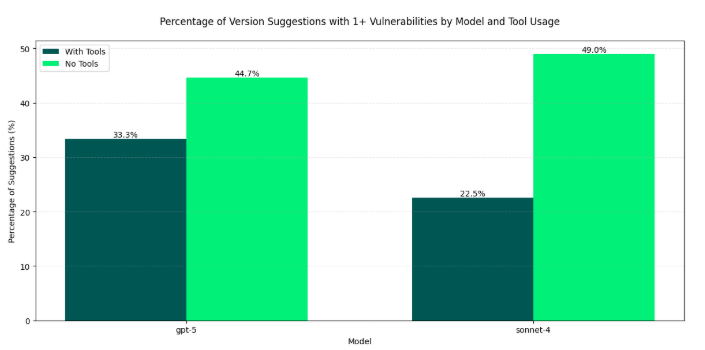

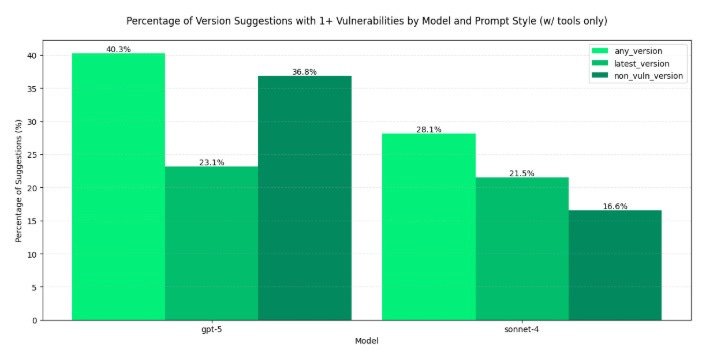

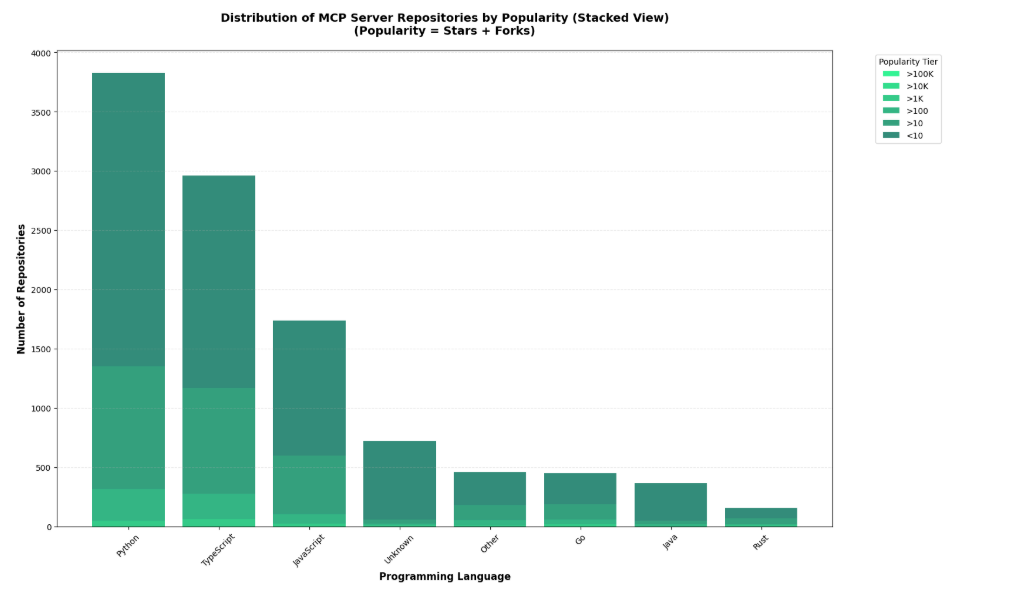

A look at Endor Labs' 2025 State of Dependency Management - AI Code Generation & MCP Servers

For anyone paying attention, 2025 has been a transformative year for software development and the modern SDLC. Organizations have continued rapidly adopting AI coding tools and platforms, and we've seen the introduction of the Model Context Protocol (MCP), which can facilitate agents and autonomous workflows. We've quickly watched the dominant programming language become English, as specification-driven development with prompt-based programming becomes the norm, for better or worse.

This democratization of development has both positive and negative implications: nearly anyone can now develop software with modern tools, but the security and quality of what they produce are often suspect. Cybersecurity and AppSec practitioners have had to step back and rethink what AppSec looks like in an era where soon most of the code being written won't be written by humans, where developers inherently trust generated code, and where IDEs and coding tools can take actions beyond just committing code.

That's why I'm excited to share and dig into Endor Labs' latest 2025 State of Dependency Management report. I've covered the report in prior years, and it is always full of great insights and takeaways regarding the state of AppSec.

The report opens by highlighting the challenges of AI-generated code: studies and research show that AI-generated code is often vulnerable, at rates ranging from 24.5% to 32.8% depending on the programming language. I've shared various reports on this myself, including a recent blog with Endor Labs, where I highlighted that AI-generated code is not only vulnerable from the start but also becomes more vulnerable with each iteration, bizarrely even when security-specific prompting strategies are used.
All of this is further complicated by the Model Context Protocol (MCP), which has enabled agentic architectures and workflows, as well as semi- or fully autonomous activities that access internal and external data stores, systems, and tools. MCP, much like AI itself, is a double-edged sword: it introduces new exposure, but it also creates opportunities to embed security tooling and capabilities organically into development workflows. The Endor Labs State of Dependency Management Report dives into this topic, examining the transformative impact of AI on modern development workflows and methodologies, as well as its broader implications for AppSec and vulnerabilities, including those related to MCP.

So, let's get into it!

The report summarizes its own scope as follows:

"The State of Dependency Management 2025 reveals how AI coding agents and Model Context Protocol (MCP) servers are introducing a new layer of software supply chain risk as AI becomes an integral part of modern software development. As developers increasingly rely on AI tools to accelerate coding, Endor Labs researchers found that these agents not only produce insecure code but also import vulnerable or non-existent open-source dependencies at scale. In this year's edition, to gain a comprehensive overview of the MCP server landscape, we analyzed 10,663 GitHub repositories that implement MCP servers and performed large-scale testing of AI-generated dependency recommendations across major ecosystems, including PyPI, npm, Maven, and NuGet."

How has AI Changed Coding?

The report begins by helping readers understand how AI has transformed modern software development. Industry leaders, including Cursor and Windsurf, have released AI coding assistant platforms that are coupled with LLMs, allowing developers to use plain-language prompts, broader context, and specification-driven development approaches to generate code and applications.
This is summarized in the image below:

While productivity claims have dominated headlines, Endor Labs cites extensive research published throughout the year that raises concerns about productivity, functional correctness, and security, suggesting the hype may not match reality. I've written about this extensively myself in pieces such as "Fast and Flawed" and "Vulnerability Velocity & Exploitation Enigmas", where I discussed the inherent vulnerabilities of AI-generated code and the implications as these tools and processes become codified across the industry.

Endor Labs' State of Dependency Management Report dives incredibly deep, examining over 40 academic and industry reports on AI code generation that focus on security. Those studies introduced 16 different benchmarks, and the team also assessed how extensively the studies covered various programming languages. They found the benchmarks over-prioritize Python while covering other relevant languages, such as JS, PHP, Rust, and Go, less extensively. Endor's research also highlighted that evaluations of AI coding assistants were heavily focused on function-level tasks, as seen below:

How about the (in)security?

Overall, they found that AI-generated code is vulnerable 25-75% of the time, and that the vulnerability rate tends to rise as scenarios and tasks become more complex, emphasizing the importance of well-defined tasks. Surprisingly, not only is AI-generated code better when narrowly scoped and defined, but there also appear to be gaps in backend development compared with front-end development. Relevant studies have found that even the best models reach only 62% correctness, alongside security flaws in most scenarios.

One aspect I found concerning is the team's finding that AI coding tools tend to struggle with authentication and authorization in web apps. You may be thinking, what's the big deal?
The answer becomes clear once you step back and remember that the dominant attack vector year-over-year (YoY) is credential compromise and identity-based attacks. If applications are increasingly coded by AI and reach production with flawed, dysfunctional, and insecure authentication and authorization, that is a recipe for trouble.

These challenges are exacerbated by factors such as high rates of hallucination, vulnerable dependencies, and the implicit trust developers place in AI-generated code, all of which the Endor Labs report backs with supporting research.

One aspect that has been discussed extensively in the AppSec community is garbage in/garbage out: the recognition that leading frontier models are trained on large public datasets, including open-source software. As I have discussed in articles such as "The 2025 Open Source Security Landscape", open source is rife with vulnerabilities, flaws, and inherent risks that make it into training data and are subsequently passed down via AI-generated code. Endor Labs' State of Dependency Management Report found the same, including common flaws such as missing input validation, injection flaws, and dependency bloat, the last of which has been a frequent concern among developers using these tools as well.

Known vulnerabilities are just the start, though. The report also cites research that found a 322% increase in privilege escalation paths, as well as a 153% increase in architectural design flaws. Vulnerability scanning tools may not detect these issues, but they have significant security implications nonetheless.

Last but not least, we see the rise of another "shadow" category of technology, this time in the form of AI. I explained why security tends to perpetuate these patterns in my article, "Bringing Security Out of the Shadows." When it comes to AI, this involves various AI tools, models, and MCP servers, among other forms of technology.
One aspect that makes shadow AI unique, which Endor rightly points out, is the semi- or fully autonomous nature of AI and agents, with attackers now targeting AI coding assistants, and even their toolchains, as part of the attack surface.

What do we do about it?

Endor Labs' report goes on to highlight numerous excellent recommendations, including proactive and reactive techniques, security-centric prompting, and the integration of security tools into agile workflows.

One of the most exciting aspects of AI and agentic AI is the chance to move shift left out of theory, and away from noisy, low-context tools that beat developers over the head with findings, toward security embedded directly in developer workflows. Emerging methods such as MCP servers can position security as a copilot during development, rather than a gate on the path to production.

These opportunities aren't without their own obstacles, though. Vulnerable and even compromised MCP servers are on the rise, and even security-centric prompting isn't foolproof; it is only one layer of the defense-in-depth strategy that agentic AI security requires. For these mitigations to succeed, organizations need to implement the best practices highlighted in the Endor Labs report, including establishing a strong prompt culture, integrating security tools into the earliest stages of the development process (e.g., via MCP), and strengthening code review and testing.

The diagram below, from the Endor Labs report, illustrates how prompts can play a crucial role in integrating company-wide and project-specific context into agents and development workflows, leading to more secure outcomes.

Software Dependency Dilemmas

The attack surface and considerations for the software supply chain have also expanded in the AI era, moving beyond third-party components to include MCP servers, AI models, and other related elements.
As the Endor Labs report notes, this is further complicated by training cut-off dates: models recommend components from before their training cut-off, leading to stale and vulnerable components and dependencies, as demonstrated below:

This increases the risk that AI coding assistants and platforms will perpetuate vulnerable dependencies and supply chain risks, especially when coupled with the implicit trust developers place in them without verifying the outputs. Some have sought to mitigate these risks, while extending the capabilities of LLMs and AI coding tools, by using MCP to obtain real-time data and insights, as well as to enable external tool calls, autonomous workflows, and more.

Endor Labs conducted some fascinating research with one of the leading AI coding platforms, Cursor, testing three different state-of-the-art (SoTA) models both with and without tool use to examine package hallucination rates. Across the leading models, hallucination rates ranged from 15.5% to 33.7%, even when tools supplied external data and context. The team also examined the percentage of Cursor-suggested package versions that contained at least one vulnerability, and the results were similar. They demonstrated that asking for non-vulnerable versions does decrease the risk, but it doesn't eliminate it:

This aligns with various research papers I've shared demonstrating the prevalence of both phantom dependencies (package hallucinations) and vulnerable versions, even when using security-centric prompting. While the figures aren't perfect, they do highlight the value of tool use, which is commonly facilitated via the Model Context Protocol (MCP). The Endor Labs State of Dependency Management Report digs deeply into MCP and its implications for both good and evil. It is one of the most informative resources I've found on the topic yet, which is why we will spend some time discussing its findings below.
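The hallucination and vulnerable-version findings suggest a simple guardrail: vet every AI-suggested dependency before installing it. The following Python sketch is illustrative only and is not Endor Labs' method. It assumes a local snapshot of registry package names (`KNOWN_PACKAGES`) and a small advisory table (`ADVISORIES`); both, along with the `examplelib` package and its version range, are hypothetical stand-ins for a real registry lookup and an SCA or advisory feed. Near-miss names are flagged with `difflib.get_close_matches` as a rough typosquatting heuristic.

```python
"""Minimal sketch: vet AI-suggested Python dependencies before installing them.

Assumptions (hypothetical, for illustration only):
- KNOWN_PACKAGES stands in for a snapshot of registry package names.
- ADVISORIES stands in for data from an SCA tool or advisory feed; the
  'examplelib' package and its vulnerable range are made up.
"""
from difflib import get_close_matches

# Hypothetical registry snapshot (in practice, query the registry or a mirror).
KNOWN_PACKAGES = {"requests", "flask", "numpy", "examplelib"}

# Hypothetical advisories: package -> list of (introduced, fixed) version ranges.
ADVISORIES = {"examplelib": [((1, 0, 0), (1, 4, 2))]}


def parse_version(text: str) -> tuple[int, ...]:
    """Parse a simple dotted version like '1.4.0' (no pre-release handling)."""
    return tuple(int(part) for part in text.split("."))


def vet(requirement: str) -> list[str]:
    """Return human-readable findings for a 'name==version' requirement."""
    name, _, version = requirement.partition("==")
    name = name.strip().lower()
    findings = []
    if name not in KNOWN_PACKAGES:
        # Possible package hallucination (phantom dependency) or typosquat.
        near = get_close_matches(name, sorted(KNOWN_PACKAGES), n=1, cutoff=0.8)
        hint = f" (did you mean '{near[0]}'?)" if near else ""
        findings.append(f"{name}: not found in registry snapshot{hint}")
        return findings
    if not version:
        findings.append(f"{name}: version not pinned")
        return findings
    v = parse_version(version.strip())
    for introduced, fixed in ADVISORIES.get(name, []):
        # A version is affected if introduced <= v < fixed.
        if introduced <= v < fixed:
            findings.append(f"{name}=={version}: matches a known-vulnerable range")
    return findings


if __name__ == "__main__":
    suggestions = ["requests==2.32.3", "reqeusts==2.32.3", "examplelib==1.3.0", "flask"]
    for req in suggestions:
        for finding in vet(req):
            print(finding)
```

In practice, the existence check would query the registry or an internal mirror, and version matching would use a proper version parser (for example, the `packaging` library) rather than this simplified dotted-integer comparison.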
Model Context Protocol (MCP) - Asset or Liability?

If you're following my blog and writing, you've inevitably heard of MCP by now, so I won't spend too much time on the basics. In short, think of it as a protocol that enables AI to interact with systems, data, tools, and more, giving LLMs "arms and legs". If you'd like a deeper breakdown of MCP basics, I recommend reading the full report, which provides an excellent one.

Endor Labs conducted a comprehensive review of the MCP landscape to inform this report, extending beyond excellent resources I have previously shared, such as the "2025 State of MCP Server Security". The team analyzed over 10,000 GitHub repositories that implement MCP servers, leveraging resources such as MCP.so and MCP Market. They found that Python and JavaScript dominate the ecosystem, accounting for 80% of implementations. Additionally, they found that 40% of MCP servers lacked licensing information or had non-permissive licenses, which can impede enterprise adoption.

The findings also have supply chain ramifications: 75% of the indexed MCP servers come from individual users' GitHub repositories. This emphasizes the need to govern MCP integration and consumption, particularly with the rise of malicious activity, such as the discovery of intentionally malicious MCP servers in the wild.

While there may already be tens of thousands of MCP servers available, consumption is more nuanced. GitHub stars, for example, indicate that 66% of MCP servers have fewer than 10 stars and 26% have between 10 and 100, with the count dwindling further to a small number of servers that remain the most popular. Although most MCP server repositories are contributed by individuals, the most popular ones come from organizations, which account for 12 of the top 20 MCP servers.
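Governing MCP consumption can start small: check each declared server against a list your team has reviewed, and require exact version pins so a server cannot silently change underneath you. The following Python sketch assumes the JSON configuration shape several MCP clients use (a top-level `mcpServers` object whose entries give a `command` and `args` for local servers, or a `url` for remote ones); verify your own client's format. The allowlist contents, package names, and hosts are hypothetical, and the rule that the first non-flag `npx` argument is the package spec is a simplifying assumption.

```python
"""Minimal sketch: check MCP server declarations against a reviewed allowlist.

Assumptions (hypothetical, for illustration only):
- Config follows an 'mcpServers' JSON shape with 'command'/'args' or 'url'.
- APPROVED maps npm package names to the exact reviewed version; names,
  versions, and hosts below are made up.
"""
import json
from urllib.parse import urlparse

APPROVED = {"@example-org/mcp-server-docs": "1.2.0"}
APPROVED_REMOTE_HOSTS = {"mcp.example.com"}


def split_npm_spec(spec: str) -> tuple[str, str]:
    """Split 'pkg@1.0.0' or '@scope/pkg@1.0.0' into (name, version)."""
    name, _, version = spec.rpartition("@")
    if not name:  # no version given, e.g. 'pkg' or '@scope/pkg'
        return spec, ""
    return name, version


def review(config_text: str) -> list[str]:
    """Return policy findings for every server declared in the config."""
    findings = []
    servers = json.loads(config_text).get("mcpServers", {})
    for server_id, decl in servers.items():
        if "url" in decl:
            # Remote server: only reviewed hosts are allowed.
            host = urlparse(decl["url"]).hostname
            if host not in APPROVED_REMOTE_HOSTS:
                findings.append(f"{server_id}: remote host {host!r} not approved")
            continue
        if decl.get("command") != "npx":
            findings.append(f"{server_id}: unrecognized launcher, review manually")
            continue
        # Assumption: the first non-flag argument is the package spec.
        spec = next((a for a in decl.get("args", []) if not a.startswith("-")), "")
        name, version = split_npm_spec(spec)
        if name not in APPROVED:
            findings.append(f"{server_id}: package {name!r} not on approved list")
        elif version != APPROVED[name]:
            findings.append(f"{server_id}: {name} must be pinned to {APPROVED[name]}")
    return findings


if __name__ == "__main__":
    config = """{"mcpServers": {
      "docs": {"command": "npx", "args": ["-y", "@example-org/mcp-server-docs@1.2.0"]},
      "random": {"command": "npx", "args": ["-y", "mcp-server-random"]},
      "remote": {"url": "https://mcp.untrusted.example/sse"}
    }}"""
    for finding in review(config):
        print(finding)
```

Pinning alone does not make a server safe; it simply ensures that whatever was reviewed is what actually runs, which pairs naturally with the runtime evaluation and privilege limits discussed in the report.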
The top MCP servers include implementations from major names such as GitHub, Microsoft, and Figma, as well as popular tools such as Code2Prompt and Inbox Zero, among others.

MCP Security Landscape

We've used the report to discuss the prevalence of MCP server repositories and their adoption, but what are the security implications? This is where the Endor Labs report offers additional valuable insights. The Endor Labs report breaks down MCP servers into three components:

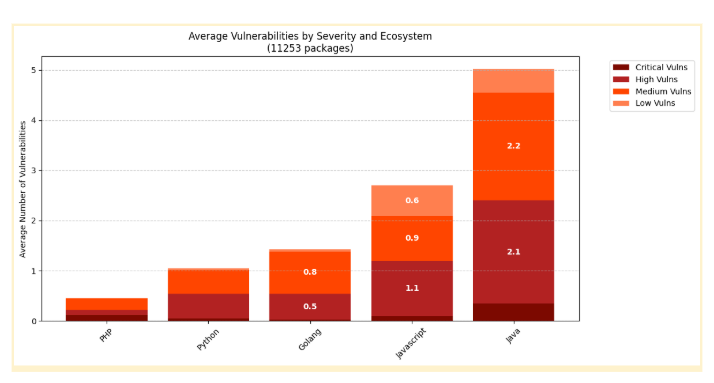

The report highlights that MCP alone doesn't create new classes of attacks; rather, it amplifies existing ones, such as supply chain compromise, API abuse, and prompt manipulation, by consolidating them into a unified layer. The report outlines these threats and risks, along with industry-leading resources such as the OWASP Top 10, which is currently an incubator project. They include typosquatting, remote third-party service compromise, prompt manipulation, and data exfiltration through tools, among others. Endor Labs recommends key activities, including package management, enforcing prompt integrity, and limiting privileges, as well as leveraging tools such as MCP Inspector to evaluate MCP servers for potentially malicious runtime behavior.

However, just as with open source more broadly, we're seeing widespread adoption and consumption of MCP servers with little to no security rigor. The State of Dependency Management report highlights various security findings from the team's scans of MCP servers, including first-party vulnerabilities, dependencies, and risks in the SDKs and MCP servers themselves. These include widespread use of sensitive APIs prone to weaknesses such as Path Traversal, Code Injection, and Command Injection. The team also identified bloated dependencies, especially in JavaScript, which, as I mentioned above, is one of the dominant languages for MCP server implementations, and found higher vulnerability counts where dependency counts are higher, as seen below:

Closing Thoughts

Endor Labs' 2025 State of Dependency Management Report sheds light on the dichotomy AI presents in cybersecurity: on one hand, it proliferates vulnerable code and opens new attack vectors via MCP; on the other, it truly enables security to shift left into development workflows and IDEs via MCP.
While AI can enhance longstanding AppSec tools such as SAST by reducing false positives, it can also accelerate development and expand the attack surface, especially without security-centric prompting and other necessary guardrails. This report lays the groundwork for the secure adoption of agentic coding and AI-driven development by highlighting key risks and mitigations that organizations and AppSec practitioners should be aware of. Be sure to check out the full report for more!
