🔮 Exponential View #545: Rethinking scale; GPUs are the new turbines; stuck graduates, robots & AI companions++

A weekly briefing on AI and exponential technologies

Good morning from London!

Software demands a new world

Silicon Valley spent decades abstracting away from physical reality. But AI is so computationally intensive that it’s dragging tech back into the world of concrete and copper wire. Software is no longer eating the world; it’s demanding a new one. Banks and other forecasters estimate that $4-5 trillion of construction and power is needed to run AI. Meta, by its own admission, wants to build a data center the size of Manhattan. This is all demanding massive changes to our energy system.

Microsoft foresees a consistent data center crunch through 2026. As Kevin Scott said:

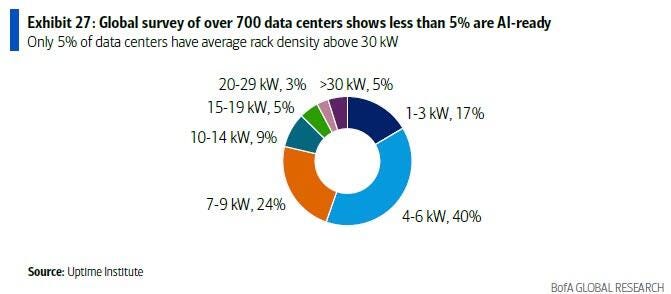

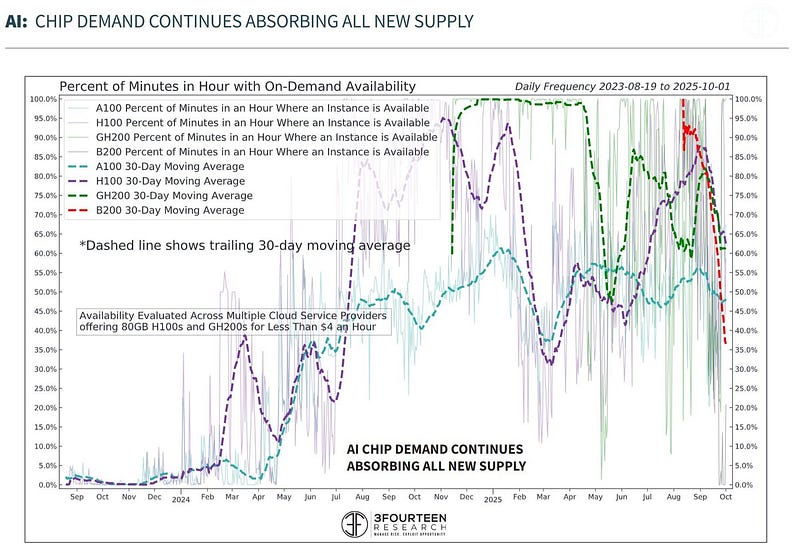

But as many as 95% of data centers built for cloud computing may struggle to meet the power demands of new-generation AI chips, according to BofA. A single AI rack will draw up to 600 kW by 2027 (up from ~50-120 kW per rack today).

This boom is creating new scarcities. Electricity now costs 267% more than it did five years ago in areas near major data centers in the US, fueling backlash as residents subsidize corporate power demand. Another is close-to-obsolete GPUs, which remain in high demand. Take Nvidia’s A100: five years after it first launched, and even after its successor has begun shipping, it is harder to get today than it was in 2020. Prices are rising and depreciation schedules are extending from three years to six. New supply is being snapped up.
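For a sense of scale, here is a back-of-the-envelope sketch in Python using only the figures quoted above. The function names are my own, and the straight-line depreciation method is an assumption for illustration, not something the sources specify.

```python
# Figures quoted in the text above.
RACK_KW_TODAY = (50, 120)  # approximate per-rack draw today, in kW
RACK_KW_2027 = 600         # projected upper bound per AI rack by 2027, in kW


def power_multiple(future_kw: float, today_kw: float) -> float:
    """How many times more power a future rack draws than a rack today."""
    return future_kw / today_kw


def annual_depreciation_share(years: int) -> float:
    """Share of the purchase price expensed each year.

    Assumes straight-line depreciation (an illustrative assumption).
    """
    return 1 / years


low_kw, high_kw = RACK_KW_TODAY
print(
    f"Rack power multiple: {power_multiple(RACK_KW_2027, high_kw):.0f}x "
    f"to {power_multiple(RACK_KW_2027, low_kw):.0f}x"
)
for years in (3, 6):
    print(f"{years}-year schedule: {annual_depreciation_share(years):.1%} of cost per year")
```

On these numbers, a 2027 rack draws roughly 5 to 12 times what a rack draws today, and moving from a three-year to a six-year schedule halves the annual depreciation charge on the same GPU.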

As the bottlenecks shift onto the physical infrastructure, it’s no wonder that companies building this new world are doing well. Shares in nuclear power company Constellation Energy are up almost 5x since ChatGPT launched, outperforming even Microsoft. Abundance needs to feed abundance though, and we’ll need new solutions. On the Open Circuit podcast this week, I argued that communities around data centers need a fair way to participate in the build‑out. Data centers need to be better grid citizens, perhaps by providing grid services – offering demand response and stability and paying when they strain the system. Others, like electrification nonprofit Rewiring America, suggest that hyperscalers co-fund a mass deployment of heat pumps, rooftop solar, batteries, weatherization and other technologies to cut residential peak load in data center alleys. Done at scale through 2029, these measures could offset the AI-driven load growth and lower household bills.

Recursive intelligence

After a spree of scaling, the frontier may be curving back toward elegance. Samsung’s 7-million-parameter tiny recursive model (TRM) scores well on two important benchmarks: 45% on ARC-AGI-1 and 8% on ARC-AGI-2¹. The TRM edges past much larger reasoning LLMs, including DeepSeek R1, o3-mini and Gemini 2.5 Pro, with less than one-ten-thousandth of their parameters. The trick is thinking in loops: the model recursively critiques and revises its candidate answer until it improves.
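To make “thinking in loops” concrete, here is a minimal Python sketch of a critique-and-revise loop. It is a toy illustration and not Samsung’s TRM: the names `refine`, `score` and `revise` are hypothetical, and the task (approximating the square root of 2 with Newton steps) merely stands in for a reasoning puzzle.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


def refine(
    answer: T,
    score: Callable[[T], float],
    revise: Callable[[T], T],
    max_steps: int = 16,
) -> T:
    """Revise a candidate answer in a loop, keeping a revision only if it scores better."""
    best, best_score = answer, score(answer)
    for _ in range(max_steps):
        candidate = revise(best)
        candidate_score = score(candidate)
        if candidate_score <= best_score:
            break  # the revision did not improve the answer, so stop looping
        best, best_score = candidate, candidate_score
    return best


# Toy stand-in for a puzzle: find x such that x * x == TARGET.
TARGET = 2.0


def score(x: float) -> float:
    """Critique step: a smaller squared-error gap gives a higher score."""
    return -abs(x * x - TARGET)


def revise(x: float) -> float:
    """Revision step: one Newton update toward the square root of TARGET."""
    return 0.5 * (x + TARGET / x)


print(refine(1.0, score, revise))
```

The point of the sketch is that the extra work happens at inference: the same small `revise` step is reused many times, rather than a larger model answering in one pass.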

This matters because ARC-AGI is designed to be easy for humans, hard for AIs. Most frontier models score in the low single digits on ARC-AGI-2 even with “thinking” modes enabled. Samsung’s tiny recursive network roughly doubles that mark. Still far from human level, but a striking efficiency frontier. The broader pattern is now visible: compute spent at inference (“test-time compute”) often beats raw parameter count. If these gains migrate from puzzles to real-world tasks, they’ll create a new family of small, recursive, edge-capable systems that run locally rather than in distant data centers. This could reshape deployment economics, especially where energy and memory are scarce.

Scale isn’t finished. Nor would this suppress compute demand or slow adoption. Data centers will remain supply-constrained, particularly around memory, while hundreds of millions of modern phones and edge devices can now host TRMs to open new deployment paths. For a certain class of tasks, inference could be distributed to billions of devices.

An update on graduate jobs and AI

Grads are having a terrible time getting jobs. The unemployment rate for US grads has averaged 6.6% over the past year (the national average is 4%). Many blame AI, but my working assumption is different. Labor markets are complex and slow to change. They tightened after Covid, and now, amid genuine economic uncertainty, firms are focused on cutting costs. It’s simpler to pause hiring than to fire, and easiest of all to skip inexperienced candidates. If you’re running a company in this environment, you can spin a hiring slowdown as progress by pointing to AI wins.

Two data points I came across this week back this up. When the FT compared young graduates’ unemployment with that of other first-time entrants into the job market, regardless of age, they found that “those without a degree are actually having a much harder time of it.” The slowdown is about (in)experience rather than age. The latest report on AI and the job market from Yale’s Budget Lab further shows no macro evidence that AI has disrupted employment levels, unemployment composition, or job transitions since ChatGPT was released in 2022. For now, effects on the job market are coming from elsewhere, not AI.

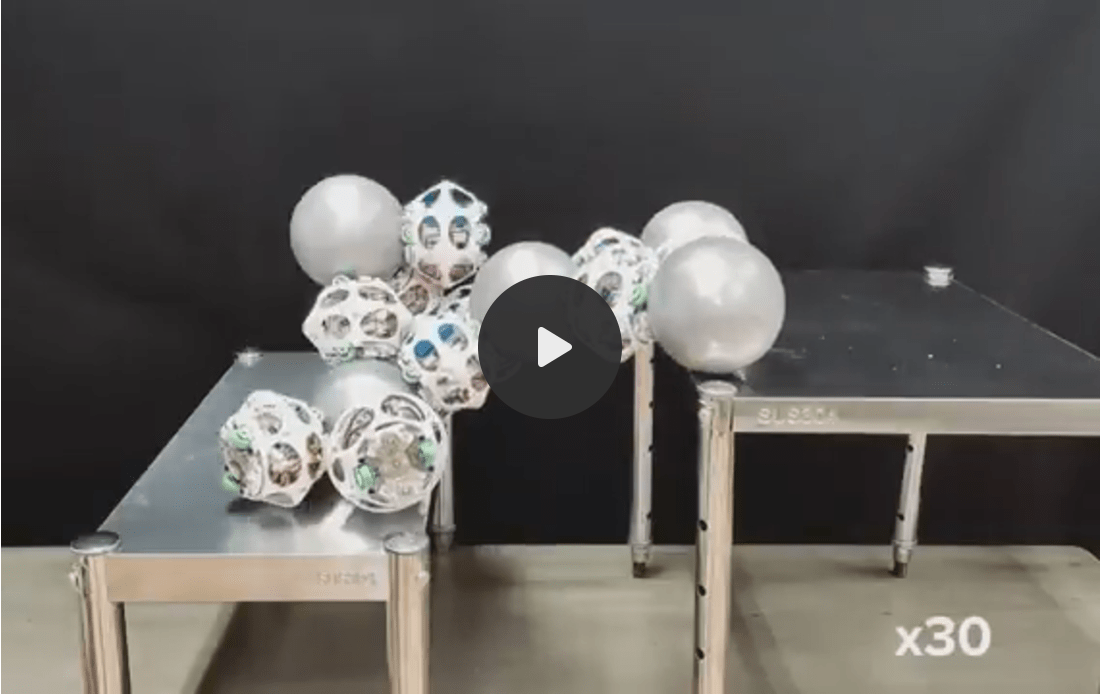

Robots in the storm… and in the kitchen

Figure unveiled its third-generation humanoid this week, the first it claims can be manufactured at scale for real homes. Nearly every component was re-engineered for mass production, and the company says it has rebuilt its supply chain with the goal of manufacturing up to 100,000 humanoid units in the next four years. The focus is no longer on proving a robot works, but on proving it scales.

Meanwhile, China’s DEEP Robotics introduced an all-weather humanoid built for outdoor industrial use, rated to operate from –20°C to 55°C in real-world conditions. Last week, we wrote about the robotics flywheels and how China built a robotics ecosystem over the past decade that the West may have a hard time matching.

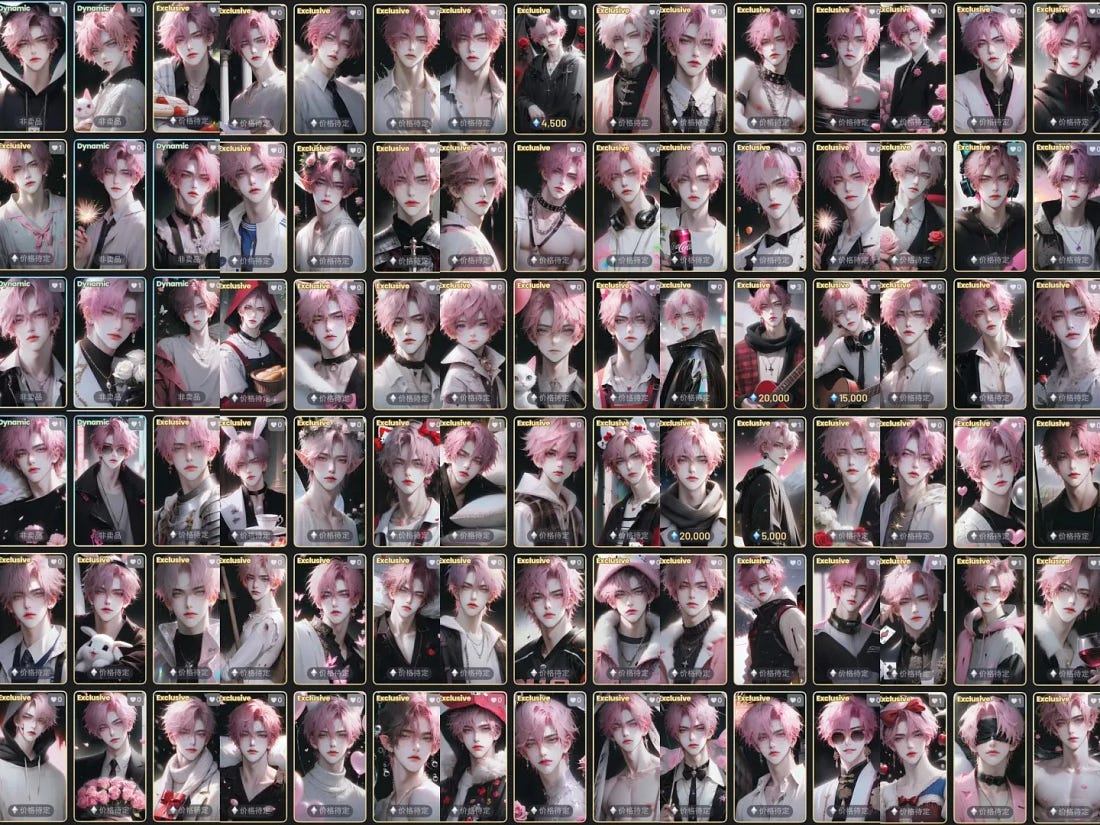

Elsewhere

Across AI, technology, science and culture…

¹ ARC-AGI-1 and ARC-AGI-2 are subsets of the Abstraction and Reasoning Corpus (ARC) benchmark designed to test whether models can infer abstract rules and generalize from very few examples – tasks that humans typically solve through pattern reasoning rather than memorization.
