State of AI in SecOps - 2025

- Chris Hughes from Resilient Cyber <resilientcyber@substack.com>

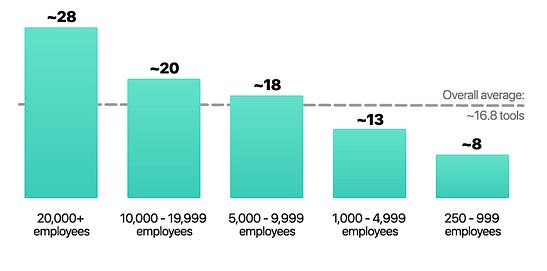

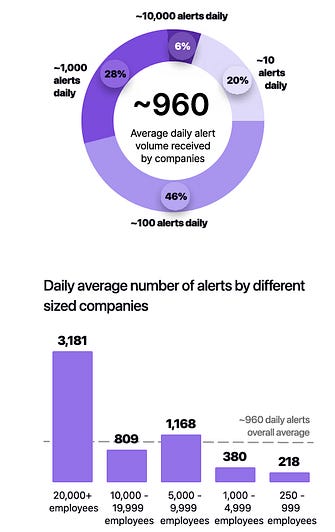

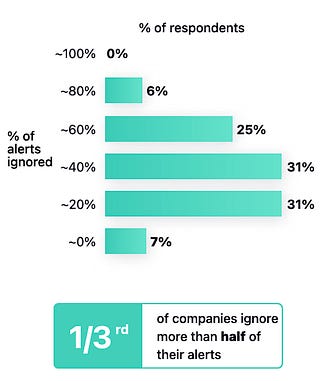

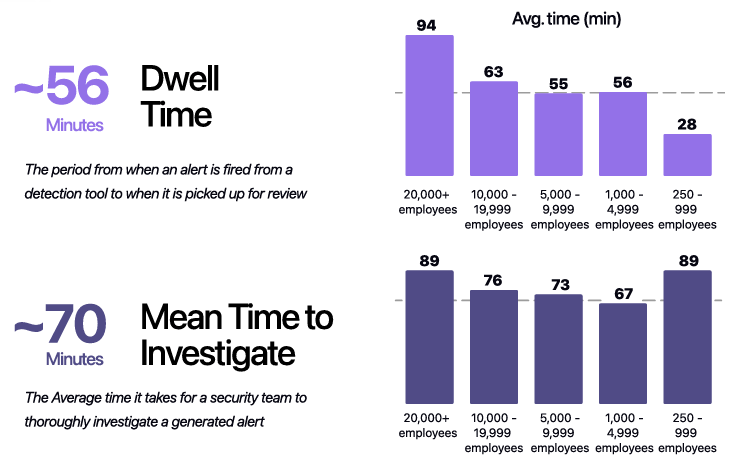

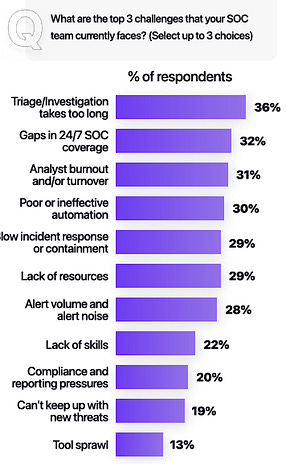

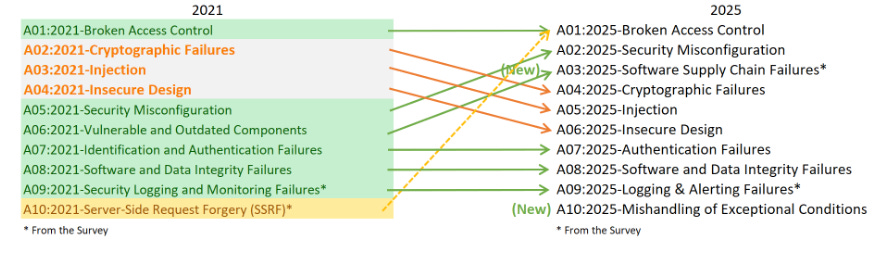

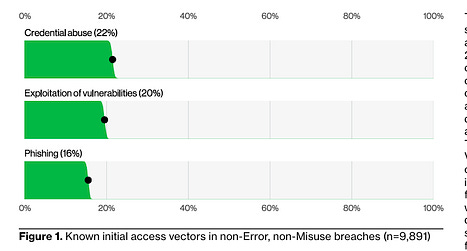

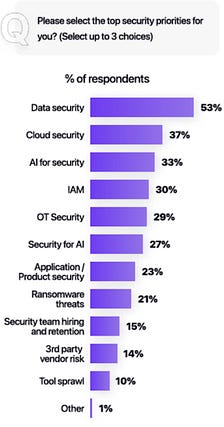

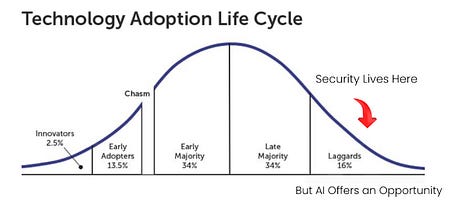

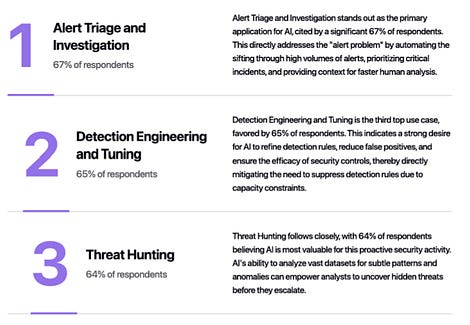

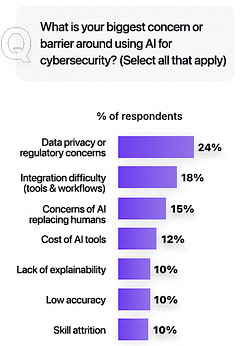

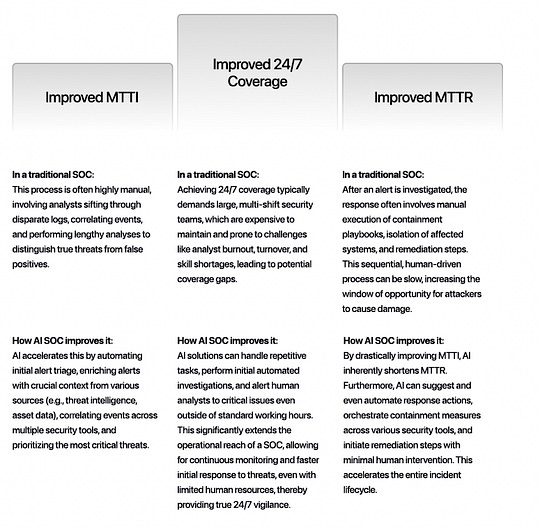

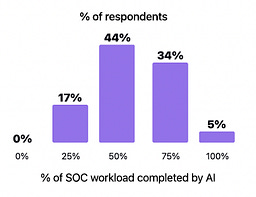

I’ve written extensively about the excitement and potential of AI across various cybersecurity categories, including GRC, AppSec, and others. However, no cyber category has seen as much investment or attention when it comes to AI as Security Operations (SecOps).

That is why I wanted to check out AI SecOps startup Prophet Security’s “State of AI in Security Operations” 2025 report. It brings real-world insights from nearly 300 security leaders, looking at the current state of SecOps when it comes to alerts, investigations, incidents, and more, as well as the future of an AI-driven SecOps paradigm: where and how security leaders are making changes, and what the future of the SOC may look like.

Interested in sponsoring an issue of Resilient Cyber? Sponsorship reaches over 40,000 subscribers, ranging from developers, engineers, and architects to CISOs, security leaders, and business executives.

Bottom Line Up Front (BLUF)

Before we dive into the specific findings, I would like to highlight the key points the report emphasizes.

Key themes, which we will discuss in more detail below, include teams being overwhelmed with alerts and noise, leading them to ignore notifications, suppress detections, and potentially miss critical incidents. Meanwhile, security leaders are very optimistic about the future of AI and cybersecurity, including increasing key investments, using AI for alert triage, and anticipating that over half of SOC workloads will be completed by AI in just 36 months.

Drowning in Noise

One common theme throughout the Prophet report is what they refer to as “the alert problem,” which is tied to a drain on SOC resources, most notably our teams, with implications for increased organizational risk. The report highlights that the larger an organization is, the more alert-generating tools it has, with the average organization having over 20 alert-generating tools.
This, to me, reads like a downstream impact of the overarching security tool sprawl problem, with organizations reporting having several hundred security tools. That sprawl leads to various issues: struggling to justify return on security investment (ROSI), an expanded attack surface as tools get exploited, shelfware as tools are never truly implemented and optimized, and, as we see here, staff fatigue as analysts drown in alerts and struggle to determine what to focus on and what matters to the organization from a risk perspective.

As you can expect, when you have tens of tools generating alerts, you end up with a large number of alerts, with the daily average number of alerts received by companies exceeding 1,000. Prophet’s report cites other similar reports and surveys, such as the SANS SOC 2021-2025 survey, which showed that alert volumes continue to grow year-over-year (YoY), while teams are being asked to do more with less, cut costs, and work with stagnant headcounts and budgets.

When teams are faced with this much noise, they act rationally based on real-world constraints. This includes implicitly accepting risk by not investigating all alerts, due to a lack of bandwidth and capacity on a given day. Prophet’s report found that, on average, 40% of alerts are never investigated, and this rate is higher in some organizations.

And what does ignoring alerts lead to? You guessed it, potential incidents, with the report stating that roughly 60% of security teams have experienced incidents tied to ignored alerts that turned out to be critical.

Capability Mismatch

Much like AppSec, where there are more new vulnerabilities per month than organizations have the capacity to remediate, or where it takes attackers less time to develop exploits than it takes defenders to apply patches, a similar capability mismatch exists in SecOps as well.
For example, Prophet’s report found that it typically takes roughly 56 minutes for alerts to be acknowledged by internal SecOps teams and roughly 70 minutes to investigate them. Contrast that with reports such as CrowdStrike’s 2025 Global Threat Report, where attackers carry out common attacks, such as phishing, in shorter times, or with the use of AI, where researchers have demonstrated creating vulnerability exploits within 15 minutes of vulnerability disclosure, and you have a recipe for disaster.

Prophet’s report highlights how this extended dwell time and higher mean time to investigate (MTTI) have consequences for organizations, as attackers can potentially exploit vulnerabilities, move laterally, exfiltrate sensitive data, and more, all before defenders have had a chance to investigate any associated alerts.

Upstream Causes

Digging deeper into the metrics above, namely the lengthy investigation timelines and the alerts being outright ignored, Prophet’s report sheds light on some of the root causes. Prophet asked those surveyed to name the top 3 challenges for their SOC team.

Key themes are evident, such as long timelines due to a lack of effective automation, teams being overwhelmed with the volume of tools, alerts, and threats, as well as staff being outright burned out, which is a well-documented issue associated with the SOC.

Leading the pack of issues cited are investigations that take too long, which is understandable given that analysts need to stitch together insights across complicated digital environments, coupled with threat and vulnerability information, to produce actionable insights. Following close behind is the practical reality that many teams don’t work 24/7, forcing them to catch up on alerts and notifications the next workday, or feel compelled to stay later to make sense of them, all of which leads to the third-ranked issue: analysts getting burned out.
Teams go on to intentionally suppress detection rules in an effort to keep pace. In practice, this involves deliberately disabling or not activating specific detection rules and notifications, based on the understanding that the team doesn’t have the time or capacity to review them all, even if they are potentially legitimate. Prophet rightly points out the dangers here, which include teams thinking they’re more secure than they are, due to a lack of alerting, or flying blind to real risks that they’ve now suppressed visibility into.

This is where things get really interesting for me, though, when it comes to the report. The two leading detection gaps for companies are Cloud and Identity.

You may be saying yeah, and? The “and” is that both of these are crucial leading risks and attack vectors that are routinely highlighted by industry-leading resources and reports. For example, OWASP has just released its 2025 OWASP Top 10, its first significant update since 2021. “Security Misconfiguration” jumped from 5th to 2nd, and upon closer examination, OWASP emphasizes that this is primarily due to cloud infrastructure, including secret leaks and misconfigured cloud environments.

How about identity? First, as I mentioned above, secret leakage is a critical issue across the industry, including credential exposure. Second, leading reports such as Verizon’s DBIR still list “Credential Abuse” (i.e., identity) as the leading initial attack vector in the industry.

Prophet’s report highlights the uncomfortable reality that 57% of organizations are suppressing detections precisely where they are needed most, in cloud and identity, due to alert fatigue, a lack of capacity, and staff overload.

Another aspect of Prophet’s report that really should resonate with all security practitioners and leaders is the economic impact of these suppression behaviors.
The Prophet report points out that organizations continue to invest in tooling but run it at reduced capacity, failing to take advantage of its features, capabilities, and value, which means both budgetary waste and inefficient risk reduction. In an era when organizations commonly run hundreds of security tools, struggle with security tool sprawl, and face challenges justifying ROI, this doesn’t bode well for security leaders allocating budgets.

So what do security leaders think?

So far, we have used the report’s insights to talk about the state of the SOC and deficiencies in the ecosystem, but how do security leaders envision the future of the SOC’s intersection with AI?

I was incredibly relieved to see AI for security ranked as a top 3 priority for security leaders, which applies to AI for the SOC, as well as numerous other categories of use cases being explored by startups, innovators, and practitioners across the ecosystem, such as AppSec, Vulnerability Management, and GRC.

In my prior article “Security’s AI-Driven Dilemma”, I pointed out how, historically, cybersecurity has been a late adopter and laggard in the technology adoption lifecycle. However, we seem to be learning our lesson and flipping that paradigm, looking to be an early adopter and innovator with AI for security, while still acknowledging that we must secure AI as well.

When the survey dug deeper to identify the primary use cases that security leaders are looking to leverage AI for in the SOC, the three dominant use cases were Alert Triage and Investigation, Detection and Tuning, and Threat Hunting. This makes sense given that some of the most problematic activities for SOCs include having the bandwidth to triage and investigate their volume of alerts, optimizing detections, which, as we mentioned above, are often being suppressed due to a lack of capacity, and proactively threat hunting, moving security from reactive to proactive.
These areas align with where organizations that are using AI in the SOC are actively applying it too, with the survey finding 55% of them are “using AI in some capacity to triage, investigate, or remediate alerts”. Even among those who aren’t currently using AI SOC capabilities, 45% of survey respondents have an appetite for adoption in the coming year. As the survey points out, we are past the point of “if” organizations intend to adopt AI SOC capabilities, and it is now just a matter of when and how.

One of the key factors potentially delaying the adoption of AI for security is data privacy and regulatory concerns, which are understandable, as this space is still evolving. We also have complex and divergent regulatory approaches across major nations and regions, such as the U.S. and the EU.

We discussed ROI earlier in the context of security tools, and the need to measure the ROI of AI SOC tools is no different. Prophet’s survey asked how organizations measure the ROI and/or effectiveness of AI-driven SOC tools. As anticipated, leaders rallied around standard SecOps metrics, such as mean time to investigate (MTTI), mean time to recover (MTTR), and overall improved coverage (e.g., 24/7).

The report also highlights how AI SOC helps improve these metrics compared to the traditional SOC. Teams are reporting significantly improved performance in key metrics, such as MTTI of alerts and investigation completion, compared to their traditional SOC capabilities.

One of the most surprising findings (for me) was that an astounding 83% of security leaders believe that over 50% of the SOC workload will be completed by AI within the next three years. This demonstrates both how quickly the technology is evolving from a capability perspective and how optimistic security leaders are about its application.
The report also asked what security leaders consider when evaluating AI SOC solutions, and key themes emerged, including Coverage, Accuracy, Quality, Workflow Integration, Time-to-Value, and Data Privacy and Security.

Closing Thoughts

Prophet’s 2025 “State of AI in Security Operations” provided a comprehensive look at one of the most dominant and hopeful applications of AI for security in the industry. This is an area of cybersecurity that has seen not only a ton of optimism but also significant investment and exploration, which makes sense given that SecOps is one of the most problematic and challenging areas of cybersecurity, from both a technical and a workforce perspective, all of which manifests as a decreased capability to detect and respond to risks for organizations.

Be sure to check out the full State of AI in Security Operations Report for the full analysis!
