The Evolution of AppSec - From Shifting Left to Rallying on Runtime

- Chris Hughes from Resilient Cyber <resilientcyber@substack.com>


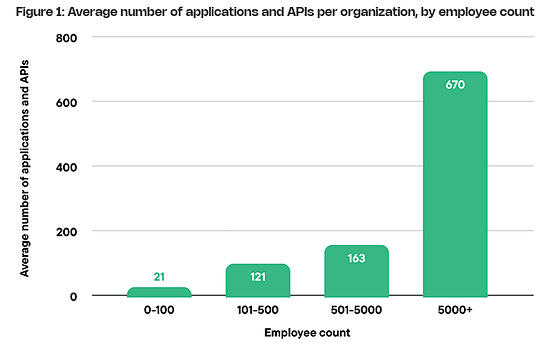

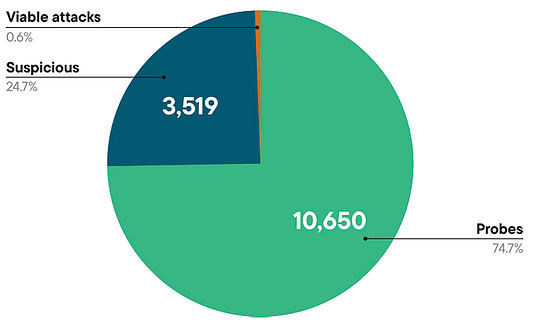

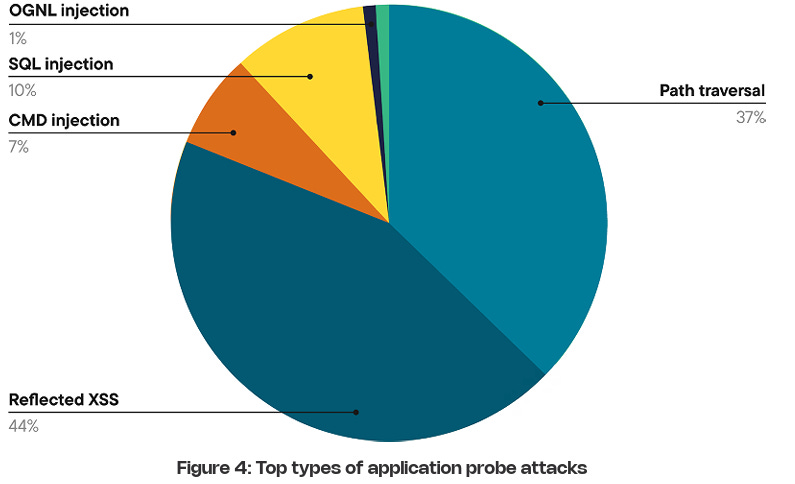

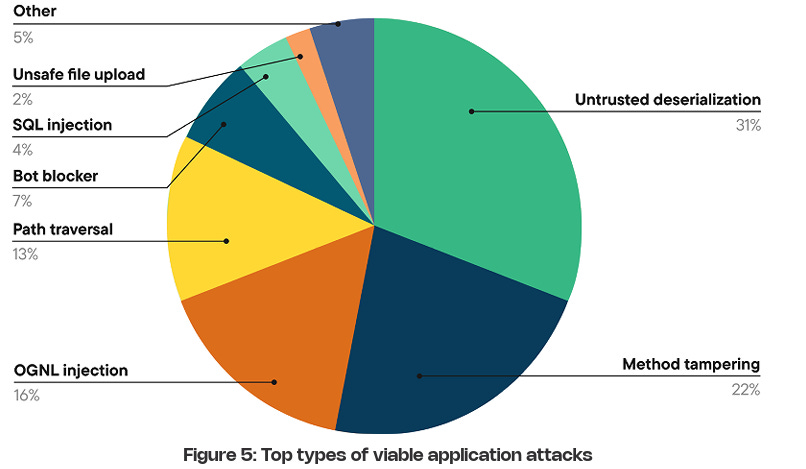

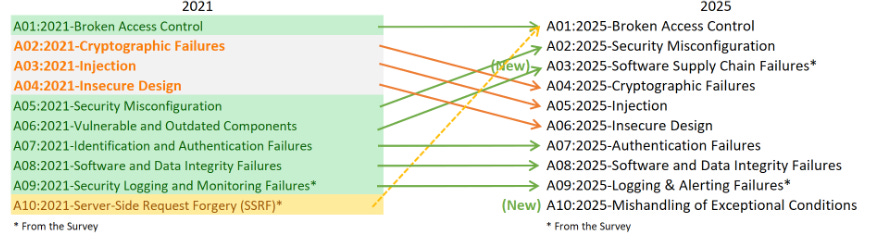

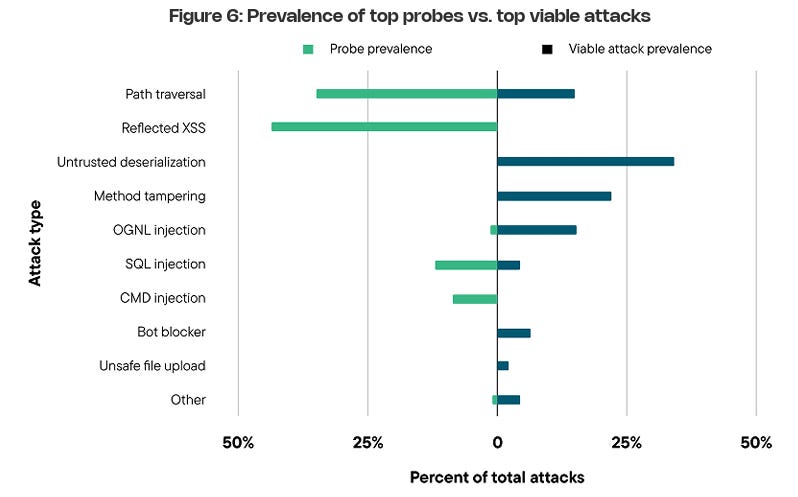

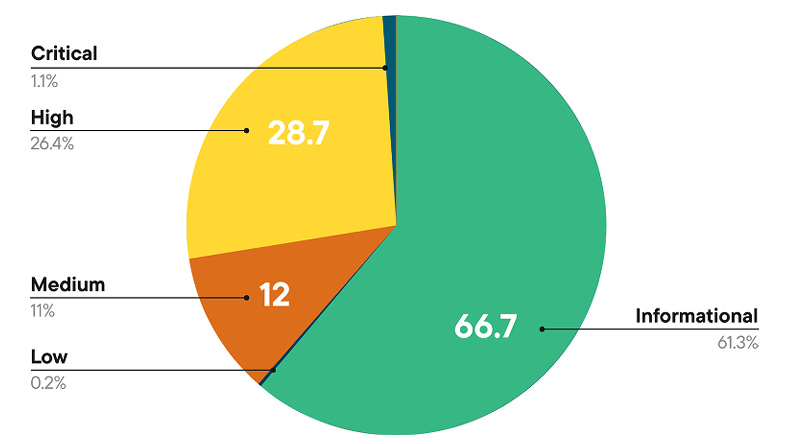

If you’ve been around AppSec for any length of time, you’ve inevitably heard phrases such as “shift left”, which dominated the security industry’s dialogue and became a catchphrase for everyone from vendors to nation-state cybersecurity leaders. The theory was that we needed to “bake” security into the software development lifecycle (SDLC) because the sooner bugs are caught, the cheaper they are to fix. These theories have merit, but the way the industry implemented “shift left” and “DevSecOps” involved some major missteps: noisy tooling, findings that lacked context, and security tools that overwhelmed developers with lengthy lists of “findings” whose risk is debatable.

I previously discussed some of these challenges in an article titled “Shift Left is Starting to Rust”, and organizations such as Gartner have since come around to this point of view, which is now widely shared among AppSec leaders. As phrases such as “shift left” lost their luster, industry leaders and practitioners began to focus on the real threats in production environments, recognizing that not everything can be caught before deployment and that attackers ultimately target what’s running in production.

This has led to the rise of a new category of AppSec tooling, often referred to as “Application Detection and Response (ADR).” If you’re unfamiliar with ADR, I have written about it previously in articles such as “How ADR Addresses Gaps in the Detection & Response Landscape.” In that article, I discussed how traditional D&R tooling was overly endpoint-centric and failed to account for the application layer, even as reports such as Verizon’s DBIR and Mandiant’s M-Trends showed attackers increasingly focusing on application-level exploits as a primary attack vector. Organizations struggled due to legacy detection and response tooling and visibility gaps in functions such as their Security Operations Center (SOC).

With that context out of the way, I want to dive into the topic further, looking at research and insights from longtime AppSec industry leader Contrast Security, including its recent “Software Under Siege” report, as well as gaps in traditional runtime AppSec tools such as Web Application Firewalls (WAFs), which some still seem to conflate with ADR. Building on its deep expertise in the AppSec space, Contrast introduced ADR to help identify and block attacks, including zero-day exploits, against production applications. Its latest report sheds light on the threat landscape facing applications and why ADR tooling is necessary. The report examines key topics, including attack volume, attack breakdowns, and the continued speed asymmetry between attackers and defenders, which necessitates a more proactive approach to application security (AppSec). Let’s take a look at some of those findings and explore why they matter.

Resilient Cyber w/ Jeff & Naomi - The AI-Driven Shift to Runtime AppSec

In this episode of Resilient Cyber, I sit down with longtime AppSec industry leader and Founder/CTO of Contrast Security, Jeff Williams, along with Contrast Security’s Sr. Director of Product Security, Naomi Buckwalter, to discuss all things Application Detection & Response (ADR), as well as the implications of AI-driven development.

Software Under Siege

As the report highlights from the outset, defenders face a multifaceted dilemma: increased attack activity, along with a rapidly growing vulnerability backlog they’re unable to keep pace with. Each year the number of CVEs grows, reaching roughly 131 per day in 2025, or almost 1,000 per week, while environments grow more complex, with added potential for problems such as misconfigurations. At the same time, attackers are becoming more organized, efficient, and even autonomous as they leverage AI to maximize economies of scale in exploitation.

Due to its unique role in the industry and its AppSec-centric platform, Contrast has gained real-world insight into attack techniques and how they unfold within applications and APIs. It uses a lightweight sensor to gain complete visibility into the runtime context of applications, providing insight into activities such as control and data flow, backend interactions, and external service calls. To give an idea of the scale behind the report, it draws on thousands of live applications and more than 1.6 trillion security-critical observations daily.

I mentioned above how reports such as the DBIR highlight the rise of application exploitation as a primary attack vector, and Contrast validates this, finding that the average application is exposed to 81 confirmed viable attacks each month, along with over 10,000 probes and unsuccessful attempts. These attempts wouldn’t be so problematic if applications weren’t increasingly vulnerable, with an average of 30 serious, exploitable vulnerabilities per application, a number that outpaces teams’ remediation capacity.
Meanwhile, applications gain an average of 17 new vulnerabilities per month, resulting in ever-growing security technical debt and a ripe playing field for malicious actors. Attackers are capitalizing on this, too, dwelling for months in enterprise environments. At the same time, defenders, including SecOps teams, are blind to application-layer attacks because their SOCs lack application-layer telemetry and insight. These points highlight why ADR is gaining momentum: security and AppSec leaders recognize this reality and are scrambling to address the modern attack surface, which requires a paradigm shift away from legacy detection and response tools and processes.

Digging Deeper

Contrast opens the report by citing the DBIR, which listed application exploits among the top 3 types of reported incidents in 2024, as well as IDC research showing that 35% of surveyed organizations experienced application-related incidents; IBM, for example, puts the average cost of a ransomware incident at $4.91 million. These trends also appear in other reports, such as M-Trends, mentioned above, which reported in 2025 that 33% of breaches began with application exploits. The same report found that SOCs are indeed flying blind, as more than half of all incidents are first discovered by an external source rather than by the organization itself.

It makes sense that application exploits are one of the dominant attack vectors, given that, as the Contrast report highlights, the average number of applications and APIs per organization, broken down by employee count, runs into the hundreds for all but the smallest enterprises. That is both a large attack surface for malicious actors to target and a daunting environment for practitioners to defend, as every organization is increasingly becoming a software company, using software to deliver value to customers and enhance its core competencies.

AI is also exacerbating the issue, with Contrast citing Forrester research showing an 807% increase in AI-related APIs in 2025 and an astronomical 1,025% rise in AI-related CVEs from 2023 to 2024. Couple these metrics with the rise of “vibe coding” and the enhanced “productivity” gains from AI-generated code, and the path we’re on as an industry is clear. These aren’t just abstract figures, either: headlines have no shortage of application-level incidents, from MOVEit to Veeam, MSPs, and more. These incidents have had, and continue to have, a significant financial impact, affecting thousands of organizations and millions of users worldwide.

Specific Insights

Contrast’s report goes on to highlight key insights that I want to unpack here as well. The first is attack volume: the average application faces 14,250 attacks per month, which equates to attackers interacting with a production application roughly every 3 minutes. These attacks fall into three categories: Probes, Suspicious Attacks, and Viable Attacks. Probes are automated attempts to discover vulnerabilities and what can be compromised. Suspicious attacks are exploitation and evasion attempts that aren’t confirmed as successful. Viable attacks are confirmed attacks against reachable, exploitable vulnerabilities, which is what organizations should be most concerned with. Contrast breaks them down per application per month by type below:

As they lay out, 75% of attack activity consists of probes and reconnaissance, with the remainder made up of suspicious and viable attacks, and less than 1% of activity involves truly viable attacks. We see a similar trend in other areas of AppSec, such as Vulnerability Management, where fewer than 5% of all CVEs are ever known to be exploited; with legacy tooling and approaches, organizations don’t know what to prioritize, and the same goes for runtime.

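
As a quick sanity check on these figures, the short Python sketch below converts the report’s 14,250 monthly attacks into an average interval between attacks and computes the share of activity represented by the 81 viable attacks per application cited earlier. It assumes a 30-day month and an even spread of attacks; the helper names are illustrative and do not come from the report.

```python
MINUTES_PER_DAY = 24 * 60

def minutes_between_attacks(attacks_per_month: int, days_per_month: int = 30) -> float:
    """Average gap between attacks, assuming they are spread evenly over the month."""
    return days_per_month * MINUTES_PER_DAY / attacks_per_month

def share_percent(part: int, whole: int) -> float:
    """Share of `whole` represented by `part`, as a percentage."""
    return 100 * part / whole

ATTACKS_PER_MONTH = 14_250  # average attacks per application per month (report figure)
VIABLE_PER_MONTH = 81       # average confirmed viable attacks per application per month

if __name__ == "__main__":
    print(f"One attack every {minutes_between_attacks(ATTACKS_PER_MONTH):.2f} minutes")
    print(f"Viable attacks: {share_percent(VIABLE_PER_MONTH, ATTACKS_PER_MONTH):.2f}% of activity")
```

Under those assumptions, the interval works out to about 3.03 minutes and viable attacks to roughly 0.57% of activity, consistent with the report’s “every 3 minutes” and “less than 1%” figures.
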
Discerning between these attack types could mean the difference between chasing noise and further burning out SOC teams, or catching real exploitation attempts and either blocking them or limiting their blast radius. That distinction isn’t possible without deep application runtime visibility and context. Defenders can also take a threat-informed approach by using attack data to prioritize their vulnerability backlog, focusing on the vulnerabilities that pose the most significant risk based on real-world attack insights.

But how are these attacks distributed? Contrast’s report provides some excellent insights here as well, showing two extremes: 30% of applications experience fewer than 3,000 attacks per month, while over 40% experience more than 30,000. This may seem strange at first, until you recognize that the 40% of highly targeted applications are likely an organization’s critical assets, those with sensitive data, vulnerable components, and public exposure, making them both more vulnerable and more enticing targets. This also validates that exposure leads to more interaction from malicious actors, particularly for applications that are Internet-facing or offer public account registration.

Much like broader industry-wide reports such as Verizon’s DBIR or Mandiant’s M-Trends, it is helpful to understand the types of attacks that are occurring. This tells us where malicious actors are focusing and supports a threat-informed defensive approach, in which organizations prioritize fixing highly targeted vulnerability types.

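
One way to picture the threat-informed prioritization described above is to count the viable attacks observed against each vulnerability type and use those counts to order the backlog, falling back on severity only to break ties. The Python sketch below does exactly that. The event format, vulnerability types, application names, and scores are all hypothetical examples, not data from Contrast’s report.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass(frozen=True)
class Vulnerability:
    app: str
    vuln_type: str
    cvss_base: float

def viable_attack_counts(events: list[dict]) -> Counter:
    """Count only confirmed viable attacks, grouped by the vulnerability type they target."""
    return Counter(e["vuln_type"] for e in events if e["category"] == "viable")

def threat_informed_order(backlog: list[Vulnerability], counts: Counter) -> list[Vulnerability]:
    """Most-attacked vulnerability types first; the CVSS base score only breaks ties."""
    return sorted(backlog, key=lambda v: (counts[v.vuln_type], v.cvss_base), reverse=True)

# Hypothetical runtime telemetry: probes vastly outnumber viable attacks.
events = (
    [{"category": "probe", "vuln_type": "xss"}] * 500
    + [{"category": "viable", "vuln_type": "sql-injection"}] * 12
    + [{"category": "viable", "vuln_type": "path-traversal"}] * 4
)

# Hypothetical backlog entries.
backlog = [
    Vulnerability("billing", "xss", 9.1),
    Vulnerability("portal", "path-traversal", 6.5),
    Vulnerability("portal", "sql-injection", 7.2),
]

if __name__ == "__main__":
    counts = viable_attack_counts(events)
    for v in threat_informed_order(backlog, counts):
        print(v.app, v.vuln_type, counts[v.vuln_type], v.cvss_base)
```

In this made-up data, the XSS finding has the highest CVSS score but drops to the bottom because it only ever attracted probes. A real program would weigh that signal alongside other context rather than ignore the finding outright.
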
Contrast’s report breaks down the attack types as well, starting with probe types, shown below:

That said, we know probes are often benign or unlikely to cause immediate harm; much like CVSS base scores for vulnerabilities, they don’t necessarily tell us where our true risks lie. Contrast follows up with the top viable attack types, as demonstrated below:

If these attacks seem familiar, they should: several of them have appeared in industry-leading sources such as the OWASP Top 10 for years. However, as the diagram from Contrast’s report above demonstrates, they remain highly problematic for organizations, leading to real-world incidents and impacts. Below is the OWASP Top 10, for comparison.

The above image is from the newly released 2025 OWASP Top 10. For a comprehensive deep dive into the major updates and changes, see my recent article covering it all, titled “The OWASP Top 10 Gets Modernized”.

One of the most insightful aspects of the Contrast report for me, and one that really emphasizes the need for ADR and deep runtime application context, is where it puts probes and viable attacks side by side. Examining the above diagram, it is evident that some attack types account for a significant portion of probes but not necessarily of viable attacks. Organizations that lack tools such as ADR, which provide deep runtime context, will be unable to separate signal from noise or give SecOps and SOC teams critical insight into actual incidents and their ongoing impact on the organization. Much like in vulnerability management, knowing what to focus on is almost as critical as, if not more critical than, knowing a problem exists, especially given that most AppSec and SecOps teams are dealing with burnout, alert fatigue, cognitive overload, and an overall sense of futility due to the never-ending onslaught of malicious activity.

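
To illustrate why runtime context matters for separating signal from noise, the toy Python sketch below contrasts a perimeter-style signature check with a hypothetical in-application check. It is not how Contrast’s sensor or any real WAF works. It assumes a simplified WAF that URL-decodes a parameter once and then pattern-matches it. On the runtime side it uses a well-known idea: flag SQL injection only when untrusted input actually reaches a query built by string concatenation and changes that query’s structure. Here, that structural change is crudely approximated by comparing token counts.

```python
import re
from urllib.parse import unquote

# Toy perimeter WAF: decodes the parameter once, then looks for SQL-ish patterns.
WAF_SIGNATURE = re.compile(r"'|--|\bunion\b|\bor\b", re.IGNORECASE)

def waf_blocks(raw_param: str) -> bool:
    return WAF_SIGNATURE.search(unquote(raw_param)) is not None

# Crude SQL tokenizer: quoted literals, words, comment markers, or single symbols.
SQL_TOKEN = re.compile(r"'(?:[^']|'')*'|\w+|--|\S")
QUERY_TEMPLATE = "SELECT id FROM users WHERE name = '{}'"

def changes_query_structure(value: str) -> bool:
    """True if splicing `value` into the query yields a different token count
    than splicing in a harmless literal (a rough proxy for injection)."""
    baseline = SQL_TOKEN.findall(QUERY_TEMPLATE.format("placeholder"))
    actual = SQL_TOKEN.findall(QUERY_TEMPLATE.format(value))
    return len(actual) != len(baseline)

def runtime_check_flags(raw_param: str, parameterized: bool, decode_passes: int) -> bool:
    """Hypothetical in-app check: it sees the value the app actually uses
    (after the app's own decoding) and how that value reaches the SQL sink."""
    value = raw_param
    for _ in range(decode_passes):
        value = unquote(value)
    if parameterized:
        return False  # bound as data, so it cannot alter the query's structure
    return changes_query_structure(value)

# (raw request parameter, endpoint uses parameterized SQL?, times the app URL-decodes it)
SCENARIOS = {
    "benign name, safe endpoint": ("O%27Brien", True, 1),
    "classic injection, vulnerable endpoint": ("x%27%20OR%20%271%27%3D%271", False, 1),
    "double-encoded injection, vulnerable endpoint": (
        "x%2527%2520OR%2520%25271%2527%253D%25271", False, 2),
}

if __name__ == "__main__":
    for label, (raw, parameterized, passes) in SCENARIOS.items():
        print(f"{label}: WAF blocks={waf_blocks(raw)}, "
              f"runtime flags={runtime_check_flags(raw, parameterized, passes)}")
```

In this toy setup, the WAF blocks a legitimate surname and misses a double-encoded payload. The in-app check flags only the requests that actually alter a concatenated query. Real runtime tools track data flow far more rigorously than this token-count heuristic.
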
Contrast’s report goes on to break out the top 5 viable attack types by industry as well as by programming language, demonstrating that attacks vary depending on your organization’s unique environment.

Vulnerability Observations

If you know me, you know I geek out over Vulnerability Management, which is why it was great to see Contrast’s report go deeper, examining metrics on software vulnerabilities in contrast (pun intended) with the attack activity discussed above. As the report emphasizes, on the other end of attack activity are vulnerabilities, in both first-party and third-party code (e.g., open source). Contrast provides a breakdown of the average vulnerability severity per application:

Only 27% of the observed vulnerabilities, on average, are Critical or High, again highlighting the futility of legacy prioritization methods such as CVSS base scores, which, I must admit, are still the dominant method of prioritization across the entire industry. Most teams aren’t stopping to account for context such as known or probable exploitation, reachability, compensating controls, organizational context, and more. This means they’re wasting a significant amount of time, time we know they don’t have, while attackers are already exploiting vulnerabilities and dwelling in environments for long periods before being discovered and remediated.

This isn’t just Contrast’s perspective, either; it is substantiated by research from others, such as Google, Mandiant, and CrowdStrike, showing how quickly attackers move from vulnerability to exploit and pivot laterally after initial exploitation. With AI, the time from vulnerability disclosure to exploit is shrinking rapidly, too, as researchers have demonstrated they can develop working exploits in as little as 15 minutes for just a few dollars.
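
The difference between severity-only and context-aware prioritization can be sketched in a few lines of Python. The fields below reflect the kinds of context described above: runtime reachability, known exploitation, an EPSS-style exploitation probability, compensating controls, and business criticality. The weights, the scoring formula, and the sample findings are arbitrary assumptions chosen for illustration. They are not a recommended formula and not Contrast’s method.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Finding:
    vuln_id: str                 # placeholder identifiers, not real CVEs
    cvss_base: float
    reachable: bool              # observed reachable at runtime
    known_exploited: bool        # e.g., listed in a known-exploited catalog
    exploit_probability: float   # 0.0 to 1.0, EPSS-style estimate
    compensating_control: bool
    business_critical: bool

def context_score(f: Finding) -> float:
    """Illustrative risk score; every weight here is an arbitrary assumption."""
    if not f.reachable:
        return 0.0  # this sketch fully deprioritizes findings not seen as reachable
    score = f.cvss_base / 10 + f.exploit_probability
    if f.known_exploited:
        score += 1.0
    if f.business_critical:
        score += 0.5
    if f.compensating_control:
        score *= 0.5
    return score

findings = [
    Finding("VULN-A", 9.8, False, False, 0.02, False, True),
    Finding("VULN-B", 7.5, True, True, 0.60, False, True),
    Finding("VULN-C", 8.1, True, False, 0.05, True, False),
]

if __name__ == "__main__":
    by_cvss = sorted(findings, key=lambda f: f.cvss_base, reverse=True)
    by_context = sorted(findings, key=context_score, reverse=True)
    print("CVSS only:    ", [f.vuln_id for f in by_cvss])
    print("Context-aware:", [f.vuln_id for f in by_context])
```

Here the CVSS-only ordering puts the unreachable 9.8 finding first. The context-aware ordering promotes the reachable, known-exploited 7.5 finding instead.
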

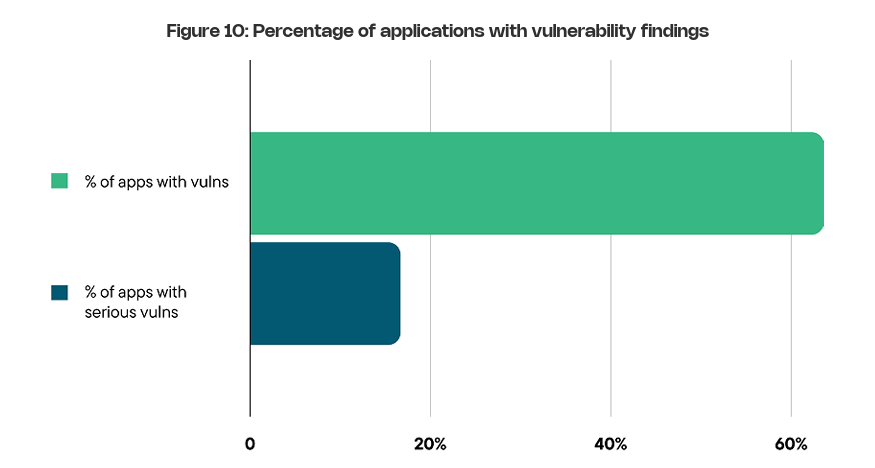

Defenders, by contrast, are not nearly so fast: according to IBM, organizations take more than 6 months to identify a breach and over 2 months to contain it. Arguably, the lack of runtime visibility and context at the application layer, along with insufficient observability and metrics in the SOC due to its traditionally endpoint-centric nature, leaves organizations operating in the dark and struggling first to identify and then to contain breaches.

It’s worth noting that Contrast focuses on vulnerabilities that are truly reachable by attackers at runtime, going beyond not only base scores but also static scans, which don’t reflect runtime risk. Contrast brings a runtime-centric approach that couples visibility with accuracy. SAST, by comparison, must interpret code to infer how it will run and apply its policies, which leads to notoriously high levels of false positives and frustration for developers and AppSec practitioners alike. This is highlighted in the diagram below from Contrast’s report, which shows that fewer than 20% of applications in the enterprise have serious vulnerabilities. That would be good news, except that organizations without tools such as ADR and deep runtime context are blind to which applications and vulnerabilities are genuinely serious.

Another challenge defenders face is Sisyphean: they push a boulder up a hill, only for it to roll back down over them. Contrast’s report shows that it takes organizations an average of 84 days to remediate even critical vulnerabilities, and that they remediate only about six vulnerabilities per application per month. Compare that with the reality that the average application gains more than 17 new vulnerabilities each month, resulting in a backlog of security technical debt that grows faster than our ability to address it.

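
The arithmetic behind that growing backlog is simple enough to sketch. The hypothetical helper below uses the report’s averages of roughly 17 new vulnerabilities and about six remediations per application per month to project the backlog month by month. It assumes both rates stay constant, which is why the growth comes out linear, and it starts from an empty backlog purely for illustration.

```python
def project_backlog(start: int, new_per_month: int, fixed_per_month: int, months: int) -> list[int]:
    """Backlog size at the end of each month, assuming constant inflow and remediation rates."""
    sizes = [start]
    for _ in range(months):
        sizes.append(max(0, sizes[-1] + new_per_month - fixed_per_month))
    return sizes

NEW_PER_MONTH = 17   # average new vulnerabilities per application per month
FIXED_PER_MONTH = 6  # average vulnerabilities remediated per application per month

if __name__ == "__main__":
    sizes = project_backlog(start=0, new_per_month=NEW_PER_MONTH,
                            fixed_per_month=FIXED_PER_MONTH, months=12)
    print("Backlog after 12 months:", sizes[-1])
    # To hold the backlog steady, remediation must at least match inflow.
    print(f"Required remediation rate: {NEW_PER_MONTH / FIXED_PER_MONTH:.1f}x the current rate")
```

Under these assumptions, each application adds 11 vulnerabilities to its backlog every month, about 132 per year. Teams would need to fix nearly three times as many vulnerabilities per month just to hold steady.
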
This imbalance is why vulnerability backlogs keep growing year over year; some research has found that large organizations have vulnerability backlogs in the hundreds of thousands or even millions. Contrast illustrates this challenge with a “Vulnerability Escape Rate” (VER), which it describes as “the amount of work defenders have on their plate to maintain a steady-state of risk”. Contrast’s diagram below shows this runaway backlog growing linearly month after month, with organizations having little to no hope of catching up.

But we’ve got an EDR & a WAF!

When some people first hear about ADR, a common retort is that they’re fine because they have endpoint detection and response (EDR) and a web application firewall (WAF). However, research demonstrates that most modern attacks evade these tools. Contrast Labs conducted comprehensive testing to explain how this happens and to identify the gaps that EDR and WAF leave, which ADR addresses. Their research demonstrated that EDR misses many common attacks or only alerts after an attack has progressed beyond the application and is already impacting endpoints. WAFs, meanwhile, operate at the network perimeter and struggle to distinguish attacks that exploit application logic from benign noise. That creates downstream issues for the SOC and often results in WAF rules being disabled or alerts being ignored. Both scenarios leave organizations either blind to application-level attacks or aware of them only after the damage is done, unable to mitigate the organizational impact.

Closing Thoughts

As laid out throughout the article, the rise of ADR is being driven by the realities of the software attack landscape.
While we’ve made some missteps in implementing “shift left”, none of this is to say that injecting security earlier into the SDLC and using tools such as SAST and SCA is futile. However, as I have written about extensively in other articles, it needs to be done thoughtfully, with an emphasis on context such as known exploitation, exploitation probability, reachability, and business criticality. ADR represents a chance to bookend the SDLC with security, building on earlier shift-left efforts while recognizing the importance of runtime visibility into production at the application layer. As we discussed, that visibility has often been missing for SOC and SecOps teams due to gaps in tools such as EDR and WAF when it comes to application-layer threats. Coupling ADR with existing AppSec methodologies can bring a comprehensive defense-in-depth approach to an ecosystem where application vulnerability exploits are on the rise as a primary attack vector, closing gaps in application-layer visibility and monitoring for SecOps teams.

Contrast Security is one of the leading teams championing this runtime revolution in AppSec. If you want to try Contrast Security in a sandbox environment, you can do that here!
