Resilient Cyber Newsletter #52

- Chris Hughes from Resilient Cyber <resilientcyber+resilient-cyber@substack.com>


2025 Cyber Comp, Responsibility and Structure Results, SMB Security Budgets, Benchmarking LLMs for Alert Triage and Red Teaming, Next-Gen AI-Native Pen Testing & AWS Threat Technique Catalog

Welcome

Welcome to issue #52 of the Resilient Cyber Newsletter. This marks one whole year since I embarked on the journey of starting a newsletter for the community focused on Cyber Market Dynamics, Leadership, AI, AppSec, Vulnerability Management, and Software Supply Chain Security. I have been sharing resources, thoughts, discussions, and more with the community, primarily on LinkedIn, for roughly a decade, but I never formalized storing all of the resources and making them available on a weekly basis, until now! If you’ve been on this journey with me for the past year, I truly appreciate your support. I hope you’ve enjoyed the resources and shared them with your network. We work in a highly dynamic, quickly changing field, especially with AI and its intersection with cybersecurity, and I’m learning alongside the community every day. Below is another excellent collection of timely and relevant resources, and I look forward to continuing to share many more in the coming weeks and years.

Interested in sponsoring an issue of Resilient Cyber? This includes reaching over 45,000 subscribers, ranging from Developers, Engineers, Architects, CISOs/Security Leaders, and Business Executives. Reach out below!

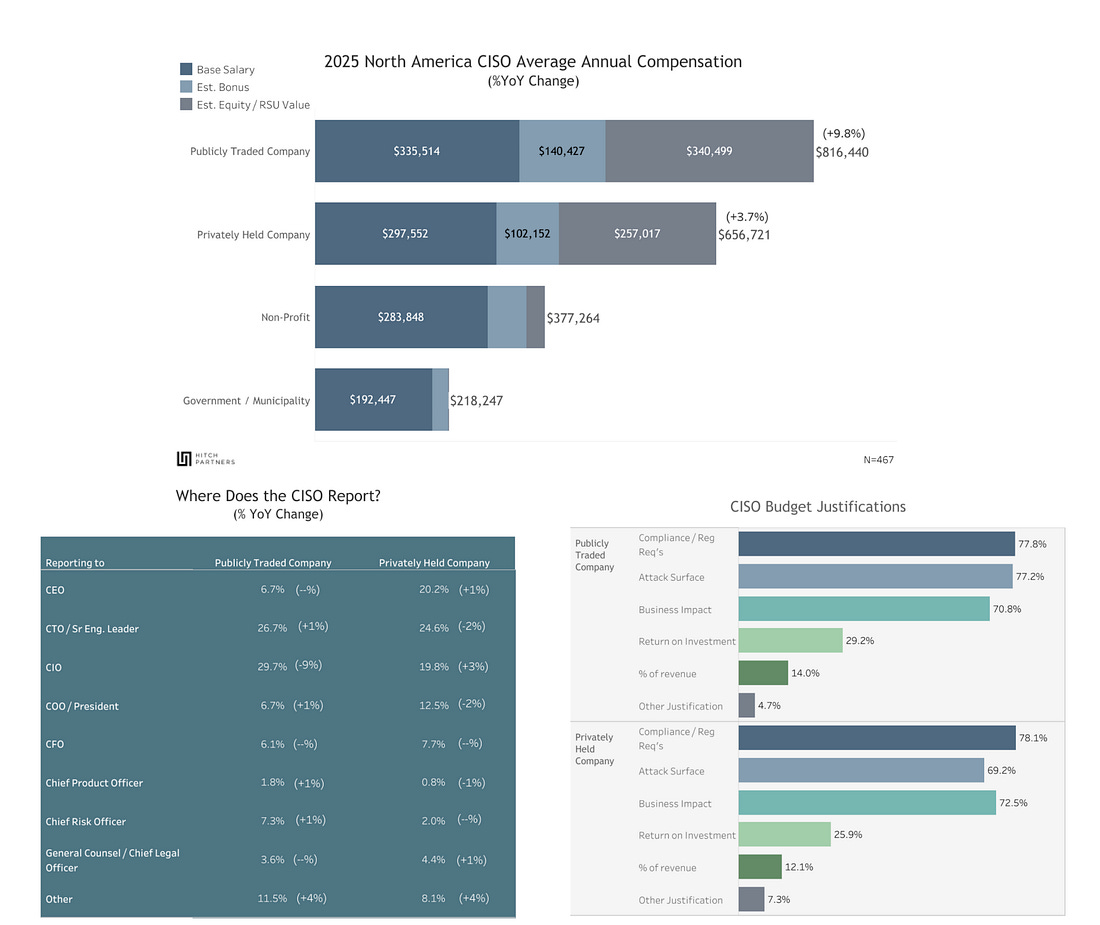

Cyber Leadership & Market Dynamics

Hitch Partners 2025 Security Organization Compensation, Responsibilities, and Structure Survey Results

If you're like me, you're always looking for insights into CISO and security leader compensation, responsibility trends, and reporting structures. Some key takeaways:

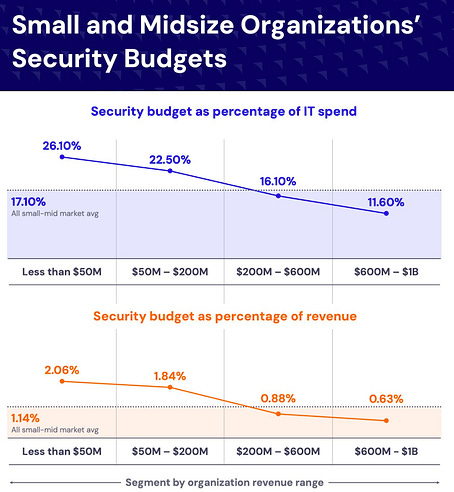

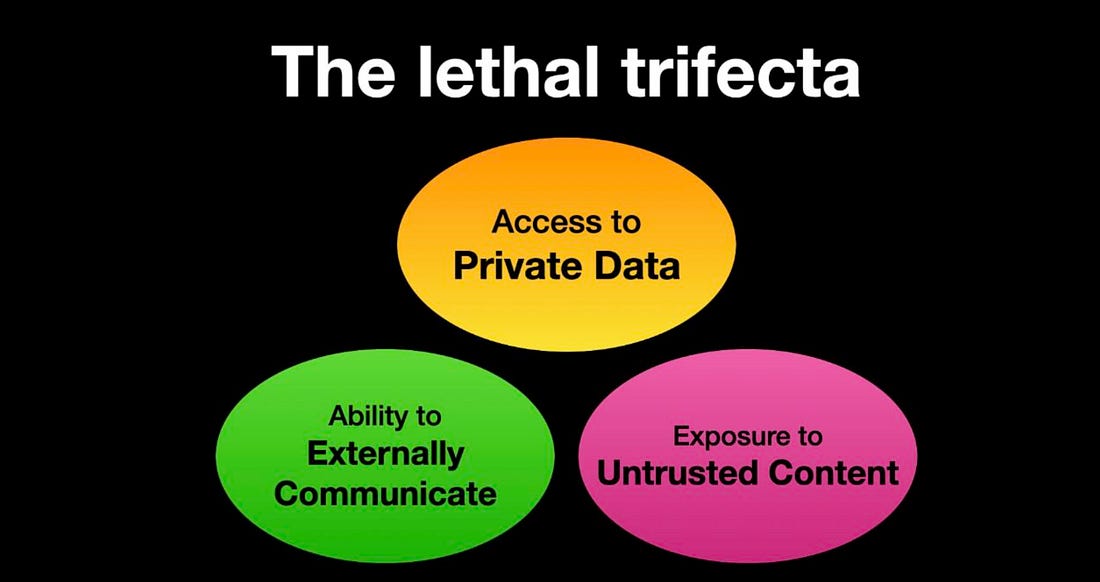

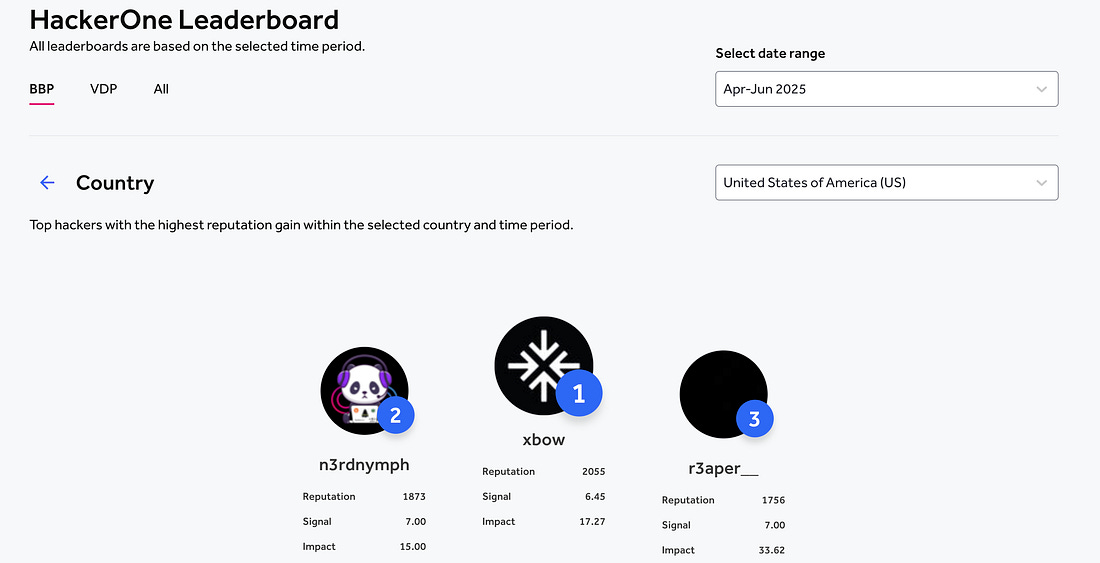

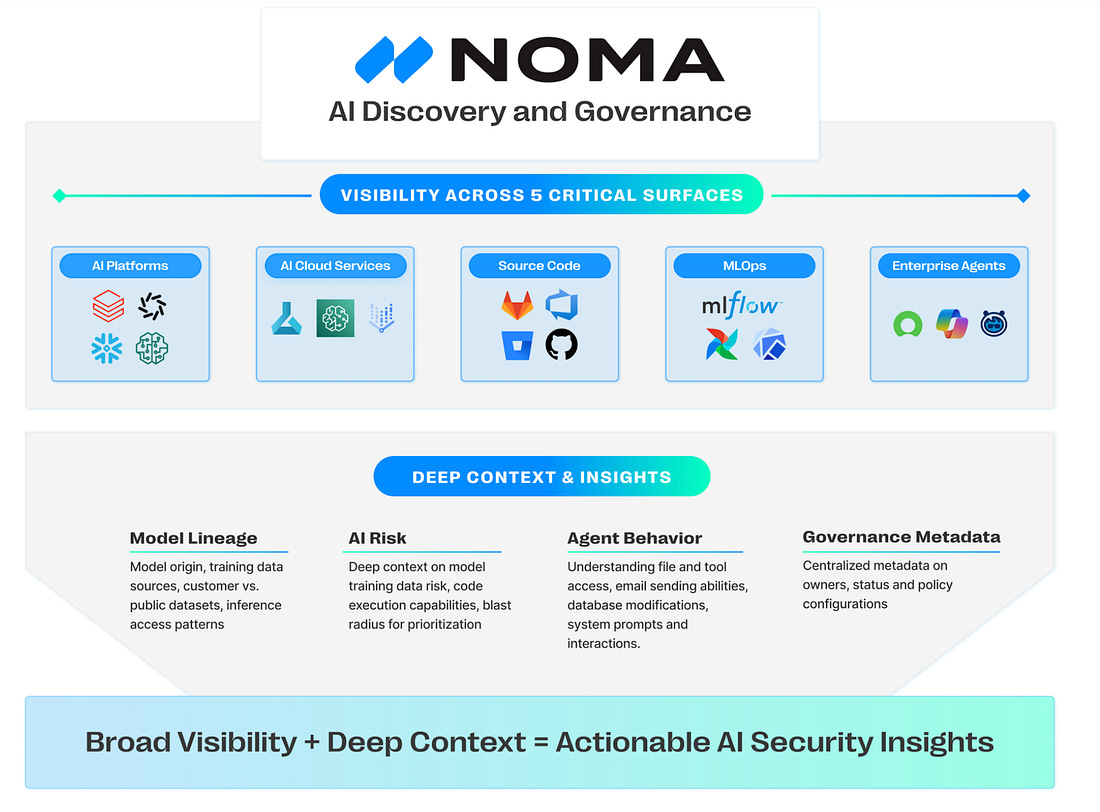

Verizon’s DBIR vs. Cyentia’s IRIS

I have written articles discussing Verizon’s DBIR findings this year and last, and recently did a deep dive on Cyentia IRIS with Cyentia’s CEO, Wade Baker. Industry leader and author Rick Howard recently authored a LinkedIn article comparing the two. He argues that the DBIR isn’t that useful and is more news than intelligence, while IRIS, though it includes some news, is more of an intelligence report, something you can use to make actionable decisions. I agree with him. His primary point is that IRIS better equips you to make specific decisions regarding investments, focus areas, threats, and more. Listen to the discussion I had with Wade Baker below, and you’ll likely come to the same conclusion.

Agentic Vulnerability Management Startup Maze Announces $25M Series A

News recently broke that British cloud security startup Maze announced a $25M Series A, raising a total of $31M since launching nine months ago. They are coming at vulnerability management from an interesting angle, including using swarms of autonomous agents to hunt and fix vulnerabilities. They break workloads into concurrent tasks and test every possible attack path, looking to home in on the specific vulnerabilities that are exploitable/reachable in cloud environments, and they pride themselves on being an AI-native security platform.

SMB Security Budgets

I recently shared the IANS report 2025 Compensation and Budget for CISOs in the SMB market. It provided insights into security budgets, compensation for CISOs and security leaders, and more. One interesting insight was security budget as a percentage of IT spend and/or revenue. Essentially, the smaller the organization, the larger the share of IT spend and revenue the security budget represents, and that share shrinks as the organization's IT spending and revenue grow.
This makes sense given that larger firms have both larger IT spending and larger revenue, but I was personally surprised to see that security budgets are nearly 20% for SMBs under $50M, which shows just how seriously many of them take security threats, at least from a resource allocation perspective.

AI

Benchmarking LLMs for Autonomous Alert Triage

We continue to see the exploration of AI for security use cases, including SOCs and alert triage. However, we lack insight into how those models and tools actually perform in use cases like the SOC. AI SOC company Simbian recently attempted to tackle this gap by benchmarking LLMs for autonomous alert triage. They cite existing benchmarks such as CyberSecEval and CTIBench but state that they aren’t as realistic and lack the depth needed. Simbian conducted a real-world test using their Simbian AI SOC agent and an evaluation process grounded in evidence and data. As shown above, they used popular models from Anthropic, OpenAI, Google, and DeepSeek. The models completed over half of the investigation tasks, with results ranging from 61% to 67%. Simbian stressed the importance of thorough prompt engineering and agentic flow engineering for effectiveness: initial tests produced lower results, which led them to improve their prompts.

The Lethal Trifecta for AI Agents

We see a lot of excitement about Agentic AI, Agents, and their potential use cases across countless industries and environments.

AIRTBench: Measuring Autonomous AI Red Teaming Capabilities in LLMs

Speaking of benchmarks, another area of cyber being explored with AI is AI red teaming, or more broadly, AI models' ability to autonomously discover and exploit vulnerabilities, including in AI/ML systems themselves. Ads Dawson and other researchers from Dreadnode recently shared the arXiv paper introducing their “AIRTBench,” which measures autonomous AI red teaming capabilities in LLMs.
They used 70 realistic CTF challenges that required the models to write Python code to interact with and compromise AI systems. The findings are interesting: Claude-3.7-Sonnet was the clear leader, solving 43 challenges (61% of the total suite, with a 46.9% overall success rate), followed by Gemini-2.5-Pro, GPT-4.5-Preview, and DeepSeek R1. They found that frontier models far outpaced open source alternatives; the best open source model, Llama-4-17B, solved only seven challenges. The researchers concluded that, compared to human researchers, LLMs could solve challenges with remarkable efficiency, doing in minutes what takes humans days or weeks, further validating the promise of LLMs for red teaming and security use cases.

xbow Tops HackerOne Leaderboard

Speaking of AI’s ability to identify and exploit vulnerabilities autonomously, news broke recently that xbow, an AI-native startup that aims to boost offensive security (OffSec) with AI, took the top spot on the HackerOne leaderboard. This seems to be further real-world validation of the effectiveness of AI in optimizing OffSec and vulnerability identification and exploitation. That said, some have pointed out that these tools are great at quickly finding known vulnerabilities and simple bugs, while others emphasize that humans will still have a role to play, especially for complex bugs involving business logic and nuance.

AI Discovery Orientation

Much like other areas of cybersecurity, visibility is key, which is why controls such as hardware/software asset inventory are critical. We hear the same about AI security, with discussions related to AI discovery and inventory, but what does that actually entail? This recent blog from Noma Security discusses both how AI discovery is the foundation of AI security and the importance of demanding breadth and depth for a useful AI inventory.
They emphasize that breadth means complete coverage across the five critical surface areas including:

Depth, on the other hand, focuses on deep context that leads to action, and involves insights into AI components such as:

Decoding the Building Blocks: MCP, A2A, and AG-UI

We continue to hear a lot of hype about Agentic AI, including for cyber use cases such as AppSec, GRC, and SecOps, among others. Many innovative startups and existing industry leaders are working toward disrupting the SOC through AI and Agents.

AppSec, Vulnerability Management and Software Supply Chain

Next-Gen Pentesting: AI Empowers the Good Guys

We've seen a lot of excitement around AI, both the need to secure it and its ability to help with security use cases.

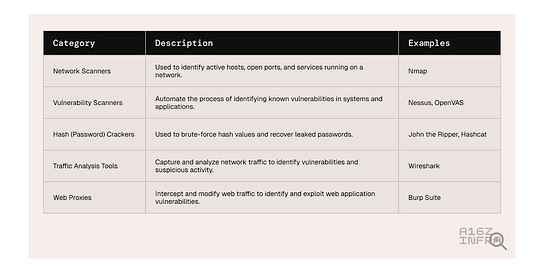

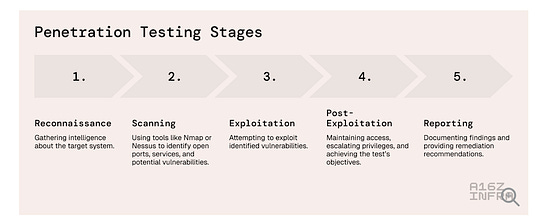

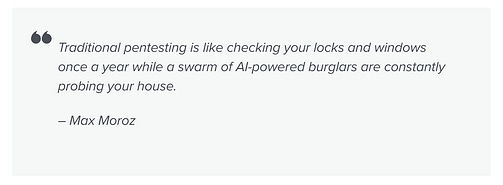

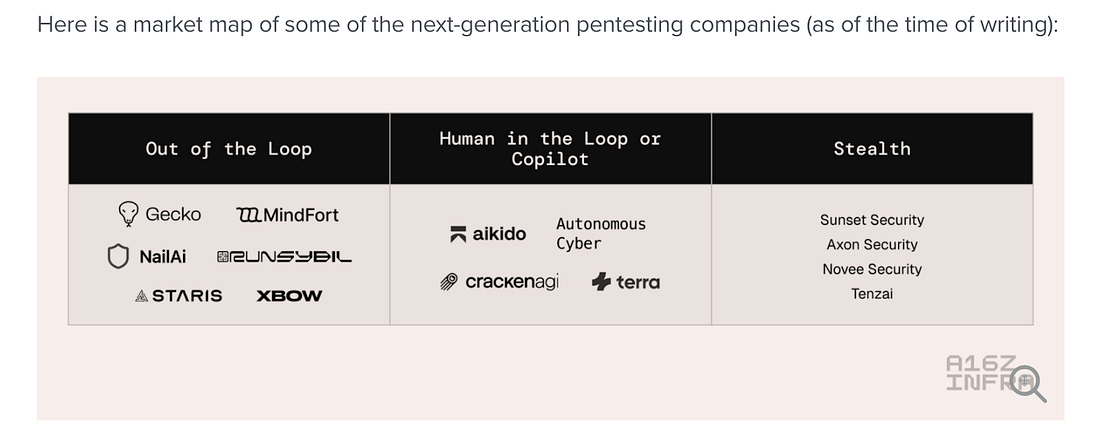

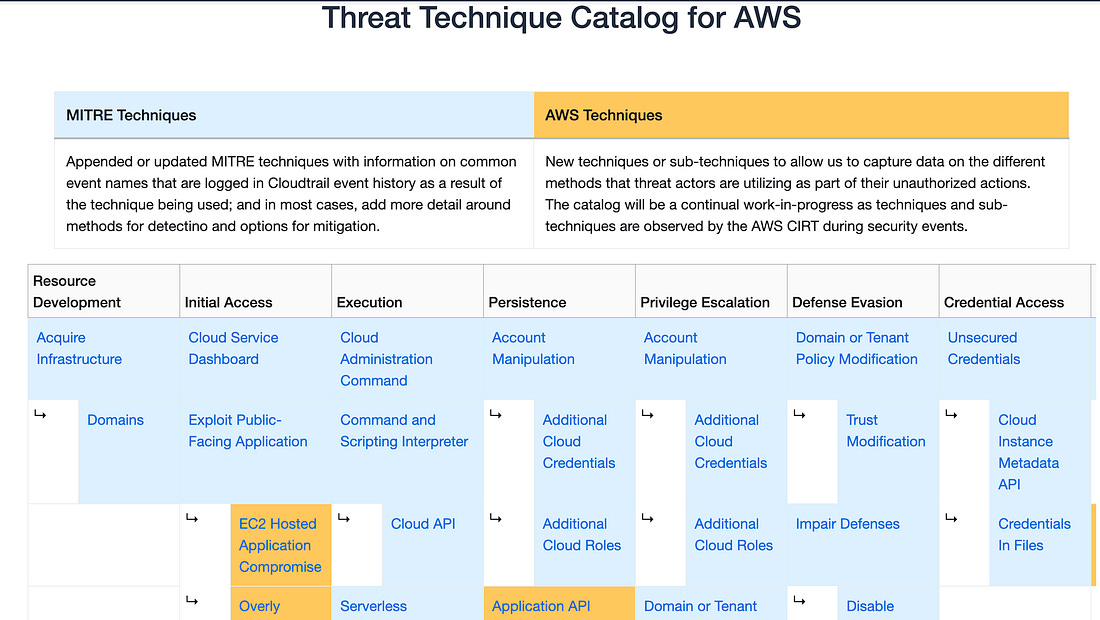

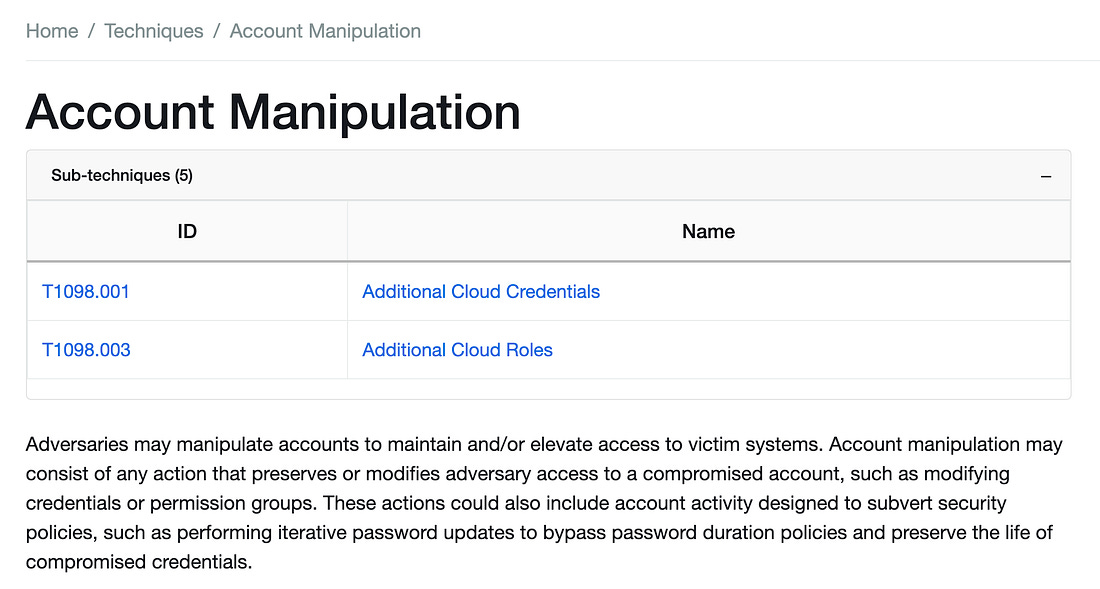

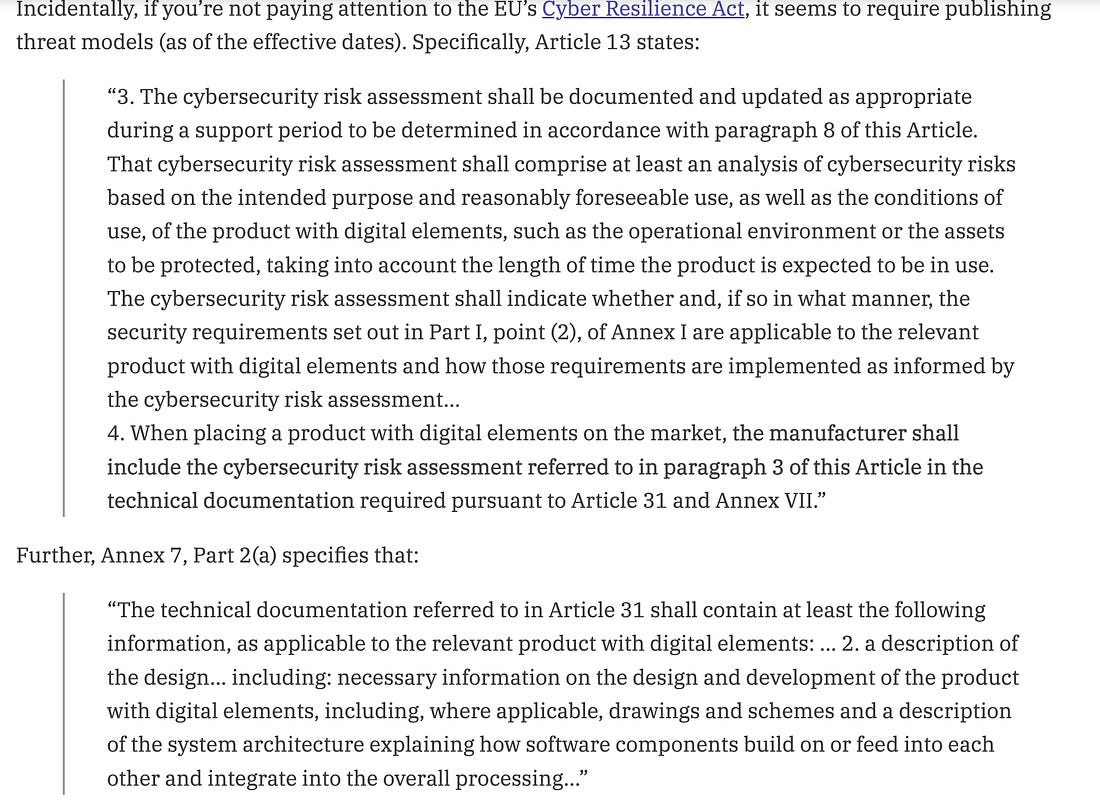

The article covers some of the traditional categories of pentesting tools, with some examples (notice the emphasis on open source). It also discusses the typical pentesting phases, from reconnaissance through reporting. It explains why traditional pentesting is no longer enough, due to factors such as mounting backlogs of unpatched vulnerabilities, growing numbers of CVEs, and the complexity of modern environments driven by Cloud, DevOps, and SaaS. I particularly liked the below quote from Max Moroz, as it summarizes not just pentesting but the current state of compliance in cyber too: a snapshot-in-time model with cyclical reviews and assessments, while both the threat and the underlying environments being assessed are constantly changing.

The article makes the case that the pentesting of the future will shift from labor-constrained engagements to scalable AI-native systems able to match the pace of modern development by combining LLMs, traditional exploit tooling, real-time telemetry, and proprietary data (which is the actual moat, in my opinion). The overarching theme is a move away from a model constrained by expertise, labor, and expense toward software-first, continuous, AI-augmented systems. They call out some of these in a market map.

The article goes on to argue that AI will rewrite the pentest playbook: it thinks like a hacker, having been trained on real-world exploits, codebases, and system behavior to identify flaws in business logic; it shortens testing cycles because it can run continuously; it isn't constrained to legacy scopes dictated by human staffing; and it focuses on verifiable exploits rather than noise. While much of this remains to be seen, I certainly think AI will disrupt pentesting, much as it will the broader cyber ecosystem, as we lean into this technology to take advantage of it just as attackers already are.
The piece does discuss limitations, such as the depth and context that human expertise can bring where AI tooling is still evolving, as well as problems around accountability. Shout out to folks such as Jason Haddix, who is also cited.

Threat Technique Catalog for AWS

AWS remains one of the most dominant CSPs in the world, where organizations store and run critical and sensitive workloads. This Threat Technique Catalog for AWS describes techniques threat actors use to take advantage of security misconfigurations or compromised credentials on the customer side of the shared responsibility model. It’s also based on MITRE ATT&CK. Techniques are mapped to AWS CloudTrail event names where the malicious actions are logged in CloudTrail, which can assist during incident response and investigations. Users can navigate through the various phases and lifecycle of attacks, dig into specific techniques such as “Account Manipulation,” and see each technique’s ID, how it can be detected, and how it can be mitigated.

Publish Your Threat Model?

To many, publishing threat models, risk assessments, and other potentially sensitive artifacts that may be useful to attackers seems like blasphemy. However, Adam Shostack of Threat Modeling fame makes the case that it is exactly what we should be doing. In addition to an essay arguing for the practice, Adam points to emerging compliance requirements, particularly from the compliance juggernaut, the EU, that may require it.

The Cursor Moment for SecOps: How MCP and AI Coding Agents are Enabling the Next Evolution of Detection Engineering

Jack Naglieri continues to post thought-provoking content about the role of AI, Agents, and MCP and their implications for the future of SecOps. His latest piece is no exception. He discusses the current state of manual detection engineering and the traditional detection engineering process, including how cumbersome and time-consuming it is.
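For contrast with the AI-assisted workflow, here is a minimal sketch of the kind of hand-written rule a detection engineer might maintain today. It assumes a CloudTrail log file in its standard JSON shape (a top-level `Records` list whose entries carry fields such as `eventName`, `userIdentity`, and, on failed calls, `errorCode`) and flags successful IAM permission changes plus repeated denied calls. The `SUSPICIOUS_EVENTS` table, the denial error codes chosen, and `DENIED_THRESHOLD` are hypothetical choices for illustration; they are not taken from Jack's piece or from the Threat Technique Catalog for AWS.

```python
import json
from collections import Counter

# Hypothetical mapping from CloudTrail eventName values to a coarse technique
# label. Illustrative only; it is not copied from the AWS threat catalog.
SUSPICIOUS_EVENTS = {
    "AttachRolePolicy": "Account Manipulation",
    "PutRolePolicy": "Account Manipulation",
    "UpdateAssumeRolePolicy": "Account Manipulation",
    "AttachUserPolicy": "Account Manipulation",
    "CreateAccessKey": "Account Manipulation",
}

# Common "access denied" error codes seen in CloudTrail (not exhaustive).
DENIED_ERRORS = {"AccessDenied", "AccessDeniedException", "Client.UnauthorizedOperation"}

# Hypothetical tuning knob: alert once a principal hits this many denials.
DENIED_THRESHOLD = 3


def principal(record: dict) -> str:
    """Best-effort identifier for whoever made the API call."""
    identity = record.get("userIdentity", {})
    return identity.get("arn") or identity.get("type", "unknown")


def detect(log_text: str) -> list[tuple[str, str]]:
    """Scan one CloudTrail log file (JSON with a top-level "Records" list)."""
    records = json.loads(log_text).get("Records", [])
    alerts = []
    denied = Counter()
    for record in records:
        name = record.get("eventName", "")
        who = principal(record)
        error = record.get("errorCode")
        # Rule 1: a permission-changing call that succeeded (no errorCode).
        if name in SUSPICIOUS_EVENTS and error is None:
            alerts.append((who, f"{SUSPICIOUS_EVENTS[name]}: {name}"))
        # Rule 2: tally denied calls per principal for threshold alerting.
        if error in DENIED_ERRORS:
            denied[who] += 1
    for who, count in denied.items():
        if count >= DENIED_THRESHOLD:
            alerts.append((who, f"{count} denied API calls"))
    return alerts


if __name__ == "__main__":
    bob = {"arn": "arn:aws:iam::111122223333:user/bob"}
    sample = {"Records": [
        {"eventName": "AttachRolePolicy",
         "userIdentity": {"arn": "arn:aws:iam::111122223333:user/alice"}},
        {"eventName": "GetObject", "errorCode": "AccessDenied", "userIdentity": bob},
        {"eventName": "ListBuckets", "errorCode": "AccessDenied", "userIdentity": bob},
        {"eventName": "DescribeInstances",
         "errorCode": "Client.UnauthorizedOperation", "userIdentity": bob},
    ]}
    for alert in detect(json.dumps(sample)):
        print(alert)
```

Every new technique or edge case means another hand-tuned table entry or threshold like these, which is the kind of upkeep Jack argues AI-assisted, MCP-connected workflows can reduce.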
He discusses how a significant paradigm shift can occur by connecting your SIEM via MCP to leading AI tools such as Cursor, Claude, Goose, or others and asking questions about unusual patterns, log insights, user behavior, and more. The days of manually combing through logs and system data are quickly moving behind us as we pivot to using natural language and AI to gain insights that would have been impossible without massive human capital. Jack walks through a practical example of having AI analyze CloudTrail logs and produce reports on activity affecting service roles, access attempts, IAM permission changes, and other potentially concerning activities. Jack discusses how MCP can function as glue, connecting various platforms and tools, such as your SIEM, ticketing system, on-call rotation tooling, Slack channels, and more, within the same AI session and into a single thread, so we move from signal to outcomes in mitigating organizational risk. Ironically, MCP and AI create the ever-elusive “single pane of glass,” weaving together insights across tools and platforms and minimizing context switching and overload for security practitioners and analysts. Jack discusses the time savings and multiplier effects of the new AI-augmented detection engineering workflow, offering strategic and tactical improvements to the practice.

This Is How They Tell Me Bug Bounty Ends

We continue to see rapid exploration of the art of the possible when it comes to AI, including cybersecurity use cases such as Offensive Security/Pen Testing, as I discussed above. We’ve seen promising startups such as Xbow and Ethiac demonstrate the power of automated, autonomous, AI-powered pen testing. That said, as Joseph Thacker lays out in this thought-provoking piece, the disruption to bug bounty, and to offensive security more broadly, is likely to happen gradually rather than immediately.
He discusses how these emerging tools and companies are able to find 1% of vulnerabilities on live production applications, typically simple, single-step, verifiable bugs, but that share will grow over time to larger percentages and more complex bugs and flaws. However, that doesn’t mean bug bounty will go away, even if some leverage these tools to capture bounties and scale their findings. Joseph makes the case that there will always be a need for hackers: the creative, innovative types who grind, find nuanced problems and flaws, refuse to give up, and even leverage these tools to be more effective than ever.

Demystifying Confidential Computing

There’s been a lot of hype and buzz around confidential computing lately, but what exactly is it? This is a great primer from Edera, which focuses on making secure computing simple, with an emphasis on Kubernetes, multi-tenancy, and AI. As they put it, confidential computing involves encrypting data “in use,” complementing encryption of data at rest and in transit, to provide full lifecycle coverage of data confidentiality regardless of the data's state. Edera states confidential computing is projected to reach a TAM of $350M by 2032. Edera emphasizes that under a confidential computing implementation, other apps, the host OS, the hypervisor, system admins, and even those with physical access cannot view or tamper with a running program. Edera states that confidential computing is primarily achieved through hardware-based, attested Trusted Execution Environments (TEEs), involving hardware next to the CPU that performs operations on encrypted memory. They show that there are various TEE designs from different vendors and providers, each with its own considerations and security benefits. TEEs often involve keys generated by the TEE and monitoring by privileged hardware, with further isolation at the kernel and/or user level.
The blog goes on to discuss current applications and emerging use cases for confidential computing, including:

They also discuss the costs of confidential computing, including the hardware itself, potentially higher compute costs, and limits on the number of applications that can run concurrently on a single TEE. These costs continue to decline with modern implementations and innovations. The blog has much more detail and insight, but ultimately, renewed interest in confidential computing is being driven by increased AI adoption, virtualized workloads, and regulatory compliance requirements calling for stricter controls on segmentation, confidentiality, and integrity of data, including data in use.
