OWASP Top 10 for Agentic Applications

- Chris Hughes from Resilient Cyber <resilientcyber@substack.com>

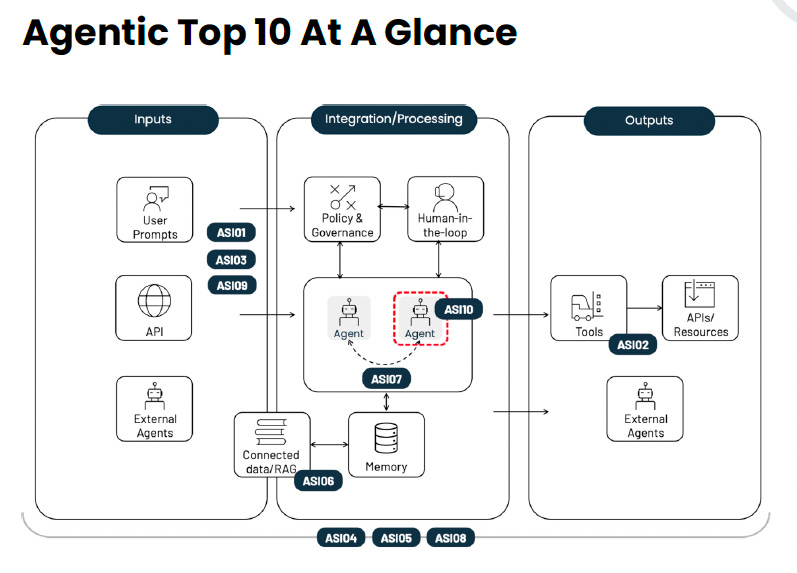

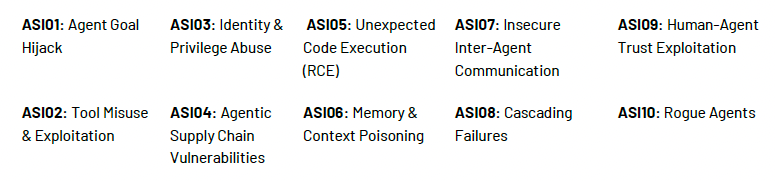

As I’ve written about extensively, 2025 has been dubbed the year of AI Agents, although many now suspect it is more accurate to say we’re entering the decade of Agentic AI. From startups and VCs to enterprises and industry leaders, everyone is excited about the potential for Agentic AI across every industry and vertical, including within Cybersecurity, where we’re seeing use cases in GRC, AppSec, SecOps and of course, from our dear friends, malicious actors, as well.

That’s why I’m really excited to share that OWASP recently published the first official “Top 10 For Agentic Applications”, and I had a chance to participate as a member of the Agentic Security Initiative (ASI) Distinguished Review Board. The team unveiled the Top 10 this week and I wanted to take a moment to put out a blog summarizing some thoughts and key takeaways.

Agentic AI Top 10

The team provided this concise graphic showing the top 10 risks, and where they fit conceptually when thinking about Agents and their potential activities and interactions. I’ll briefly walk through each of the Top 10 below, but I strongly recommend reading the entire publication for a much deeper dive into each of the Top 10, as well as the excellent Agentic AI - Threats and Mitigations document. I previously covered that document in a blog titled “Agentic AI Threats and Mitigations: A deep dive into OWASP’s Agentic AI Threats and Mitigations Publication”.

Agent Goal Hijack

Agents by their nature are goal focused, usually carrying out tasks in pursuit of a defined goal, and they can and will function either semi- or fully autonomously. Because agents and their underlying LLMs operate in natural language, they are susceptible to manipulation. OWASP defines this risk as:

It’s the double-edged nature of Agents and LLMs: they are accessible to a wide range of users who can interface in plain language, but as a result, Agents can struggle to distinguish valid instructions from invalid and/or malicious inputs. We’ve seen a ton of this with prompt injection in particular, both direct and indirect, and when agents follow malicious or dangerous instructions, it can have devastating consequences for organizations and users. That said, there are other methods as well, such as deceptive tool outputs, malicious artifacts and more.

OWASP’s Top 10 highlights the potential for attackers to manipulate an agent’s objectives, task selection and decision pathways. They highlight examples of the vulnerability such as indirect prompt injection embedded in web pages, documents, calendars or messages, and even malicious prompt overrides.

OWASP goes on to provide an extensive list of potential prevention and mitigation techniques, including but not limited to treating all natural-language inputs as untrusted, enforcing least privilege for agents/tools and validating user and agent intent before execution at runtime, especially for high-impact actions.

Tool Misuse & Exploitation

Tool Misuse and Exploitation is one that has seen a ton of focus this year, especially with the rise in popularity of protocols such as the Model Context Protocol (MCP), which allows Agents to connect to and utilize internal/external tools, data sources and so on. OWASP defines Tool Misuse & Exploitation as:

OWASP does highlight that this risk is where the agent is operating within its authorized privileges but is utilizing tooling in unsafe or unintended ways, such as deleting data, over-consuming APIs and facilitating data exfiltration. They provide examples of this vulnerability such as over-privileged tool access, unvalidated input forwarding, unsafe browsing and external data tool poisoning.

Given how rapidly organizations are adopting protocols such as MCP and how poorly we’ve done historically with concepts such as least-permissive access control, it is easy to see how this not only could be, but definitely will be, a problem for many organizations in the years to come.

To mitigate the risks associated with tool misuse and exploitation, OWASP recommends actions such as least agency (a new concept for many, tied to agents’ autonomous nature) and least privilege for tools, action-level authentication and approval, and execution sandboxes and egress controls.

Identity and Privilege Abuse

Ah, the bane of cybersecurity’s existence for years, IAM. Every year, leading reports such as Verizon’s DBIR and Google’s M-Trends highlight how impactful credential compromise is as an attack vector, and countless other reports highlight how abysmal we in cybersecurity, and IT in general, are when it comes to proper Identity and Access Management (IAM). This problem will persist with Agentic AI, and in fact will be exacerbated, due to the exponential rise of Agents and their associated non-human identities (NHI) to come.
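To make the tool misuse mitigations discussed above a bit more concrete, here is a minimal Python sketch of least privilege for tools combined with action-level approval. It is only an illustration under my own assumptions, not something taken from the OWASP publication: the tool names, the `HIGH_IMPACT_TOOLS` set, `ToolPolicy` and `authorize_tool_call` are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical set of tools treated as high impact (illustrative only).
HIGH_IMPACT_TOOLS = frozenset({"delete_records", "send_email"})


class ToolCallDenied(Exception):
    """Raised when a tool call is outside policy or lacks approval."""


@dataclass(frozen=True)
class ToolPolicy:
    """Per-agent allowlist: the agent may only call tools listed here."""
    allowed_tools: frozenset = frozenset()


def authorize_tool_call(policy, tool_name, approved_by_human=False):
    """Return True if the call is permitted, otherwise raise ToolCallDenied."""
    # Least privilege: anything not explicitly allowed is denied by default.
    if tool_name not in policy.allowed_tools:
        raise ToolCallDenied(f"{tool_name} is not on this agent's allowlist")
    # Action-level approval: high-impact tools need a sign-off for each call.
    if tool_name in HIGH_IMPACT_TOOLS and not approved_by_human:
        raise ToolCallDenied(f"{tool_name} requires human approval")
    return True


# Usage: a read-only lookup passes, a destructive call needs approval.
policy = ToolPolicy(allowed_tools=frozenset({"search_docs", "delete_records"}))
authorize_tool_call(policy, "search_docs")
authorize_tool_call(policy, "delete_records", approved_by_human=True)
```

The deny-by-default check comes first on purpose: an agent that was never granted a tool should not be able to reach the approval step at all. A real deployment would also need the sandboxing and egress controls mentioned above, which this sketch does not attempt.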

Agent identity will involve the agent’s persona and its associated authentication material (e.g. API keys, OAuth tokens and delegated user sessions). Potential risks include un-scoped privilege inheritance, memory-based privilege retention and data leakage, and cross-agent trust exploitation (confused deputy), along with other examples. OWASP cites potential attack scenarios such as delegated privilege abuse, memory-based escalation and identity sharing.

To mitigate these sorts of risks, OWASP recommends enforcing task-scoped, time-bound permissions, isolating agent identities and contexts, and mandating per-action authorization.

It’s easy to see how mitigating identity-focused agent risks will be problematic for organizations, especially without robust platforms and capabilities to help them do so, given they have historically struggled with these concepts for human users, which agents are now poised to exponentially outnumber. Even before the rise of Agents, I had begun writing extensively about NHI risks.

Agentic Supply Chain Vulnerabilities

One of the topics near and dear to me is Supply Chain Security, given I wrote one of the leading books on the topic, titled “Software Transparency: Supply Chain Security in an Era of a Software-Driven Society”. When Tony Turner and I wrote the book, AI and especially Agents were in the early innings (in the context of widespread usage of LLMs or excitement around Agentic AI). Our book focused heavily on the risks associated with Open Source and Proprietary software and the associated complexities and risks riddling the software supply chain. While the landscape may have changed with LLMs and Agents, many of those principles still apply. In the context of Agentic AI, OWASP describes Agentic Supply Chain Vulnerabilities as:

Unlike traditional software, for Agents this includes things such as models, model weights, tooling, plugins, and even the associated protocols supporting the Agentic ecosystem (e.g. MCP, A2A etc.). Much like with open and closed source software, introducing outside code into environments poses risks, and the same goes for agents, with the added risk of hidden instructions or deceptive behaviors. We’ve seen a lot of research lately demonstrating how hidden instructions can even facilitate zero-click exploits of LLMs and Agents.

Some of the notable risks OWASP points to here include poisoned prompt templates that are loaded remotely, tool-descriptor injection, impersonation and typosquatting, and compromised MCP servers. To prevent these sorts of risks, OWASP recommends provenance (e.g. SBOM/AIBOM), containment, inter-agent security, pinning, and continuous validation and monitoring.

Unexpected Code Execution

Unexpected code execution, closely related to remote code execution (RCE), is defined by OWASP as:

The rise of “vibe coding” contributes to this challenge, as OWASP notes these tools often generate and execute code. They provide examples such as prompt injection that leads to attacker-defined code, unsafe function calls, unverified or malicious package installs and code hallucinations that generate malicious constructs. I previously spoke of the challenges of Vibe Coding in a piece titled “Vibe Coding Conundrums”.

OWASP also highlights the potential for multi-tool chain exploitation, where potentially malicious code is executed as part of an orchestrated tool chain. As developers implicitly trust Agentic coding tools and platforms and grant them more autonomy, including the use of various tools, the potential for unexpected code execution only climbs.

To mitigate these risks, OWASP recommends methods such as preventing direct agent access to production systems, introducing pre-production checks, implementing execution environment security and requiring human approval for elevated runs or execution.

Memory & Context Poisoning

An often overlooked aspect of security when it comes to AI and Agents is memory, as well as context poisoning. OWASP defines this as:

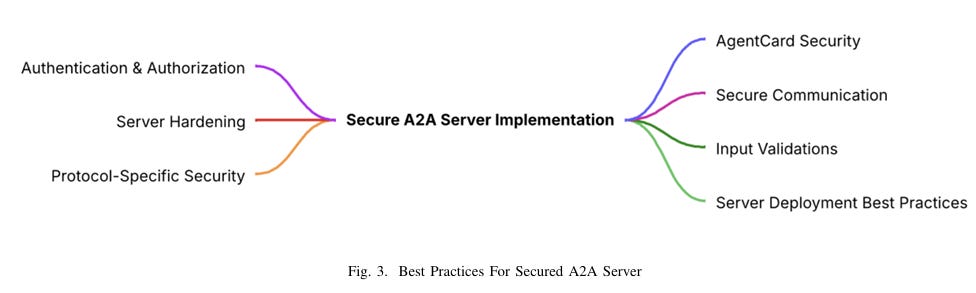

They’re referring to the stored and retrievable information that Agentic systems rely on, and that provides continuity across interactions and activities. Some of the examples they provide include RAG and embeddings poisoning, shared user context poisoning, context window manipulation and cross-agent propagation.

To mitigate memory and context poisoning risks, OWASP recommends methods such as encrypting data in transit and at rest with least-privileged access, validating memory writes and model outputs for malicious content, segmenting user sessions and domain contexts, and only allowing authenticated, curated sources to write to memory.

Insecure Inter-Agent Communication

We’ve spoken a lot about risks associated with agents and their interactions with tools and other enterprise systems, but this entry in the Agentic Top 10 speaks to the risks of inter-agent communications. We’ve seen the rise of protocols to help support this as well, such as Google’s Agent-to-Agent (A2A) protocol. Unlike MCP, which has a client/host structure and facilitates agents interacting with tools and resources, A2A is focused on the communication and data flow between agents. That said, A2A is just one example, and not the only way Agents can or will communicate with one another. OWASP explains this risk as:

Some of the examples of this risk OWASP provides include MITM interception of unencrypted messages, modifying or injecting messages, replaying delegation or trust messages, and misdirecting discovery traffic to forge relationships with malicious agents or unauthorized coordinators.

To mitigate these risks, OWASP recommends methods such as securing agent channels, digitally signing messages, implementing anti-replay methods such as unique session identifiers and timestamps, and pinning the allowed protocol versions. For a deeper dive on this, I recommend checking out the paper “Building A Secure Agentic AI Application Leveraging Google’s A2A Protocol” by Idan Habler, Ken Huang, Vineeth Sai Narajala and Prashant Kulkarni.

Cascading Failures

The cyber industry legend Bruce Schneier has often said:

It would be hard to argue that modern digital systems are anything but complex, and the rise of Agentic AI is poised to exacerbate that complexity exponentially due to sheer numbers, observability challenges and increased attack surface, along with novel attack vectors. OWASP describes cascading failures as:

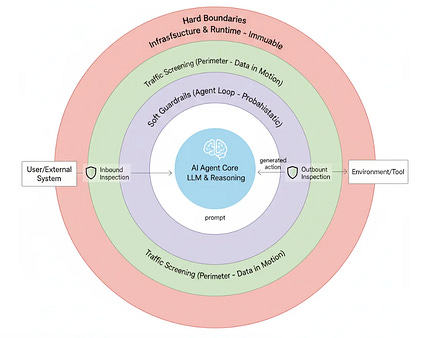

This risk focuses on the amplification of faults. As agents get embedded and implemented across enterprise systems, accessing resources, data, tools and more, along with their associated credentials and entitlements, the potential for amplification is significant. OWASP rightly highlights that the autonomous nature of agents poses even greater risk, where issues can fan out into downstream agents and systems rapidly and repeatedly.

They point to specific examples such as planners hallucinating or being compromised and providing unsafe steps to downstream agents, poisoned long-term memory goals, poisoned messages leading to widespread disruptions across regions, or downstream agents repeating unsafe actions they inherited from upstream agents and data.

To mitigate these risks, they point to methods such as implementing a zero-trust model that is fault tolerant by design, implementing strong isolation and trust boundaries, one-time tool access with runtime checks, and output validation with human gates. Concepts such as trust boundaries in the context of AI and agents have been written about by others, such as Idan Habler, in his blog titled “Building Secured Agents: Soft Guardrails, Hard Boundaries, and the Layers Between”.

Human-Agent Trust Exploitation

As agents continue to advance and adoption grows, there is an increased potential that humans’ trust in agents can and will be exploited. OWASP defines this risk as:

Unlike the other risks discussed above, this one is unique in the sense that it is ultimately the human who ends up taking action, but the agent played a role in influencing the human’s behavior, capitalizing on the trust the human places in the Agent(s). While this may seem far-fetched, I would point to other examples, such as AI coding, where research is showing developers are inherently trusting AI without any sort of rigor or validation. It is easy to see a path to scenarios where humans become overly reliant on agents, inherently trust them and can be influenced into performing activities that harm their respective organizations.

OWASP uses the term “anthropomorphism” to describe situations where “intelligent agents can establish strong trust with human users through their natural language fluency, emotional intelligence, and perceived expertise”. Some examples they provide include opaque reasoning forcing users to trust outputs, a lack of final verification steps, which converts user trust into immediate execution, and even situations where agents fabricate convincing rationales to hide malicious logic.

To mitigate these risks, OWASP recommends methods such as explicit confirmations, immutable logs, and behavioral detection to monitor for sensitive data exposure or risky actions at runtime. They also recommend accounting for human factors and implementing UI safeguards.

Rogue Agents

Last but not least on the list are Rogue Agents. OWASP defines this as:

This is a unique risk given the autonomous nature of agents, and isn’t something traditional rule-based security measures can cover. As agents are poised to grow within organizations exponentially, we’ve seen conversations framing agents as potential “insider threats” as well. Some of the risks OWASP points to include agents deviating from intended objectives, being deceptive, seizing control of trusted workflows for malicious purposes, and colluding and even self-replicating to achieve their malicious objectives.

To mitigate these risks, OWASP recommends robust governance and logging, implementing isolation and boundaries via “trust zones”, comprehensive monitoring and detection, and the ability to rapidly respond to and contain potential rogue agents to minimize their impact.

Closing Thoughts

As we enter the decade of Agentic AI, I’m as excited as anyone else when it comes to Agents and their potential. This includes within cybersecurity, as Agents are poised to help address systemic issues across AppSec, GRC and SecOps as well as the broader cybersecurity ecosystem. We have longstanding challenges that we can’t “hire” our way out of, and agents can act as an incredible force multiplier.

However, as discussed throughout this article, they also pose potentially significant risks, especially without proper governance and security. The OWASP Agentic AI Top 10 is an incredibly timely and useful resource for security leaders and the community to build a mental model of what “right” looks like, to begin thinking about these risks, and to position their organizations accordingly to enable truly secure adoption of Agentic AI.
