Resilient Cyber Newsletter #79

- Chris Hughes from Resilient Cyber <resilientcyber@substack.com>


2025 Cyber Almanac, 2026 AI Security Challenges, Top Agentic Shifts, 2025 CVE Trends, AI-Generated Code Risks & Why CISOs Miss What’s Coming Next

Welcome!

Hello and welcome to Issue #79 of the Resilient Cyber Newsletter. 2026 has picked up at a pace that indicates just how wild a year it is going to be across AppSec, AI, and the cyber market more broadly. We’re living in such an exciting time, with AI’s intersection with cyber being part of its broader impact on software. That’s why I wanted to briefly share a personal note: I recently took on a new role as VP, Security Strategy, at Agentic AI security leader Zenity. None of this will change my Resilient Cyber work, and I will still be striving to bring you some of the industry’s best resources, news, analysis and tools, so no worries there!

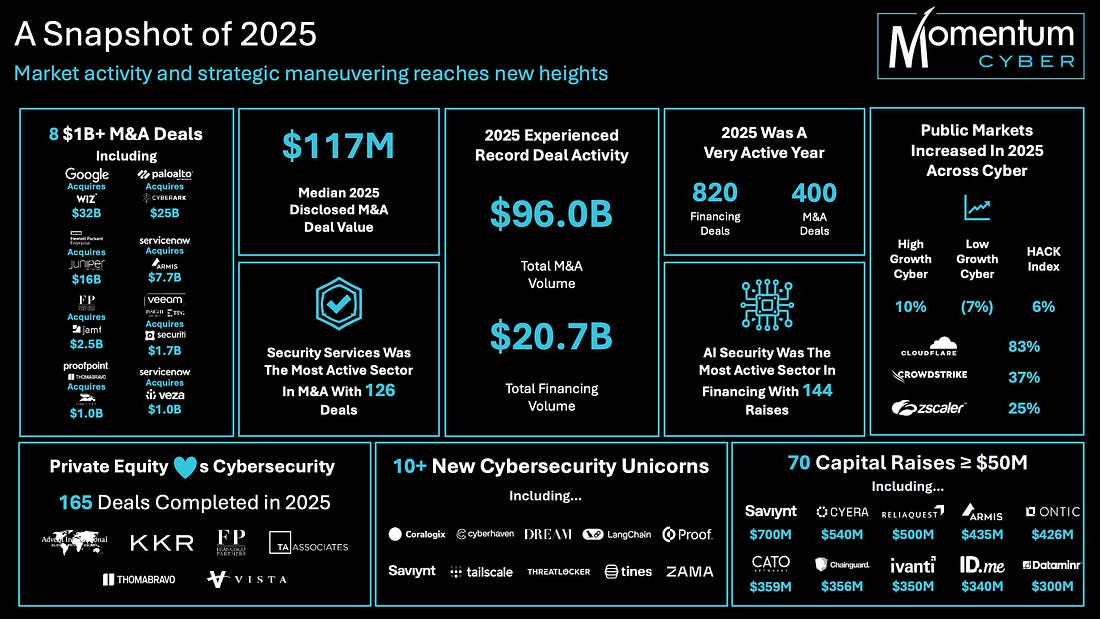

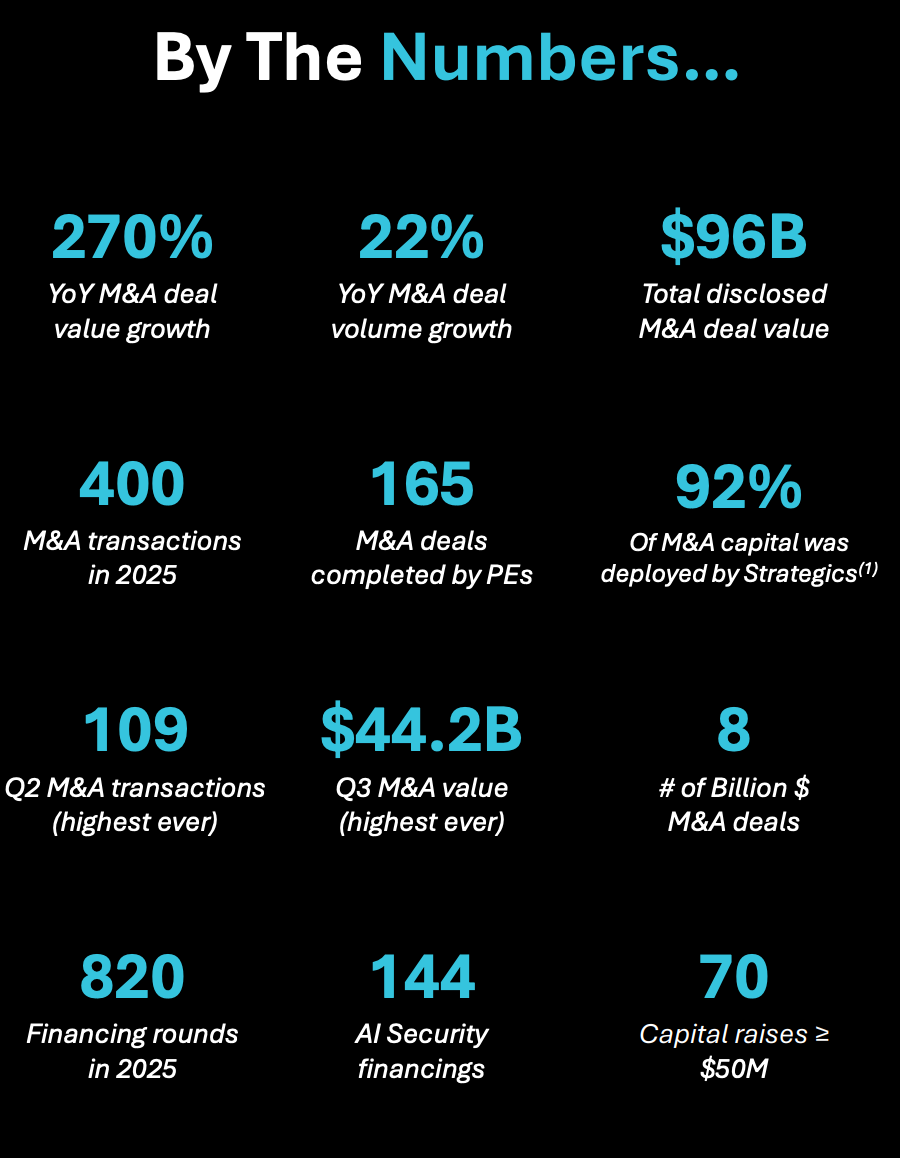

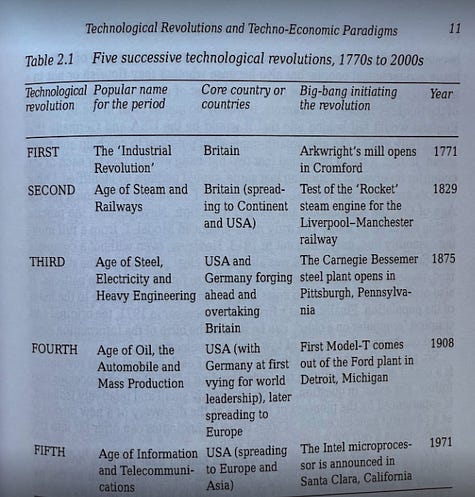

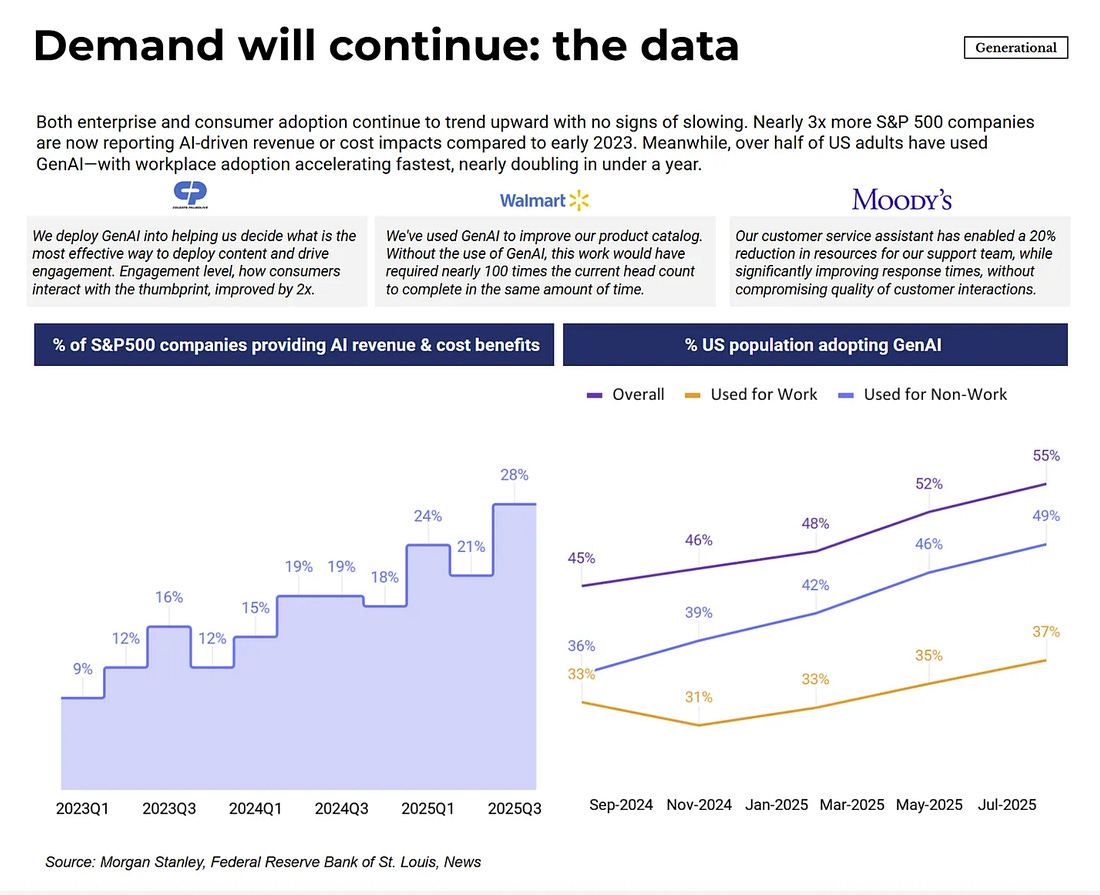

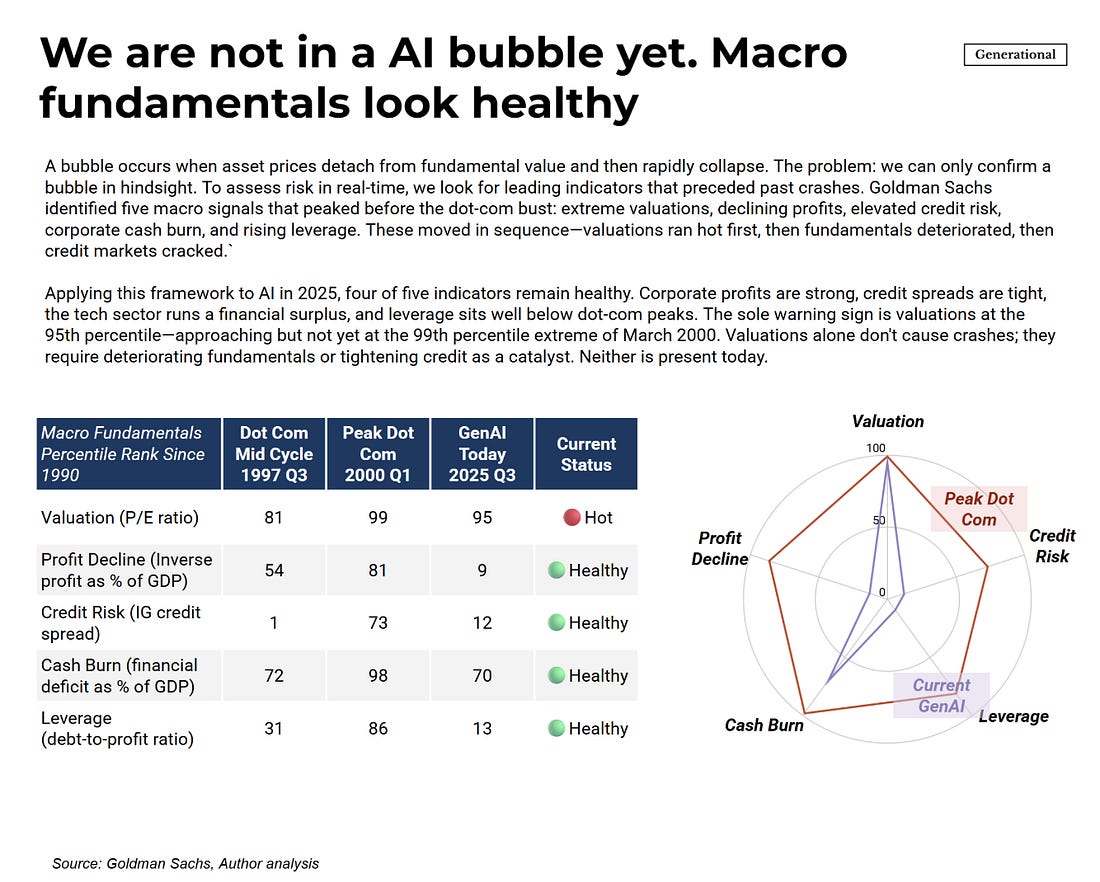

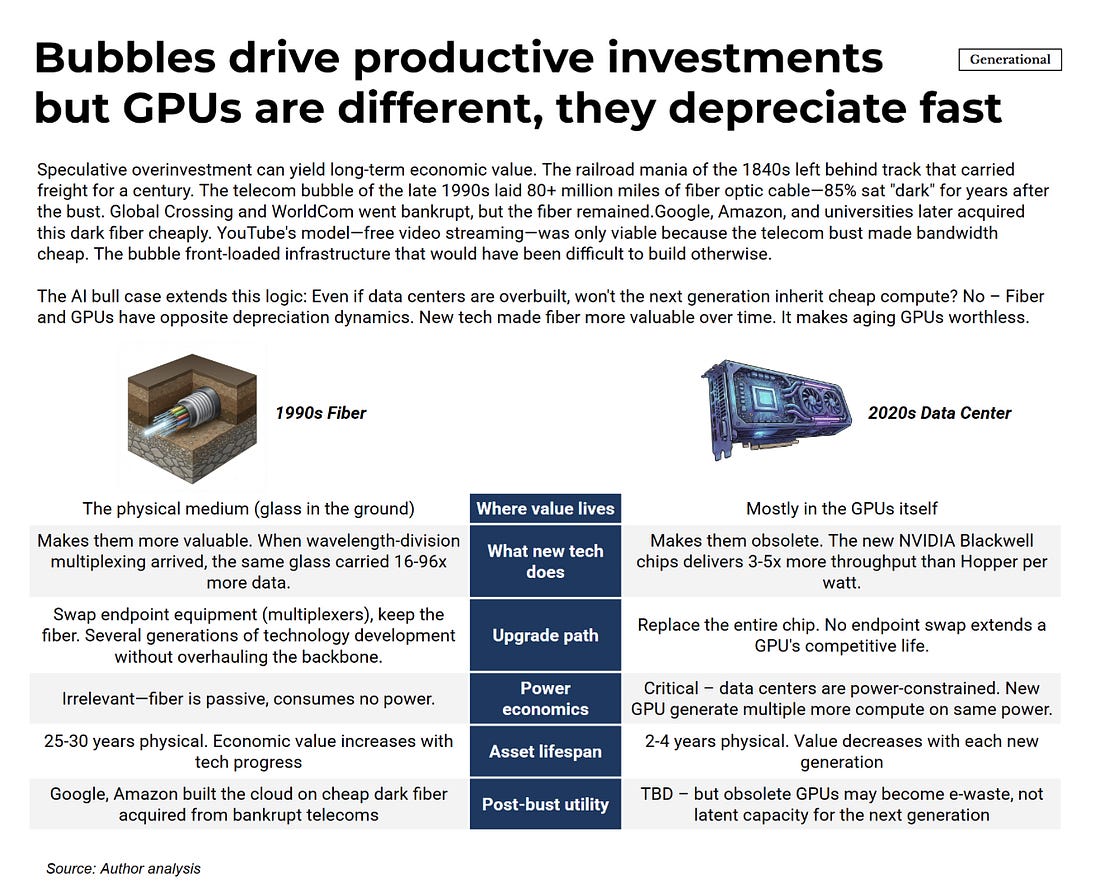

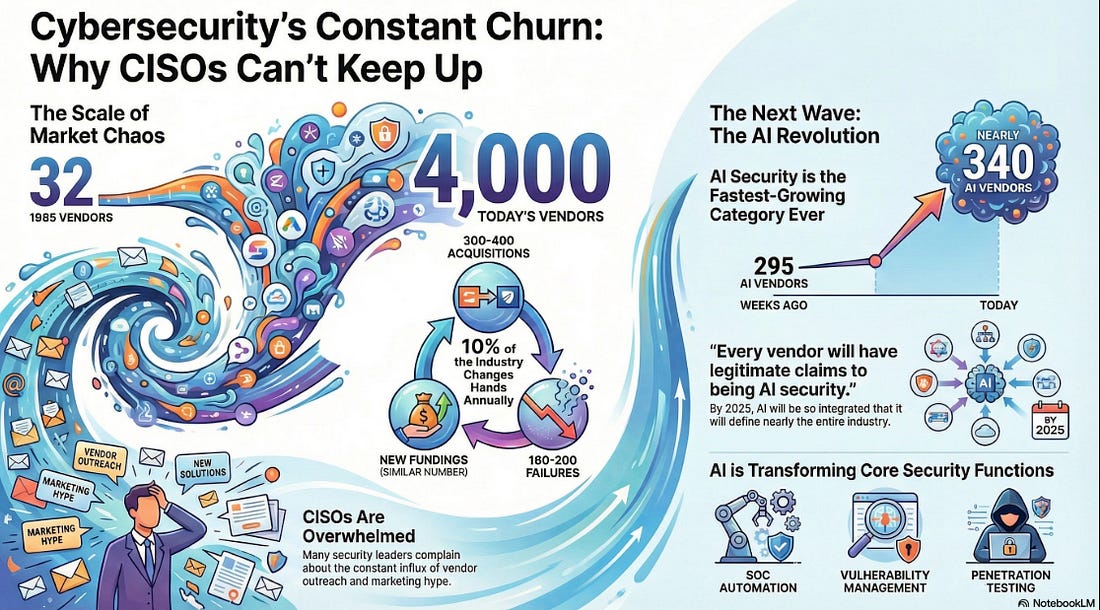

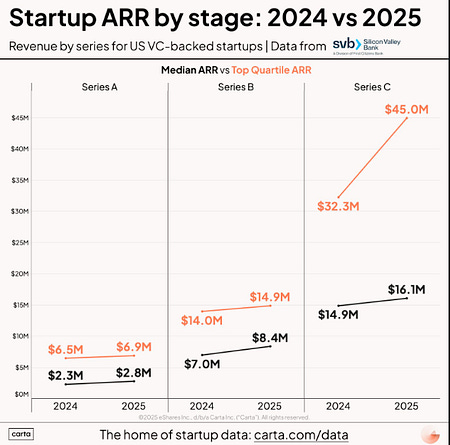

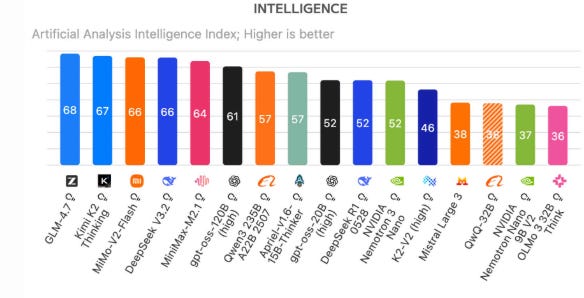

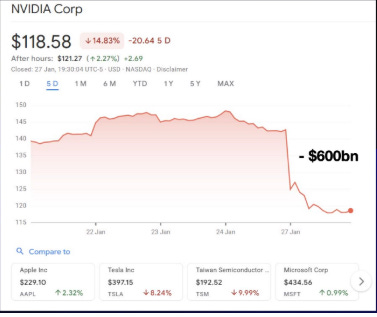

With that out of the way, let’s get down to business with this week’s issue of the Resilient Cyber Newsletter!

Interested in sponsoring an issue of Resilient Cyber? This includes reaching over 31,000 subscribers, ranging from Developers, Engineers and Architects to CISOs/Security Leaders and Business Executives. Reach out below!

OWASP Top 10 for Agentic AI AMA

As we rounded out 2025, the OWASP GenAI Security Project’s Agentic Security Initiative (ASI) published the first-ever OWASP Top 10 for Agentic AI. I've published a deep-dive blog on it, but there is still a lot to discuss.

Cyber Leadership & Market Dynamics

Momentum Cyber’s 2025 Cyber Almanac

A key part of understanding the cybersecurity market is looking at the broader ecosystem: vendors, startups, capital, M&A and more. That’s why so many love Momentum Cyber’s “Cyber Almanac”. The team recently published their 2025 edition, a comprehensive 120+ page breakdown of key findings on the cyber market over the past year. The figures and the insights into capital activity across the cyber ecosystem are excellent. I take issue with how some companies are categorized, but that’s a trivial complaint! See some of their key findings below, and go check out the full report.

Their analysis shows just how active a year 2025 was in terms of deal activity, volume, financing and more, with many recognizable vendors and names involved, as well as a look into the companies shaping the future of cybersecurity. And yes, AI of course played an outsized role, as it is doing well beyond cybersecurity too.

Technological Revolutions & Financial Capital - AI

History doesn't repeat itself, but it does rhyme. That's why I've been digging into Carlota Perez's seminal "Technological Revolutions and Financial Capital". Many state that we're experiencing the next technological revolution with Artificial Intelligence (AI).
When you look at the allocation of capital, the widespread potential of the technology, its unprecedented diffusion across society, the geopolitical implications and more, it is hard to argue otherwise. Although I had never read the book before, it is arguably the best writing I've read on the nature of technological revolutions and their worldwide implications.

How the AI Bubble Will Burst

2025 was dominated by discussions of whether we’re in an AI bubble and, if so, how and when it may burst, what the implications would be, and more. A lot of parallels have been drawn to prior technological waves, such as the ones in the image above from Carlota’s book. This piece from Kenn So of Generational on Substack is one of the most accessible pieces on the topic I’ve found, especially for someone like myself without a background in macroeconomics.

Kenn walks through the five typical factors used to assess bubbles, how the AI technological wave compares, and how the real risk lies in GPU depreciation and in how things are being financed, unlike in prior technology waves. He has some excellent images and charts to help make it understandable as well. If you’re looking for a quick, no-nonsense breakdown of bubble economics tied to AI, this is it.

Palo Alto Networks in Talks to Acquire Koi Security for $400M

By now, if you follow the Resilient Cyber Newsletter, you’ve heard of Koi Security. I routinely share insights from them on malicious extensions and software supply chain attacks. Surprisingly, they are just a one-year-old Israeli startup and are already potentially poised to see a $400M exit to Palo Alto Networks (PANW). Koi is one of the best examples I’ve seen of rapidly establishing industry credibility and brand recognition, thanks to their constant stream of credible and comprehensive threat intelligence and research and the fun branding that makes their content stand out.
It all seems to be culminating in one of the largest cyber platform players looking to add them to the team shortly after they were founded. If true, this is truly incredible execution by the Koi Security team!

The U.S. Looks to Play Offense

I’ve been talking recently about the forthcoming U.S. National Cyber Strategy (NCS) and how it will focus on going on the offensive, rather than strictly playing defense as the U.S. historically has done (at least publicly). This seems to be playing out in other realms too, given the recent capture of the Venezuelan leader and talks of the U.S. acquiring Greenland. Nonetheless, in the cyber world, groups like the Aspen Institute, a think tank focused on various topics including cyber, recently penned a piece discussing how they will be helping shape U.S. policy and offensive cyber efforts.

xAI Raises $20B (with a B) Series E

The massive competition among the frontier labs and model providers continues to heat up, as Elon Musk’s xAI announced a $20B Series E. The round exceeded their $15B target, and the announcement focuses on building advanced AI. They highlight key initiatives, including data center buildouts with Colossus I and II, ending 2025 with over one million H100 equivalents. They also cite efforts such as the Grok 4 series, multimodal capabilities and more as they push towards Grok 5.

Why CISOs Miss What’s Coming Next

To say the role of the CISO is daunting is an understatement. Not only are you dealing with daily firefighting, fighting for budget and headcount, incidents, and internal politics, but you often need to keep up with the latest vendors, products and innovations as well. My friends over at CISO Tradecraft published a cool piece documenting how the cyber industry went from roughly 32 vendors in 1985 to over 4,000 and counting now, with an astounding 11,400+ products across them.
To produce the figures, they chatted with longtime industry analyst Richard Stiennon, who stated that 10% of the cyber industry changes hands every year via M&A and similar activity. The piece goes on to discuss the challenge and some of the methods CISOs can use to keep pace with the latest products, capabilities and trends that may matter as they build out their security tech stack.

Startup ARR by Stage: 2024 vs. 2025

We’ve seen the median ARR creep up over the last 4-5 years, and Peter Walker, Head of Insights at Carta, laid it out in a recent post. He demonstrates how, from 2021 to 2025, the median ARR across Series A-C has crept up, with the largest jump being at Series C. Peter also discusses how VCs look at growth rates, how teams define ARR, and more. These are key things for founding teams and builders to keep in mind. Speaking of Peter, I will be doing a Resilient Cyber episode with him next week, so keep an eye out for that!

AI

AI Security Challenges 2026

To say keeping up with the AI Security space in 2025 was a challenge would be an understatement. That’s why I found this piece from Hiroki Akamatsu to be so useful. They comprehensively lay out the security challenges of AI heading into 2026 while walking through the major advancements and gaps of 2025 that we will continue to wrestle with heading into the new year, as well as existing mitigations, including:

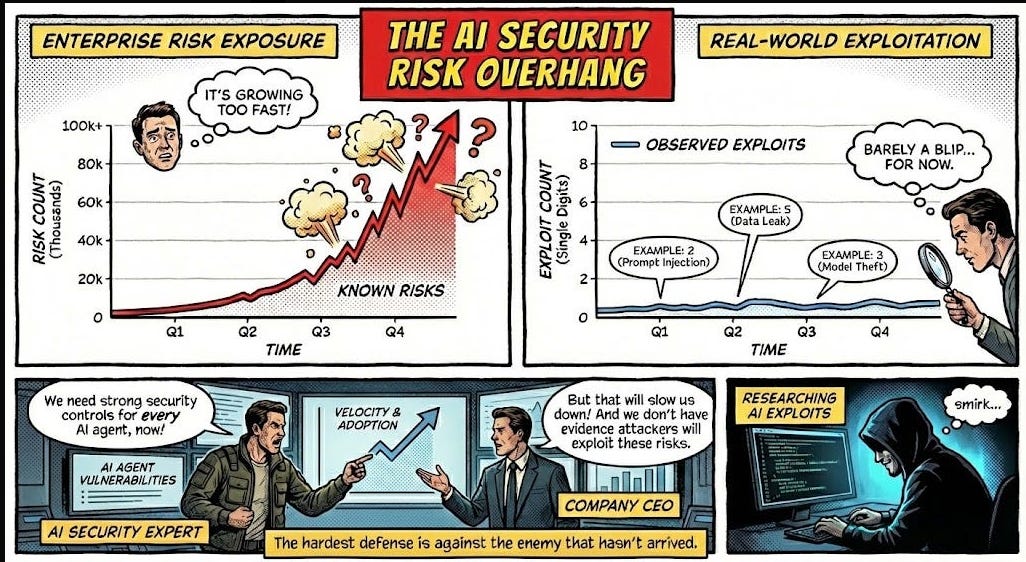

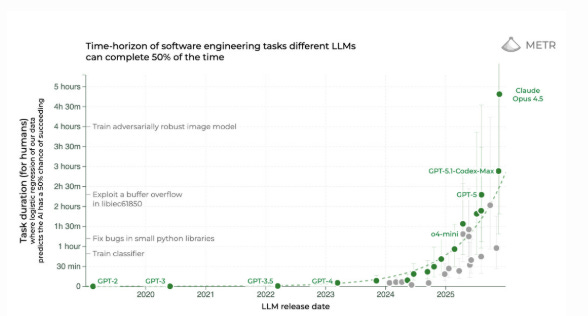

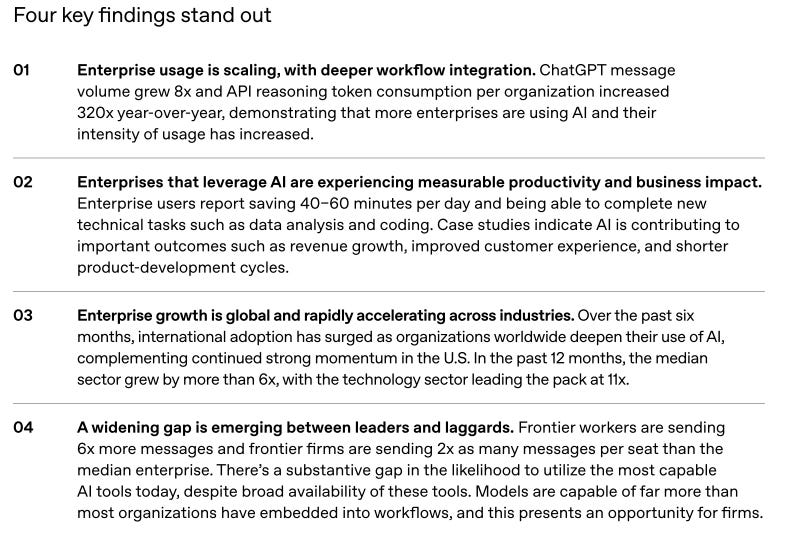

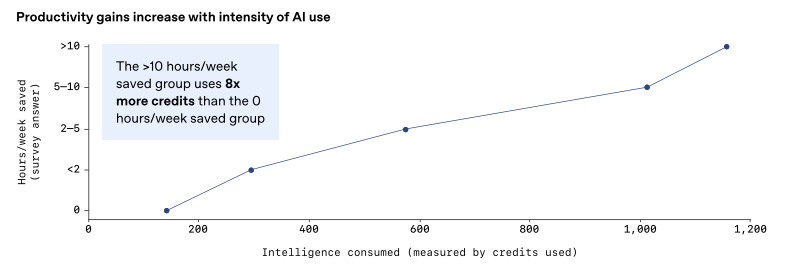

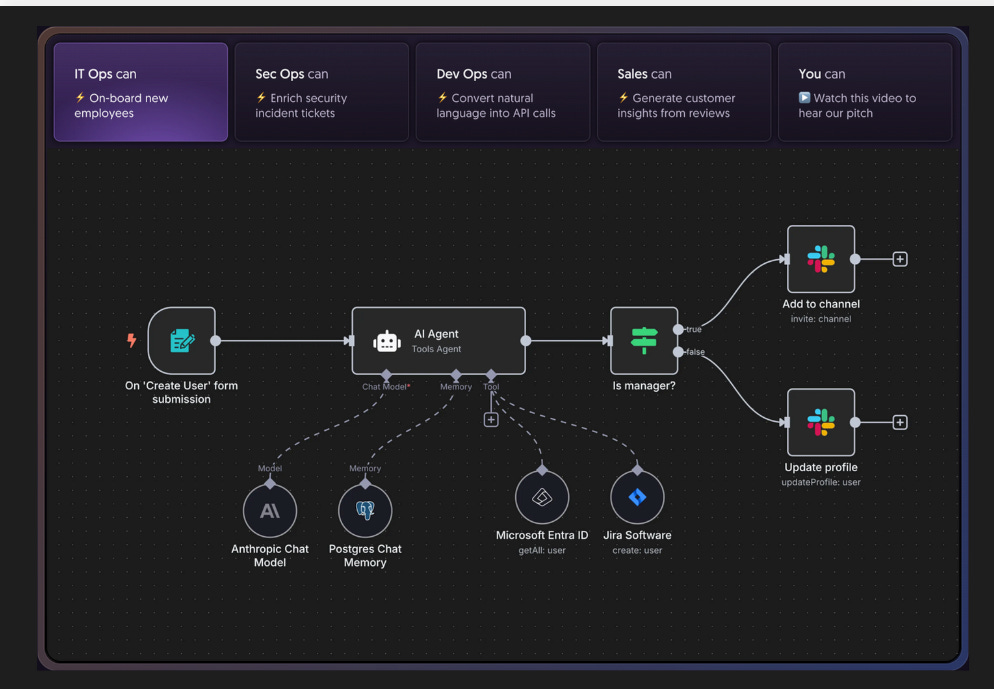

How to defend an exploding AI attack surface when the attackers haven’t shown up (yet)

2025 was dominated by discussions about risks associated with AI, and 2026 will be no different. And for good reason: as an industry, we’ve learned our lessons about bolting on rather than building in security, and many have looked to raise flags early about the potential risks and threats AI introduces to our systems and organizations. However, despite all of the conversations about AI-native and novel risks such as model poisoning, many of them haven’t actually materialized yet on the scale of things such as ransomware.

At least that is the case Joshua Saxe makes in this excellent blog, where he discusses the “AI Security Risk Overhang”, as he calls it. I tend to agree, and this overhang has implications for AI security startups and the significant capital allocations associated with them. Many who have fixated on novel risks that haven't materialized may face headwinds as a result. It is much more likely that the real challenges and incidents will be tied to age-old problems, but associated with the new technologies, platforms and tools.

Top AI/Agentic Shifts (for Security) 2025

There was a lot to try and keep up with in 2025 when it comes to AI and Agentic Security. I found this LinkedIn blog from Steve Giguere to be a good recap of some of the major events and their security implications. He stated that 2024 was the year we had AI that answers, while 2025 became the year that AI does, via agents, MCP and the ability to use tools and take actions. He covered key activities such as ChatGPT’s “agent mode”, Claude Code, Meta’s multimodal Llama 4 and OWASP’s Agentic AI Top 10 (which I had a chance to contribute to).

2025: The Year in LLMs

If you're like me, you try your best to keep up with all things AI, but damn if it isn't hard.
LLMs alone saw significant advancements, evolutions and news that spanned not just the technical but economics, politics and more. This piece from Simon Willison is incredibly comprehensive, covers the major trends in 2025 around LLMs, and is a great primer as we kick off 2026.

The State of Enterprise AI

OpenAI recently shared their 2025 State of Enterprise AI report, and there are a lot of great insights here, including from the security perspective. At a high level, they report exponential increases in enterprise usage, with ChatGPT messages growing 8x YoY and a 320x increase in reasoning token consumption.

Ni8mare - Unauthenticated RCE in n8n

As we continue to see agents proliferate exponentially along with supporting frameworks and tools, we will inevitably see impactful vulnerabilities grow alongside them. This example from the team at Cyera, dubbed “Ni8mare”, is a 10.0-severity CVE, officially CVE-2026-21858. It allows attackers to take over locally deployed instances of n8n and impacts an estimated 100,000 servers globally, with users needing to upgrade to version 1.121.0 or later to fix it.

For those unfamiliar with n8n, it is a low-code/no-code (LCNC) option for agentic workflows, with drag-and-drop interfaces and many integrations, making it accessible not just to engineers and developers but also to what are often called “non-technical” or “citizen” developers. See the image below for an example from Cyera:

AppSec

Prioritization Is the Only Way To Survive 2026

I recently shared the CVE recap from Jerry Gamblin, one of my favorite follows for vulnerability management research. He recently published a brief graphic demonstrating the growth of CVEs in 2025. As he points out, the volume of CVEs in 2025 blew up to 48k+, driven not just by the usual operating system findings but by third-party plugins and open source as well.

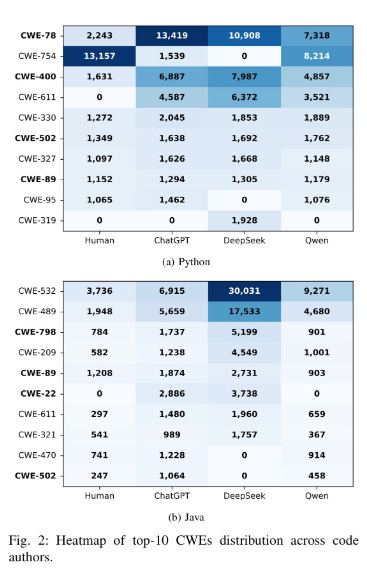

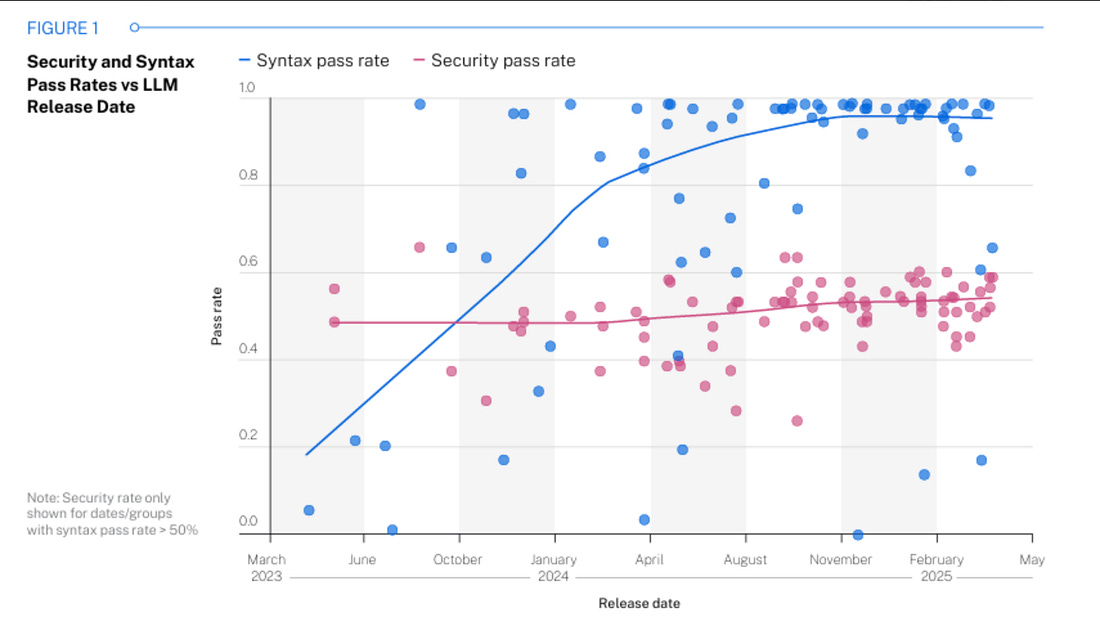

AI-generated Code and Systemic Security Issues

As 2025 demonstrated, we’re well on our way to AI both fundamentally transforming modern software development and writing outsized portions of software in the future. This is amazing news from the productivity and velocity perspective, but from the security perspective, problems persist.

The Upsides and Downsides of LLM-Generated Code

I’ve been writing and speaking a ton about both the productivity benefits of AI coding and the inherent risks involved. In fact, Ken Huang and I will have something coming for the community this year diving really deep into the trend of “Vibe Coding” as well as broader AI-driven development. Veracode is a team that has been putting out helpful studies and insights into LLM-generated code and its risks, and I recently covered their 2025 GenAI Code Security Report in my article “Fast and Flawed”.

This latest talk is from industry legend Chris Wysopal. He highlights benefits such as accelerated coding velocity, syntactical correctness and even remediation capabilities once vulnerabilities are identified. However, as expected, Chris also points out that we aren’t seeing improvements in code security, something academic research and publications support. He also notes that the training data (e.g. large open source data sets) contains implicit vulnerabilities that get perpetuated, and that we’re seeing rapid rises in vulnerability volume, a problem the industry couldn’t deal with prior to AI, let alone after.

As I have been arguing, to fix these systemic issues, cyber will need to leverage the same tool as developers and attackers, AI itself, to try and get out of the hole we’re in, which is only getting deeper in terms of vulnerability velocity and backlog volume.
