Prompting isn’t the future. Creating is.

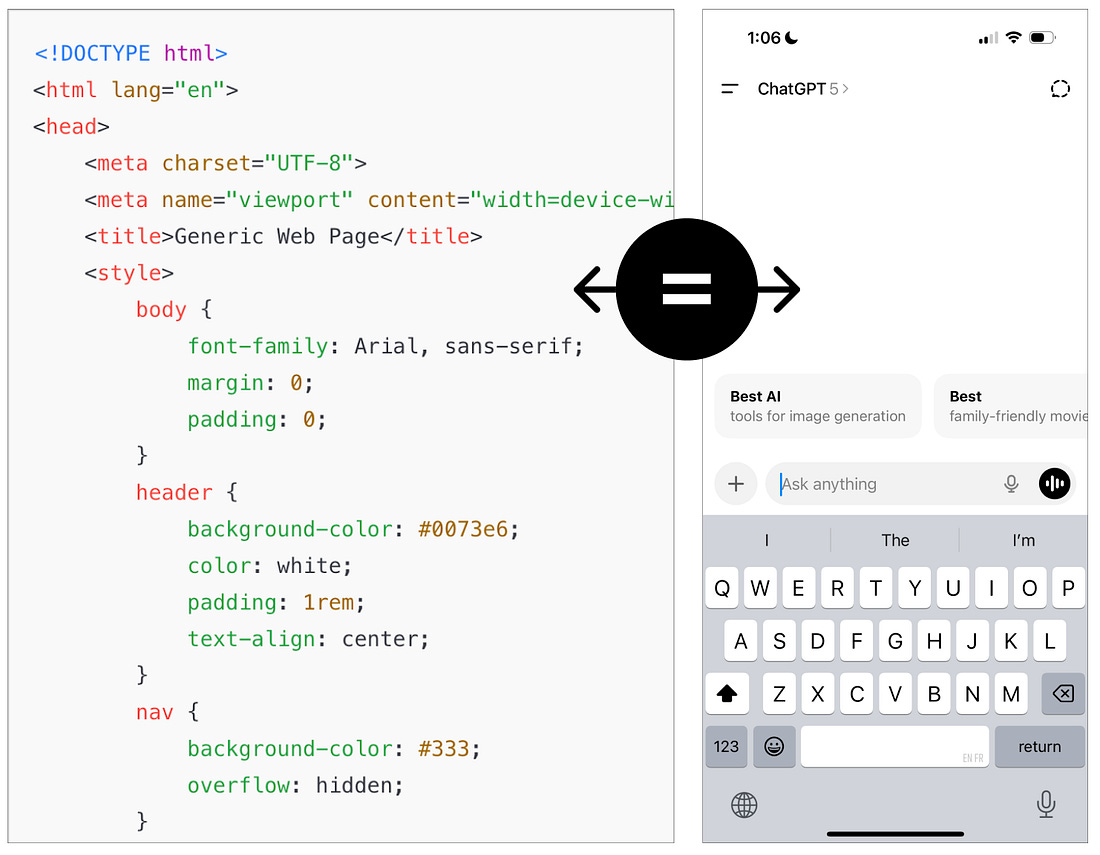

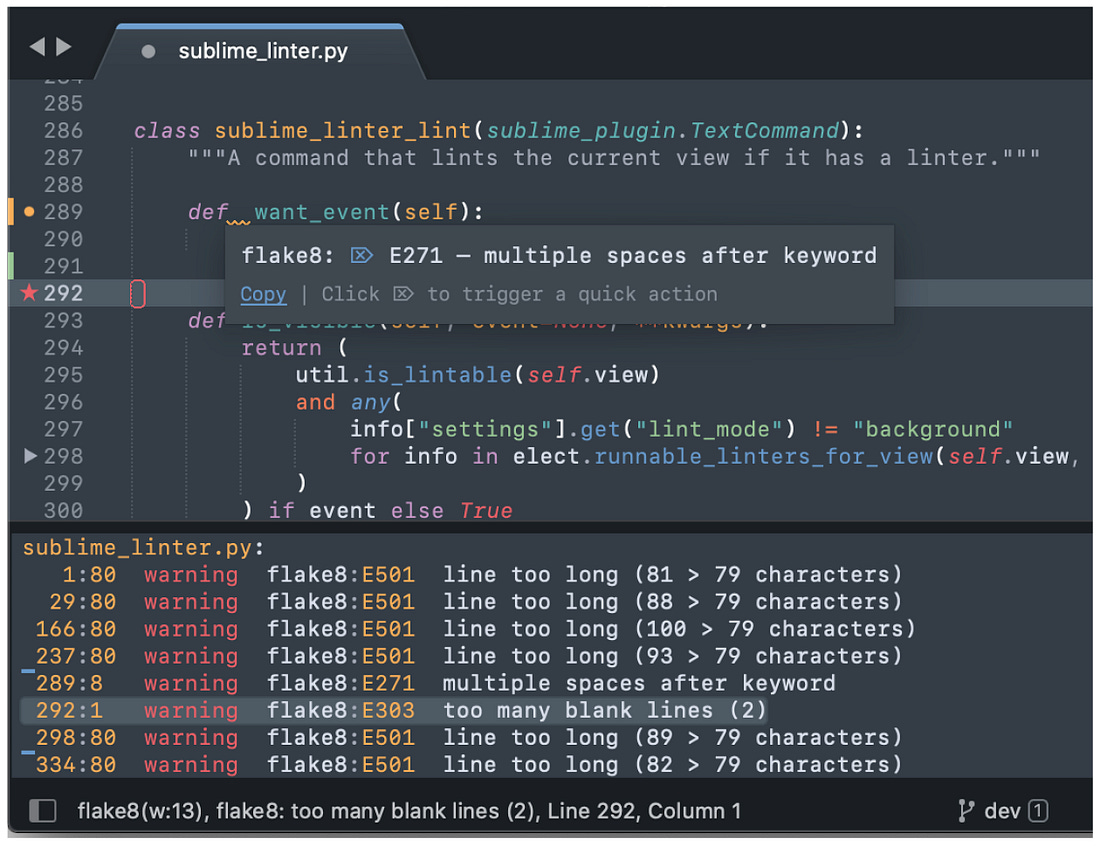

Writing prompts today is like writing HTML in the mid-90s: AI text prompting is still in its pre-WYSIWYG era. Chat seems simple and intuitive, but it still takes repetition, tenacity, and nuanced knowledge to get good results. So while anyone can write a text prompt, most people still experience a massive gap between what they can imagine and what they can create.

Why isn’t conversational UI the future?

Conversational UI is a critical part of the future. It is powerful, expressive, and unconstrained. But just like HTML, it takes patience and skill to master, and that’s not for everyone. Experts will always find raw text the most powerful and versatile option, just as many developers prefer to hand-code HTML and CSS. But history shows that the masses adopt tools that make creation faster, easier, and more intuitive.

Why shouldn’t people just get better at text prompting?

People will get better at text prompting, just as we all got better at writing Google queries. But the real opportunity isn’t just training people to “prompt harder.” It’s designing UIs that guide and teach people in real time, like linting in code editors, where hints and feedback help you learn by doing. We are already seeing glimpses of this:

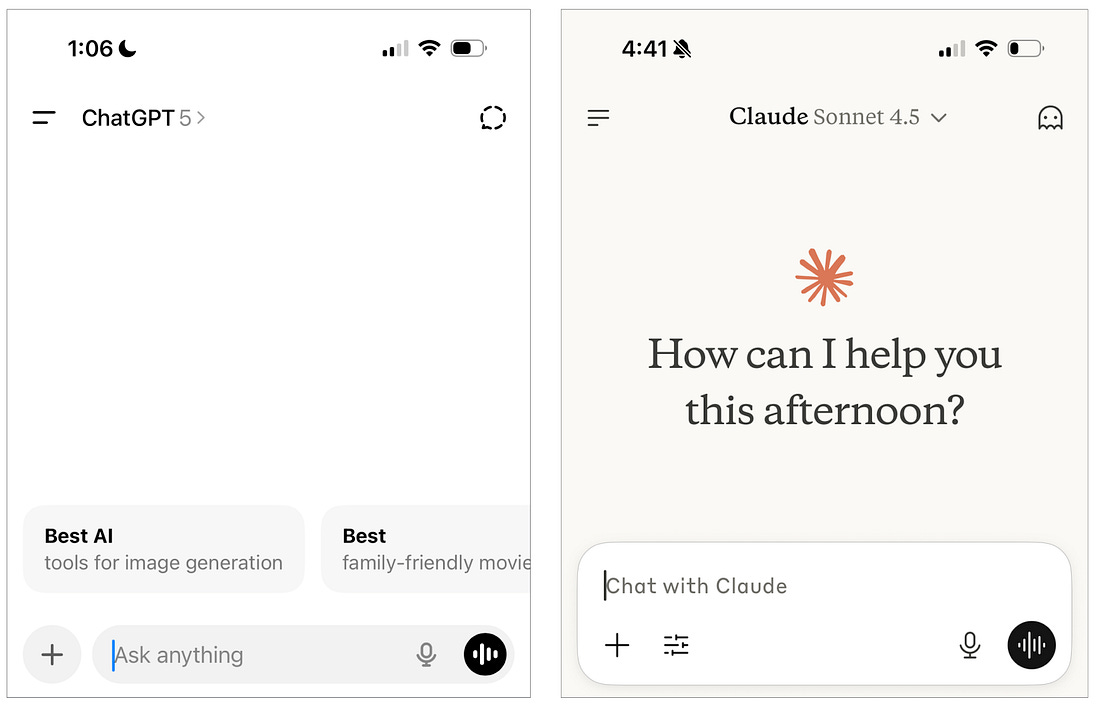

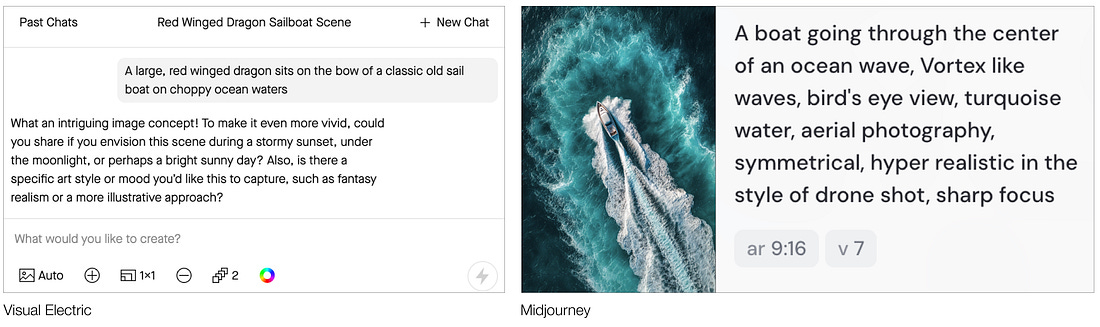

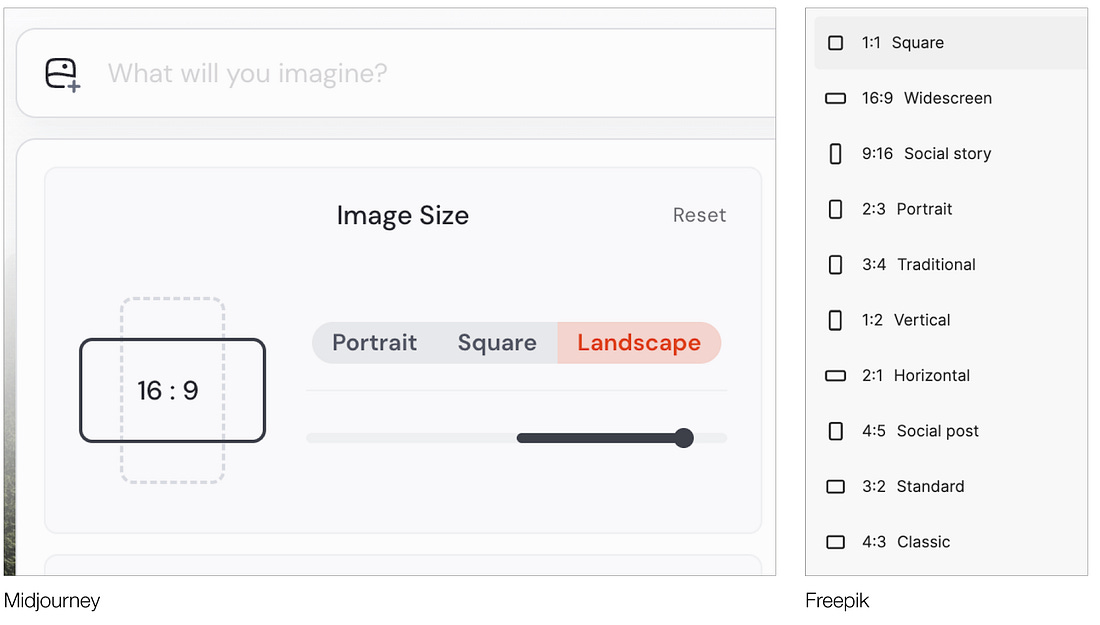

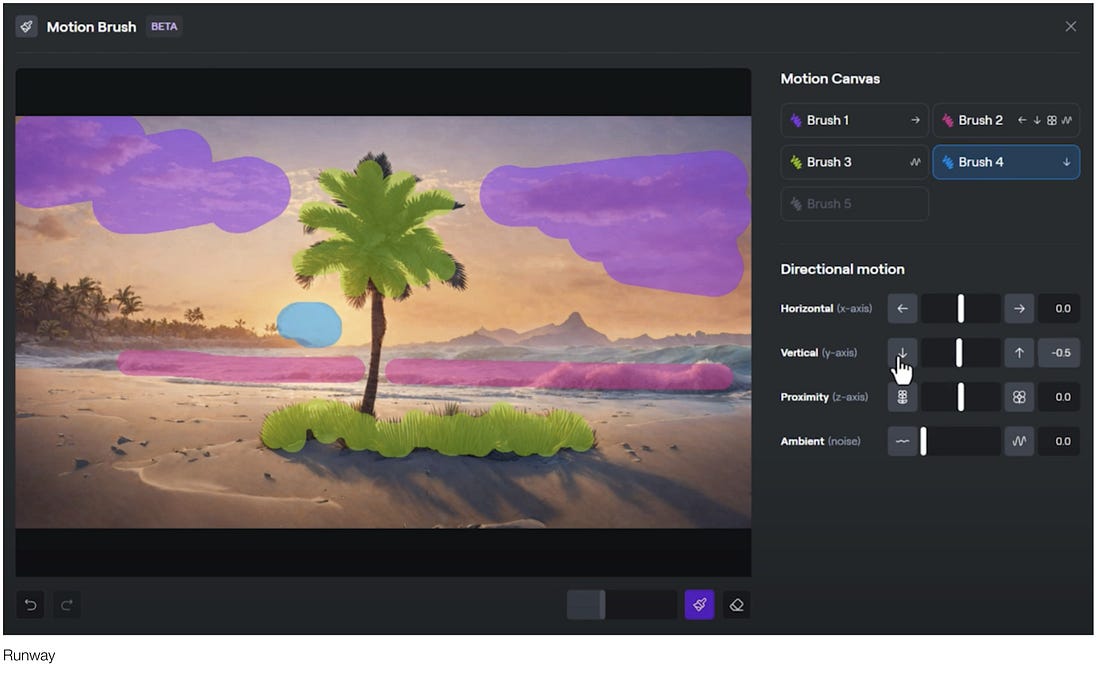

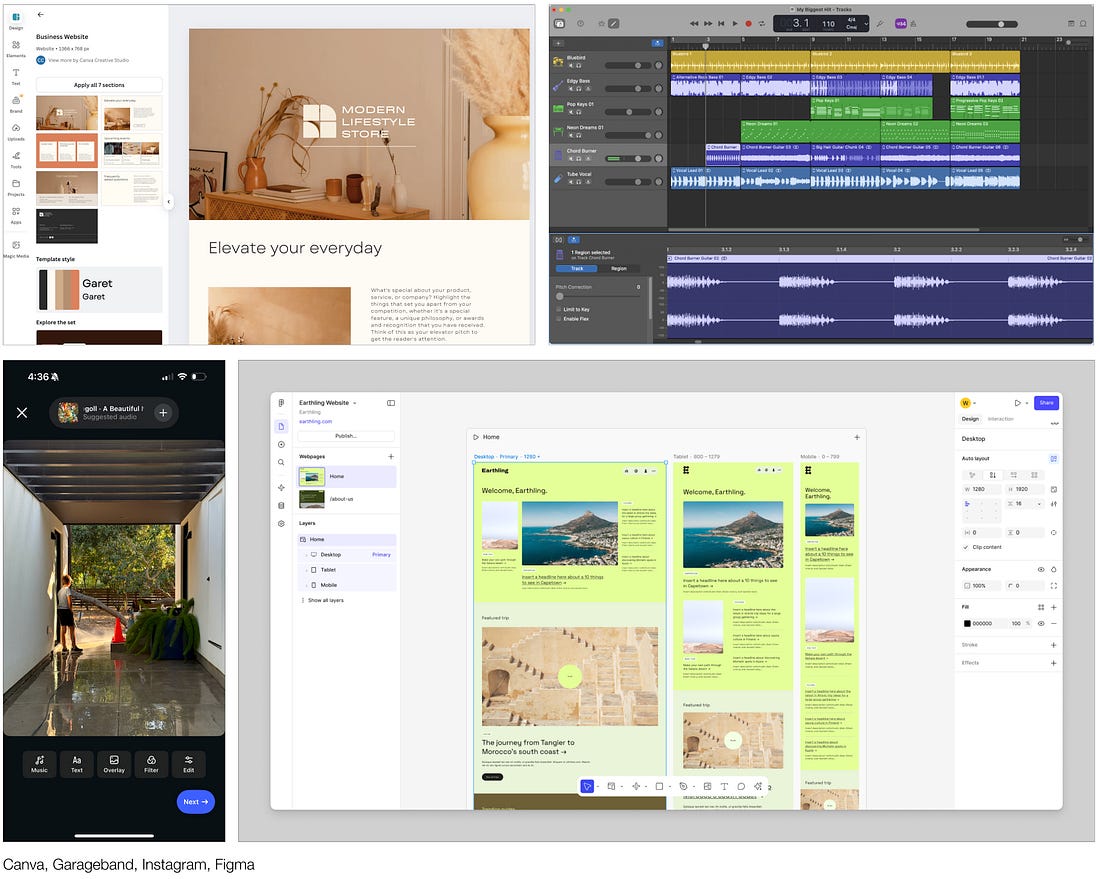

Still, not all tasks fit neatly into a chat box. Try describing a specific image in your head with words alone. It’s hard to know where to start or what to include, and it feels clumsy. This is where combining open-ended inputs with structured controls matters. An open text box gives freedom; structured inputs give precision. Together, they put people firmly in the driver’s seat.

What would a better GUI look like?

This is where things get exciting. Pieces of multimodal UIs are emerging. Rather than treating chat as the sole solution for everything, products are starting to lift some of the cognitive load and help people generate better outputs. This is especially important when using AI to co-create something (like an image, an app, or a spreadsheet analysis).

At one end of the spectrum, we have pure chat UIs like ChatGPT or Claude. These are simple and open-ended, but difficult to steer.

Next, some products guide prompt construction in real time. Visual Electric asks clarifying questions through the chat interface, and Midjourney offers reverse prompting for previously generated images.

Beyond that, UIs start to combine open prompts with structured controls (like knobs, sliders, and dropdowns). For example, Midjourney and Freepik let users select the aspect ratio from a set of options. Advanced users can still type “--ar 16:9” into their prompt, but for most people, seeing the available options helps them understand what is possible and quickly pick one.

Eventually, we get to prompts paired with direct manipulation. People can sketch, select, drag, or edit content directly. For example, instead of just describing “a red sun fading on the horizon,” a user can use Runway’s Multi Motion Brush to specify exactly how the sun should fade.

All of these examples start with an unstructured text field, but they provide increasingly sophisticated controls to help users steer AI outputs toward what they envisioned.
Where to next?

The real breakthrough won’t come from smarter chat boxes. It will come when prompting feels like play: when you can sketch, drag, remix, and layer ideas the way you do in Figma or GarageBand. That’s when AI stops being a command line and starts being a true creative medium. Just as WYSIWYG unlocked the web, new interfaces will unlock AI.