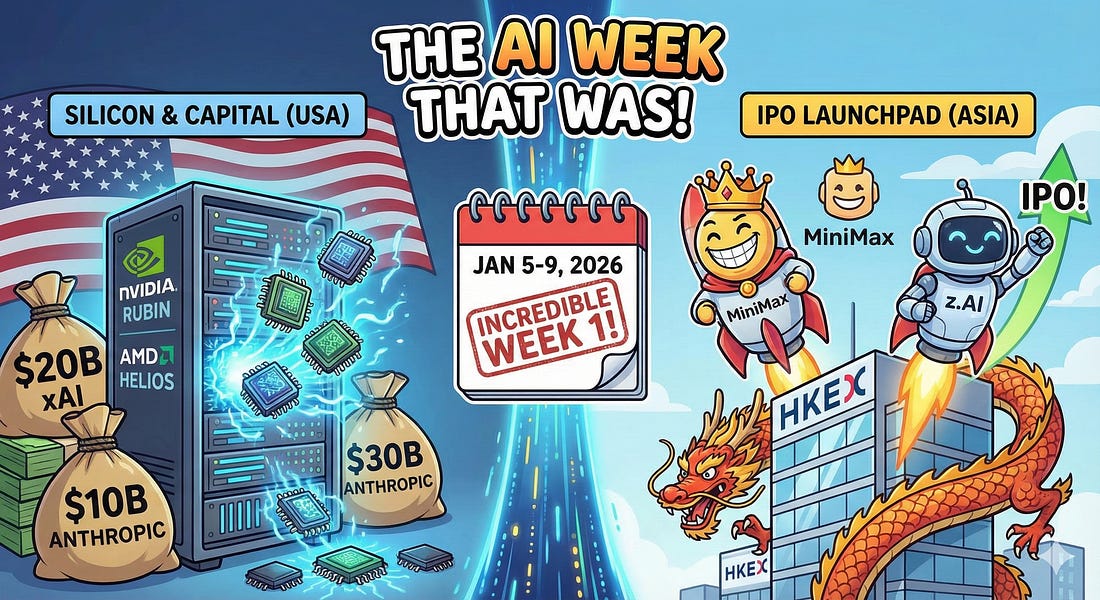

The Sequence Radar #787: Rubin, Raises, and Returns: 2026 Starts Fast

Was this email forwarded to you? Sign up here The Sequence Radar #787: Rubin, Raises, and Returns: 2026 Starts FastIncredible first week of the year.Next Week in The Sequence:Our series about synthetic data generation continues with a quick exploration of the top frameworks in the space( you cant miss that). The AI of the week discusses MIT’s crazy paper about recurvise language models that everyone is talking about. The opinion section will discuss how AI evaluations need to move from static to dynamic based on environments. Subscribe and don’t miss out:📝 Editorial: Rubin, Raises, and Returns: 2026 Starts FastThe first full week of 2026 has been incredibly active, marked by a density of significant developments that sets a high bar for the rest of the year. From Las Vegas to Hong Kong, we saw a simultaneous rollout of critical infrastructure and financial milestones. The news cycle was dominated by major hardware reveals at CES, substantial capital injections for US labs, and the first public market tests for China’s AI startups. Rather than signaling a shift in direction, the events of this week underscore the sheer velocity and scale at which the ecosystem is currently operating. Here is the signal in the noise. 1. The Silicon Wars: From Chips to “AI Factories”At CES 2026, the pretense that the GPU is a standalone component finally ended. NVIDIA unveiled the Rubin platform, and the headline isn’t the chip itself, but the architecture. Jensen Huang’s team introduced the Rubin GPU (featuring 288GB of HBM4 memory) paired with the new Vera CPU, designed to function not as discrete units but as a single “AI supercomputer in a rack.” With a claimed 5x jump in inference speed over Blackwell, NVIDIA is pushing the “rack-scale” paradigm (NVL72) as the new unit of compute. AMD, however, refused to play second fiddle. Lisa Su announced the “Helios” platform, a rack-scale answer delivering 3 AI exaflops, alongside the consumer-focused Ryzen AI 400 series (boasting 60 TOPS). While NVIDIA dominates the data center training narrative, AMD’s aggressive push into “yotta-scale” computing and local AI inference signals that the hardware moat is being contested on both the jagged edge of supercomputing and the localized edge of consumer devices. 2. The Capital Cannonball: $30B in 48 HoursThe numbers are becoming abstract. xAI confirmed a massive $20 billion Series E on Tuesday, fueling its “Colossus” cluster ambitions. Not to be outdone, Anthropic is reportedly closing a $10 billion round at a staggering $350 billion valuation—a figure that suggests the market now views them not just as a model builder, but as a foundational utility for the global economy. The timing is not coincidental. As training costs for GPT-6/Claude-5 class models approach the $10B mark, the table stakes for staying in the frontier model race have effectively priced out everyone but the hyper-scalers and the sovereign-backed entities. Anthropic’s new partnership with Allianz (announced Friday) further cements this transition from research lab to enterprise infrastructure. 3. The Dragon Rises: The Hong Kong Litmus TestWhile the US fights the private capital war, China’s AI giants are testing the public markets. This week marked a historic pivot with the IPOs of MiniMax and z.AI (Zhipu AI) in Hong Kong. The results were electrifying. z.AI, the creator of the GLM models, debuted Thursday with a solid performance, raising ~$558M and proving that open-weight model strategies can find public market validation. But MiniMax stole the show on Friday. The “Chinese Character.AI” saw its stock nearly double on day one, rocketing to a valuation over $13 billion. This is a critical signal. For years, the question has been whether China’s application-layer AI companies could exit. MiniMax’s explosive debut answers that with a resounding “yes,” suggesting that while US firms focus on AGI infrastructure, Chinese firms are finding faster routes to monetization and liquidity in consumer apps. The VerdictWe are witnessing a bifurcation of the AI ecosystem. In the West, the focus is on capital-intensive “heavy industry”—building the god-like infrastructure required for AGI. In the East, the focus has shifted rapidly to public liquidity and consumer application dominance. 2026 has barely started, and the rules of the game are starting to change. 🔎 AI ResearchRECURSIVE LANGUAGE MODELS, MIT CSAIL The authors propose Recursive Language Models (RLMs), a general inference strategy that treats long prompts as an external environment, enabling an LLM to programmatically manage and recursively process context through a Python REPL. This approach allows models to handle inputs vastly exceeding their native context windows and dramatically outperforms existing long-context methods on diverse tasks while keeping costs comparable. WebGym: Scaling Training Environments for Visual Web Agents with Realistic Tasks, Microsoft, UIUC, CMU WebGym is introduced as a large-scale training environment for visual web agents, offering nearly 300,000 diverse tasks and a high-speed asynchronous rollout system for efficient reinforcement learning. The study demonstrates that training on this extensive dataset allows a standard vision-language model to significantly outperform proprietary models like GPT-4o on unseen web tasks. Agentic Rubrics as Contextual Verifiers for SWE Agents, Scale AI This paper introduces Agentic Rubrics, a verification method where an expert agent explores a code repository to generate context-specific checklists for grading candidate software patches without requiring code execution. Experiments on SWE-Bench Verified show that this approach yields scalable, interpretable verification signals that outperform traditional execution-free baselines and align closely with ground-truth test results. Token-Level LLM Collaboration via Fusion Route, Meta AI, Carnegie Mellon University, University of Chicago, University of Maryland: The authors propose FUSION ROUTE, a token-level collaboration framework that uses a lightweight router to dynamically select the most suitable expert model at each decoding step while simultaneously providing complementary logits to refine the output. This approach addresses the theoretical limitations of pure expert selection and is shown to outperform existing collaboration and model-merging baselines across diverse benchmarks in mathematics, coding, and instruction following. GDPO: Group reward-Decoupled Normalization Policy Optimization for Multi-reward RL Optimization, NVIDIA, HKUST: This paper identifies a “reward collapse” issue in Group Relative Policy Optimization (GRPO) where distinct combinations of multiple rewards result in identical advantage values, reducing the resolution of training signals. To resolve this, the authors introduce GDPO, which decouples the normalization of individual rewards to preserve their relative differences, leading to improved convergence and stability in multi-objective tasks like tool calling and mathematical reasoning. VideoAuto-R1: Video Auto Reasoning via Thinking Once, Answering Twice, Meta AI, KAUST: The authors introduce VideoAuto-R1, a video understanding framework that employs a "reason-when-necessary" strategy by generating an initial direct answer and only proceeding to complex Chain-of-Thought reasoning if confidence is low. This approach allows the model to achieve state-of-the-art performance on reasoning-heavy benchmarks while significantly reducing computational costs and latency on simpler perception tasks. 🤖 AI Tech ReleasesLFM2.5Liquid AI released LFM2.5, their next wave of on-device AI models. ChatGPT HealthOpenAI launched ChatGPT Health, a dedicated space for conversations about your health. 📡AI Radar

You’re on the free list for TheSequence Scope and TheSequence Chat. For the full experience, become a paying subscriber to TheSequence Edge. Trusted by thousands of subscribers from the leading AI labs and universities. |

Similar newsletters

There are other similar shared emails that you might be interested in: