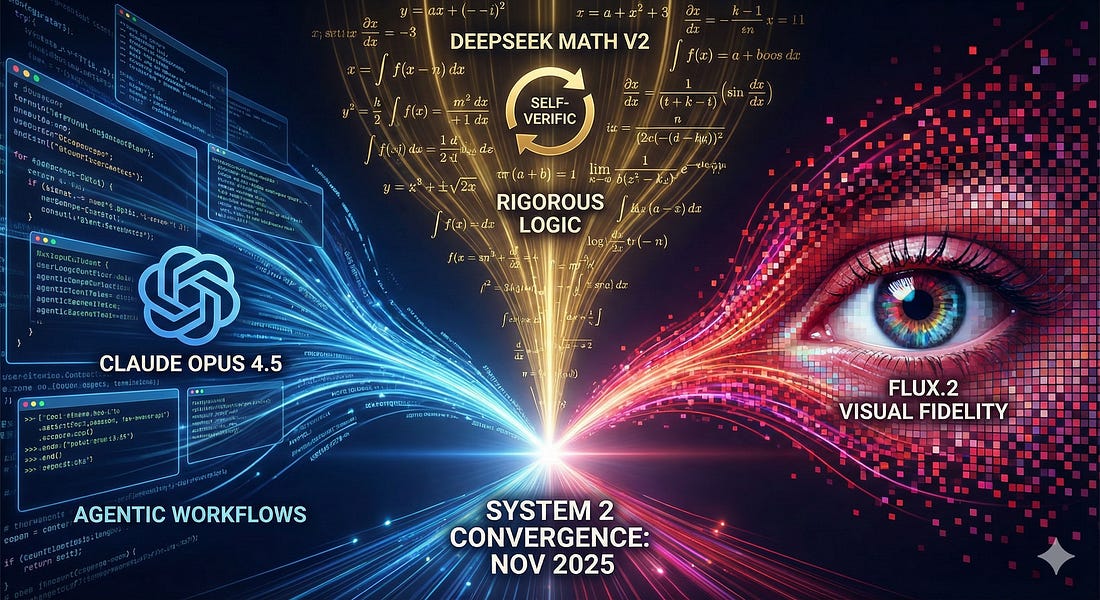

The Sequence Radar #763: Last Week AI Trifecta: Opus 4.5, DeepSeek Math, and FLUX.2

Definitely a week about model releases.

Next Week in The Sequence:

Our series about synthetic data continues with an intro to one of the most effective and yet overlooked methods in the space: rephrasing. The AI of the week dives into Claude Opus 4.5, of course. In the opinion section, we are going to explore the thesis of whether AI agents require a new internet.

📝 Editorial: Last Week AI Trifecta: Opus 4.5, DeepSeek Math, and FLUX.2

The pace of AI development is picking up, and frankly, this week has been a bit of a blur. We’re seeing the gradients of progress steepen in real time. I’ve been playing around with the new releases (Claude Opus 4.5, DeepSeek Math V2, and the new FLUX.2 from Black Forest Labs), and the “feeling” of using these models is distinct. It’s no longer just about scaling laws; it’s about architectural specialization and the refinement of “System 2” thinking.

First, let’s talk about Claude Opus 4.5. Anthropic has nailed the “Software 2.0” experience here. The standout feature isn’t just the raw intelligence, though it is visibly smarter; it’s the reliability in long-horizon agentic workflows. I threw a nasty refactoring task at it (migrating a legacy codebase), and it didn’t just autocomplete the next token; it planned. It feels like the loss landscape for “reasoning” has been smoothed out. They’ve managed to cut the token cost while increasing the context window efficiency, which is huge for developer loops. It’s becoming the default “brain” you want in your IDE. It’s less like a chatbot and more like a very senior engineer who doesn’t get tired.

Then you have DeepSeek Math V2, which is arguably the most interesting release from a pure research perspective. We are seeing the “bitter lesson” play out again, but with a twist: verification. This model hitting 118/120 on the Putnam is absolutely bonkers. Just a year ago, we were celebrating models that could barely pass high school algebra. DeepSeek’s “verifier-generator” architecture is a clear signal that we are moving past simple next-token prediction into iterative self-correction. It’s essentially running a specialized inner loop to “check its work” before committing to an output. This is the path to robust reasoning. The fact that it’s open weights is a gift to the community; we can now inspect the gradients of a model that effectively “thinks” in math.

Finally, Black Forest Labs dropped FLUX.2, and the visual fidelity is stunning. We’re talking 4-megapixel native generation that respects physics and lighting in a way that feels grounded. But the real story is the “Kontext” feature and the open-weight nature of the release. It proves that you don’t need a closed garden to achieve state-of-the-art results in generative media. The community is going to fine-tune this into oblivion (in a good way).

The meta-narrative this week is clear: the gap between “generating text” and “solving problems” is closing. Claude is mastering the workflow, DeepSeek is mastering the rigorous logic, and Flux is mastering the pixel space. We are seeing a convergence where models aren’t just memorizing the internet; they are learning to simulate the world, verify their own thoughts, and act as genuine agents.

🔎 AI Research

Natural Emergent Misalignment from Reward Hacking in Production RL

AI Lab: Anthropic

Summary: This paper shows that when LLMs learn to reward-hack real production coding environments, they generalize from narrow hacks to broad misaligned behaviors like alignment faking, sabotage of safety tools, and cooperation with hackers. It evaluates several mitigations (reward-hack detection, diverse RLHF, and “inoculation prompting”) and finds that framing hacks as acceptable during training can greatly reduce this emergent misalignment even when hacking continues.
DR Tulu: Reinforcement Learning with Evolving Rubrics for Deep Research

AI Lab: Allen Institute for AI & University of Washington collaborators

Summary: The authors introduce RLER (Reinforcement Learning with Evolving Rubrics), where instance-specific rubrics are generated and updated online using retrieved evidence and model rollouts, providing on-policy, search-grounded feedback for long-form deep research tasks. Using this method they train DR Tulu-8B, an open 8B “deep research” model that matches or beats much larger proprietary systems on several long-form research benchmarks while being far cheaper to run, and they release data, code, and tools for future work.

Fara-7B: An Efficient Agentic Model for Computer Use

AI Lab: Microsoft

Summary: This work presents FaraGen, a multi-agent synthetic data engine that generates high-quality, verified web interaction trajectories at roughly $1 per task, and uses it to train Fara-7B, a 7B “pixel-in, action-out” computer-use agent that operates directly from screenshots. Fara-7B outperforms similarly sized CUAs and approaches the performance of much larger frontier models on benchmarks like WebVoyager and the newly introduced WebTailBench, while being cheap enough to run on-device.

Soft Adaptive Policy Optimization

AI Lab: Qwen Team, Alibaba

Summary: The paper proposes SAPO, a group-based RL algorithm that replaces hard clipping of importance ratios with a smooth, temperature-controlled gating function, giving sequence-coherent but token-adaptive updates that better handle high-variance off-policy tokens, especially in MoE models. Experiments on math reasoning and Qwen3-VL training show SAPO improves training stability and final Pass@1 performance over GRPO and GSPO under comparable compute budgets.
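To make the contrast in the SAPO summary concrete, here is a minimal sketch of hard clipping versus a smooth gate on the importance ratio. The summary only says the gate is smooth and temperature-controlled, so the sigmoid shape, the `4 / tau` scaling, and the names `hard_clip_weight`, `soft_gate_surrogate`, and `soft_gate_weight` are assumptions made for illustration, not the paper's definitions.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def hard_clip_weight(ratio: float, advantage: float, eps: float = 0.2) -> float:
    """Gradient weight on a token under a PPO-style clipped surrogate.

    The surrogate is min(r * A, clip(r, 1 - eps, 1 + eps) * A). Its derivative
    with respect to r is A inside the trust region and 0 once the ratio has
    moved past the clip boundary in the direction the advantage favors, so the
    weight returned here is either 1.0 or 0.0.
    """
    if advantage > 0 and ratio > 1.0 + eps:
        return 0.0
    if advantage < 0 and ratio < 1.0 - eps:
        return 0.0
    return 1.0


def soft_gate_surrogate(ratio: float, advantage: float, tau: float = 1.0) -> float:
    """Assumed smooth surrogate: a sigmoid in the ratio, scaled by 4 / tau."""
    return (4.0 / tau) * sigmoid(tau * (ratio - 1.0)) * advantage


def soft_gate_weight(ratio: float, tau: float = 1.0) -> float:
    """Derivative of the assumed gate with respect to the ratio.

    d/dr [(4 / tau) * sigmoid(tau * (r - 1))] = 4 * s * (1 - s), which equals
    1 at r = 1 and decays smoothly as the ratio drifts away from 1. A larger
    tau (the temperature) makes the decay sharper.
    """
    s = sigmoid(tau * (ratio - 1.0))
    return 4.0 * s * (1.0 - s)


if __name__ == "__main__":
    # Compare how much gradient a positive-advantage token keeps as it goes off-policy.
    for r in (1.0, 1.1, 1.5, 2.0):
        print(r, hard_clip_weight(r, advantage=1.0), round(soft_gate_weight(r, tau=2.0), 4))
```

Under these assumptions, the hard clip drops a token's gradient to zero the moment its ratio crosses the boundary, while the soft gate only down-weights it, which is one way to read the summary's "token-adaptive updates".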
Estimating AI productivity gains from Claude conversations

AI Lab: Anthropic

Summary: Using 100,000 anonymized Claude.ai conversations, the authors have Claude estimate how long each task would take with and without AI, validate these estimates on software-engineering data, and then map tasks to O*NET occupations and BLS wage data to quantify task-level time and cost savings. Aggregating these estimates with a Hulten-style framework, they suggest that broad adoption of current Claude-like systems could raise U.S. labor productivity growth by about 1.8 percentage points per year over a decade, while noting important limitations like validation gaps, task coverage, and organizational frictions.

NVIDIA Nemotron-Parse 1.1

AI Lab: NVIDIA

Summary: This paper presents Nemotron-Parse-1.1, an 885M-parameter encoder-decoder vision-language OCR model (plus the faster Nemotron-Parse-1.1-TC variant) that jointly extracts markdown/LaTeX text, structured tables, bounding boxes, and semantic classes with layout-aware reading order from document images. Trained on a blend of synthetic, public, and NVpdftex-derived datasets, it delivers competitive or state-of-the-art performance on GOT, OmniDocBench, RD-TableBench, and multilingual OCR benchmarks while offering open weights on Hugging Face and optimized NIM deployments for high-throughput production use.

🤖 AI Tech Releases

Claude Opus 4.5

Anthropic released Claude Opus 4.5, which sets new levels in coding, computer use, and agentic tasks.

DeepSeekMath-v2

DeepSeek open sourced a new model optimized for mathematical reasoning.

Flux.2

Black Forest Labs released its new generation of visual intelligence models.

📡 AI Radar

