How HelloBetter Designed Their Interview Process Against AI Cheating

- Gregor Ojstersek and Anna J McDougall from Engineering Leadership <gregorojstersek@substack.com>

With the new process, they improved their time to hire, minimized the risk of AI cheating and focused more on practical programming fundamentals!

Korbit AI (Sponsored)

This week’s newsletter is sponsored by Korbit AI. Korbit is an AI-powered code review and engineering insights tool that helps software development teams deliver better code, faster. It automates pull request (PR) reviews, identifies bugs and issues, and provides actionable feedback and recommendations → just like a senior engineer, but instantly and at scale. Key Features:

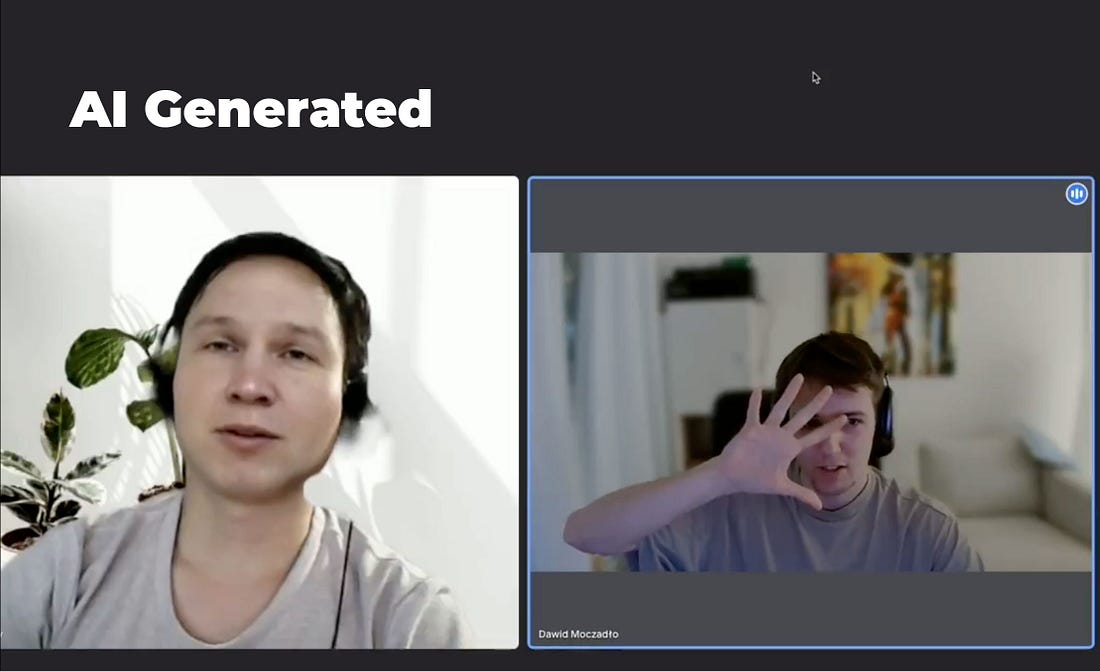

Korbit seamlessly integrates with GitHub, Bitbucket, and GitLab, making it a plug-and-play AI assistant that fits naturally into your workflow → no setup required.

Thanks to Korbit AI for sponsoring this newsletter. Let’s get back to this week’s thought!

Intro

There is a rise in AI-assisted tooling to help with job applications and interviews. Here is an example, posted by Dawid Moczadło not so long ago, where the interviewee changed their appearance with AI and responded to questions with answers generated by ChatGPT. Dawid also mentioned that he could sense the bullet-point style typical of GPT-4 responses. He exposed the candidate by asking them to put their hand in front of their face, which would likely have removed the AI filter. The candidate refused, and he ended the interview shortly after.

In my opinion, such “attempts” are only going to become more common, and it’s important to design your interview process in a way that minimizes their chances of success.

To help us with this, I am happy to have Anna J McDougall, Software Engineering Manager at HelloBetter, as a guest author for today’s newsletter article. She is sharing with us the process she led to restructure their interview process against AI cheating. Let’s hand it over to Anna!

1. A Fairer, More Modern Approach to Technical Interviews

As someone obsessed with tech careers, business growth, and engineering management, I am constantly coming up against the boogeyman of technical interviews. In many ways, tech companies are stuck in the thinking of 20–30 years ago: whiteboards, algorithms, logic puzzles, and tasks built around an in-person interview space. We’re in a new era, where 99% of engineering interviews are done virtually and the candidate’s workspace is beyond the interviewing team’s control.
We need to adapt to this, not by simply porting previous methods to an online platform, but by fundamentally rethinking the skills we expect 21st-century software engineers to have.

Naturally, opinions about what is wrong with technical interviews are prevalent among both employers and (potential) employees. For example, leetcode-style assessments have long been derided as a poor test of ability. “Instead,” opponents argue, “you should use take-home tests or projects that the candidate can explain back to you.”

Ah, but you see, not everyone can afford to let their evenings and weekends disappear into these take-home tests or side projects. Due to this inconveniently linear construct called “time”, more experienced engineers start having or caring for families, adopting pets, or (lord forbid) doing sports and hobbies. It’s also no big secret that the majority of housework and care duties still tend to be shouldered by women. To put your best foot forward as a candidate, you will try to create the best possible solution, no matter how many hiring managers tell you to “only spend 2–3 hours on a solution”. Therefore, take-home or “own project” technical interviews tend to put older candidates and women candidates at a disadvantage.

One other problem popping up nowadays is the prevalence of AI tools that engineers use to pass technical interviews, ranging from manually entering prompts into ChatGPT (now a bit old-school) all the way to having someone else take the technical round for you with your face artificially plastered onto theirs. Going back to take-home tests for a moment, these are all but obsolete in the age of AI, in large part because all the solutions seem to be eerily similar to each other… 👀

So what do you do when…

Here, I share our own journey at the Berlin-based mental health DTx startup HelloBetter in transforming our technical interview round into one that not only mirrors the real work, but also doesn’t require an unreasonable amount of the candidate’s time.

Background

In November 2024, we began reworking our hiring process, starting by defining what our current issues were. Most of these will probably seem familiar to you:

Non-Technical Hiring Changes

First, let’s look at the solutions we implemented that aren’t related to the technical interview:

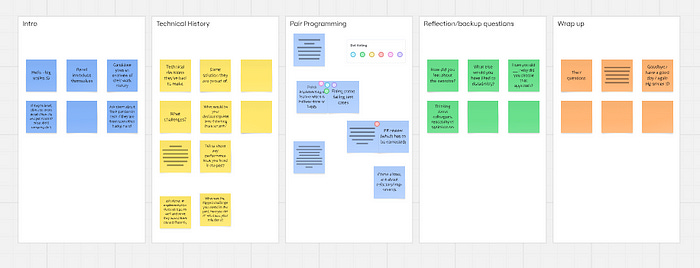

For our most recent hire, I made a point of staying in close contact with our recruiter, exchanging at least one or two Slack messages every day to stay on top of the process, candidates, and next steps. We also had a half-hour sync meeting every week. Most of the changes above were made in collaboration between the Engineering Managers and Recruitment. I volunteered to take on the challenge of defining changes to the technical interview, in large part because I had a clear vision of how it could be done better.

Technical Interview Round: Workshop Phase

I wanted to ensure this was something that involved all of engineering. Since the engineers are the ones conducting the technical interview round, it made sense to have them drive the ideation and narrow down the precise structure, based on my criteria. In Slack, I asked for volunteers to assist me, and got 6 takers. By chance, they divided neatly into two engineers each for mobile, web frontend, and backend. The engineers in question were Julia Ero, Mohamed Gaber Elmoazin, Andrei Lapin, Caio Quinta, Euclides dos Reis Silva Junior, and Chaythanya Sivakumar.

I set up a Miro board and defined the goals/guidelines for what we wanted the technical round to achieve. In two sessions of about 1 hour each, I guided the engineers through questions and discussions about their own experiences both as candidates and as interviewers. As you can see in the image, I provided a few guiding principles:

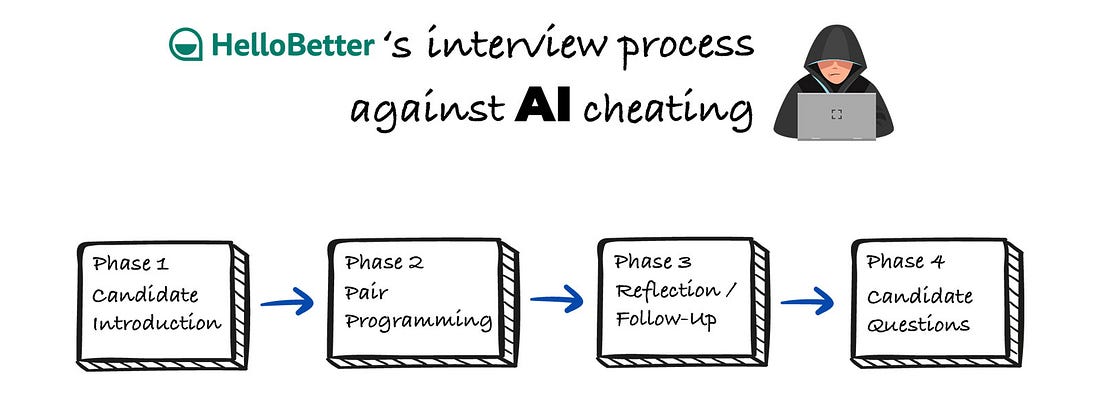

In the first session, we covered the engineers’ own experiences. The goal here was to ground their ideas in lived experience and to ensure that their framing began from the candidates’ perspective. Each person was given their own post-it to fill out, and after each section, I would summarise which points seemed to be most common or agreed upon. From there, we devised the basic structure with a timeline of approximately 75–90 minutes in total.

2. Introducing the McDougall Method

In this 90-minute interview structure, we assess the abilities of the candidate in a real-world scenario, balancing a fair process for the candidate with the pragmatic reality of a technical assessment. The structure can be broken down into four phases:

We then took each of these stages separately and brainstormed together what questions we could ask for each one and, for Step 2 (Pair Programming), how we could best achieve the goals laid out above. Each of these phases is important and serves a crucial purpose. Let’s look at each in turn.

A quick note on naming…

The term “McDougall Method” is my own label for the framework I led, developed and documented to address these technical interview challenges. I chose the name because it’s more convenient than repeatedly saying “our new technical interview method” or “HelloBetter’s updated technical interview round structure”. However, like most things EMs take credit for, it was not actually a solo effort: it owes a lot to the input of the six engineers mentioned above, as well as to HelloBetter CTO Amit Gupta and fellow EMs Leonardo Couto and Garance Vallat, who gave me the freedom to drive this initiative.

Phase 1: Candidate Introduction and Technical Background

This phase is pretty common, but it’s still important to understand why it exists in most technical interviews, rather than jumping straight into a coding exercise or a quiz. This stage includes only two engineers, to avoid overwhelming the candidate but also to prevent any individual’s bias from playing too big a role.

In short, we want candidates to feel as relaxed as possible. We will never have fully relaxed candidates in this situation, but if there’s any way for us to reduce stress, we know it will lead to better performance. The brain does not operate at its best when it’s in fight-or-flight mode.

Here, we smile, introduce ourselves, make a bit of small talk about our roles at the company and maybe even share a fun fact about ourselves. We then give the candidate an opportunity to introduce themselves with the same basic questions they face in most interview stages, which should provide some ‘easy wins’ for them:

…and so on. We explicitly don’t want this stage to go on too long, again because of stress.

As such, we want enough introductory conversation to ‘grease the wheels’, but not so much that they start getting jittery about when the hard part will actually start. For us, we set this limit at about 10–15 minutes.

Phase 2: Pair Programming

This is probably the part of the post you came here to read, so let’s dive into it. As I stipulated earlier, the coding portion of the interview should mimic the real job as closely as possible. For that, we required three things:

The candidate is given read-only access to the repository one hour before the interview, so they can look over it and get a feel for the code, how it’s structured, etc. However, they should not actively implement anything in that hour. Once in the interview, the candidate pairs with one of the interviewing engineers, and together they work out how to solve (potentially) five problems of increasing difficulty.

The “Problem” of AI

“But Anna!” I hear you cry from behind your screen, “This doesn’t solve the problem of cheating with AI! They could have another screen, or another device, and be using AI to solve the problems!”

Well, we’ve managed to overcome this challenge by welcoming our new robot overlords, because the McDougall Method explicitly allows AI tools to be used. That’s right. The candidate shares their screen, and is told (both in earlier communication and in the interview itself) that they can use Google, Stack Overflow, GPT, Copilot, Cursor, whatever they want. Most importantly, they can ask the interviewers any questions they like, and they should.

If we accept that we want our engineers to use AI tools to boost their productivity, then we have to view AI use as a skill. If our technical interview rounds are for assessing technical skill, then AI use must be allowed.

Dos and Don’ts for the Codebase

I want to take a small pause here to cover some Dos and Don’ts for this phase. Do:

Don’t:

On this last point, GitHub does not yet offer a way to schedule repository access. My hope is that this post and the ensuing avalanche of teams switching to the McDougall Method might inspire them to offer it as an option. At present, the only way to do this is to schedule a reminder for yourself (or one of the interviewing engineers) one hour before the interview.

Technical Grading of the Exercises

I mentioned above that we use five different ‘levels’ of problem for each codebase, for example, a styling change as Level 1 and a full functional refactor as Level 5. These levels should roughly correspond to a technical grading for the candidate. Here are some examples based on React as a framework:

Why? Because, again, our goal here is not to see who our ‘best’ candidate is, but to see whether the level we’re hiring into matches the candidate. This can also help avoid situations where a candidate underlevels themselves (again, statistically more likely to occur with women), and ensures that we accurately determine which role to hire them into, which in turn leads to fair pay and lower mid- to long-term turnover.
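Returning briefly to the GitHub limitation mentioned earlier: the reminder workaround is manual, but a job run by any scheduler you already use (cron, a CI schedule, etc.) could in principle make the call itself through GitHub’s REST API endpoint for adding a repository collaborator, `PUT /repos/{owner}/{repo}/collaborators/{username}`, with `permission` set to `"pull"` (read-only). This is a minimal sketch, not HelloBetter’s tooling: it only builds the requests with Python’s standard library and computes the grant time. The organisation, repository, username and token are placeholders, and revoking access after the interview is an added assumption, not part of the process described here. GitHub only honours `permission` on organisation-owned repositories, and an outside collaborator receives an invitation they must accept.

```python
import json
import urllib.request
from datetime import datetime, timedelta, timezone
from typing import Optional

GITHUB_API = "https://api.github.com"
# The article grants read-only access one hour before the interview starts.
ACCESS_LEAD_TIME = timedelta(hours=1)


def access_grant_time(interview_start: datetime) -> datetime:
    """Return the moment at which read-only access should be granted."""
    return interview_start - ACCESS_LEAD_TIME


def _github_request(method: str, path: str, token: str,
                    body: Optional[dict] = None) -> urllib.request.Request:
    """Build (but do not send) an authenticated GitHub REST API request."""
    data = json.dumps(body).encode("utf-8") if body is not None else None
    req = urllib.request.Request(GITHUB_API + path, data=data, method=method)
    req.add_header("Accept", "application/vnd.github+json")
    req.add_header("Authorization", f"Bearer {token}")
    req.add_header("X-GitHub-Api-Version", "2022-11-28")
    if data is not None:
        req.add_header("Content-Type", "application/json")
    return req


def grant_read_access(owner: str, repo: str, username: str,
                      token: str) -> urllib.request.Request:
    # "pull" is GitHub's read-only permission (honoured on organisation-owned repos).
    return _github_request("PUT", f"/repos/{owner}/{repo}/collaborators/{username}",
                           token, {"permission": "pull"})


def revoke_access(owner: str, repo: str, username: str,
                  token: str) -> urllib.request.Request:
    # Assumption: access is removed again after the interview (not described in the article).
    return _github_request("DELETE", f"/repos/{owner}/{repo}/collaborators/{username}",
                           token)


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    start = datetime(2025, 3, 10, 14, 0, tzinfo=timezone.utc)
    print(access_grant_time(start).isoformat())  # 2025-03-10T13:00:00+00:00
    req = grant_read_access("example-org", "interview-repo", "candidate-login", "GITHUB_TOKEN")
    print(req.get_method(), req.full_url)
    # urllib.request.urlopen(req) would actually send it at the scheduled time.
```

Keeping request construction separate from sending makes the timing and payload easy to check before anything touches a real repository; the scheduler is then only responsible for calling `urlopen` at the computed time.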

Why 40 minutes?

Put simply, because it’s enough time. You aren’t going to learn much about a candidate that 40 minutes of pair programming with them hasn’t already shown you. In truth, our final guidelines indicate a minimum of 30 and a maximum of 45 minutes, but 40 minutes seems to be the sweet spot.

Assessment Criteria

I’ve already indicated that coding ability and effective information sourcing (e.g. asking the colleague questions, using Google/AI, etc.) are the main goals of this round. However, we also assess their ability to communicate their ideas about how they’re solving the problem. Here we ask the interviewing engineers to answer four questions:

We also give a free text area where they can go into more detail, for example about the tools used, any major errors or points of confusion, etc. As much as I enjoy the structure of the first three questions, the free text box is usually where the most valuable information pops up, for example:

After that, we move into the next phase, which is directly related to the pair programming task.

Phase 3: Reflection / Follow-Up Questions

Almost as important as the pair programming session itself are the questions the team asks afterwards. In a traditional technical interview round, the coding exercise itself is supposed to reveal the technical knowledge of the candidate. In the McDougall Method, the pair programming session is more about assessing how they work. In Phase 3, the team’s job is to ask questions that allow the candidate to show how well they understand what they implemented. As you can see, this phase itself has two steps.

Reflection

First, we allow the candidate to reflect on how they feel. We know nerves are on edge, tensions are high, and candidates almost never perform at their best in an interview. So, let’s give them a chance to say so. The first question every interviewing engineer should ask before anything else in Phase 3 is: How did you feel about that exercise? This is an open question that allows them to explain their emotions, worries, and fears, or even what they’re proud of. From there, questions can arise about what they would have liked to do differently, or what other tasks they would have liked to tackle.

Follow-Up Questions

After this initial reflection come the follow-up questions. These revolve around why they chose a certain approach, what pitfalls might occur, and how certain implementations would or could scale or be maintained; or perhaps questions about naming conventions, readability, or memory management. In our latest round of interviews, it was here that good “implementers” had their knowledge gaps revealed. This is not necessarily a bad thing for the candidate because, as stated earlier, it is the hiring manager’s prerogative to match the candidate to the role.
For example, if you’re hiring a junior or lower mid-level candidate, you won’t expect many deep answers here, but knowing the limits of your hire will help with forming a development plan for their first year at the company.

Note: This is the section in which the engineers require the most EM guidance in terms of accurately defining the expected level of analysis/understanding.

Assessment Criteria

Because this section can vary so much, we opted to keep one question about the candidate’s openness to feedback, but otherwise rely mainly on a free text summary in which the interviewers describe the candidate’s approach, strengths, weaknesses, etc.

Phase 4: Candidate Questions and Wrap-Up

I don’t have much unique insight to add here, but I would say this is a crucial step in any interview. Some guidelines I provided to the engineers were:

Assessment Criteria

As with Phase 1, we limit the assessment criteria to free-text notes about what questions they asked and any final remarks.

Providing Feedback About the Candidate

In addition to the fields and questions outlined above, our HR software Personio offers a “Negative”, “Neutral”, and “Positive” option for every candidate. Our instructions for this final rating are as follows:

In short, the final rating should act as a way to give us a ‘vibe check’. Personally, being aware of some of the issues around cultural evaluations, particularly for neurodivergent candidates, I would accept any candidate rated Neutral or Positive if the other criteria are met.

Overall Evaluation Field

The last field is an open text field where each interviewing engineer can summarise their thoughts. In both our Confluence documentation and our training sessions, we emphasise the need to note both strengths and weaknesses in this field. In the case of someone who really can’t complete the exercises or is totally underqualified, I would also expect the engineers to outline that here.

3. Outcomes of the McDougall Method

Improved time to hire

All of the changes above brought our “Days to Fill” metric down to 30% of its usual value in our latest hiring round. Naturally, time is money. This metric was influenced by the streamlined interview process, the close work with our recruiter, and the engineers being trained to submit predictable, standardised evaluations. In addition to the hours saved by moving the interview process along quickly, we also save the time and mental energy that would otherwise go into context-switching while keeping candidates in an overly long pipeline: this goes for both the hiring manager and the interviewing engineers.

Lower chance of using AI to cheat and misrepresent their abilities

Although this is still technically possible, separating the coding phase from the analytical phase makes it harder to use AI. Questions are based around feelings, hypotheticals, and preferences, many of which have no right or wrong answer. The ability to openly use AI during the pair programming exercise removes the element of ‘shame’ from using it as a tool.

Practical programming fundamentals are key

As a candidate, the McDougall Method requires zero memorisation or cramming of factoids. Instead, practical programming fundamentals become key.
If you’ve worked as a mid-level engineer at a company for the last three years, you will likely be levelled as mid-level with the McDougall Method, regardless of how recently you inverted a binary tree, how many JavaScript concatenation quirks you can recite, or how many HTTP status codes you have memorised.

The focus is on levelling candidates, not on pass/fail

The emphasis of this method is on levelling candidates and determining their strengths and weaknesses, NOT on giving them a pass/fail grade based on the interviewing engineers’ assumptions. A candidate could be technically strong, but the cultural tradeoff might not be worth it compared to a more technically flawed candidate who is an excellent team player. Furthermore, within the method itself, there is room to distinguish between those who are implementers and those who are knowledge-havers. We all have a balance of both, and the structure allows us to see who is strong where, and what areas still need to be built up, which is crucial to building a development plan for a new starter.
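To make the “levelling, not pass/fail” idea concrete, here is a minimal sketch of how one interviewer’s structured notes might be turned into a levelling summary. It assumes the five exercise levels are attempted in order of difficulty, so the highest level completed is the main signal, and it encodes the rating rule described above (Neutral or Positive is acceptable if the other criteria are met). The field names and especially the level-to-grade mapping are hypothetical, invented purely for illustration; they are not HelloBetter’s actual rubric, which each team would define per position.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List


class Rating(Enum):
    """The three final-rating options offered in Personio, per the article."""
    NEGATIVE = "Negative"
    NEUTRAL = "Neutral"
    POSITIVE = "Positive"


@dataclass
class Evaluation:
    """One interviewer's structured notes (hypothetical field names)."""
    levels_completed: List[int]  # exercise levels (1-5) finished during pair programming
    rating: Rating
    strengths: List[str] = field(default_factory=list)
    weaknesses: List[str] = field(default_factory=list)


@dataclass
class LevellingResult:
    suggested_grade: str
    rating_acceptable: bool
    strengths: List[str]
    development_areas: List[str]


def level_candidate(ev: Evaluation, level_to_grade: Dict[int, str]) -> LevellingResult:
    """Summarise an evaluation as a level plus notes, instead of a pass/fail flag."""
    # Assumes levels are attempted in increasing difficulty, so the highest one reached matters most.
    reached = max(ev.levels_completed, default=0)
    return LevellingResult(
        suggested_grade=level_to_grade.get(reached, "no matching grade"),
        # Article's rule: Neutral or Positive is acceptable if the other criteria are met.
        rating_acceptable=ev.rating is not Rating.NEGATIVE,
        strengths=list(ev.strengths),
        # Weaknesses are carried over as development areas for the new starter's plan.
        development_areas=list(ev.weaknesses),
    )


# Hypothetical mapping for illustration only; not HelloBetter's real grading.
EXAMPLE_LEVEL_TO_GRADE = {1: "Junior", 2: "Junior", 3: "Mid-level", 4: "Mid-level", 5: "Senior"}

result = level_candidate(
    Evaluation(levels_completed=[1, 2, 3], rating=Rating.NEUTRAL,
               strengths=["clear communication"], weaknesses=["test coverage"]),
    EXAMPLE_LEVEL_TO_GRADE,
)
print(result.suggested_grade, result.rating_acceptable)  # Mid-level True
```

The result deliberately carries strengths and development areas forward alongside the suggested grade, rather than collapsing everything into a single yes/no.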

Future Action Points

There are still some ways to improve this method:

These two points lead me to believe that a new framework for devising and assessing this phase is required, for example, some sort of question matrix or pre-hiring questionnaire to assess the relative importance of different technical insight areas and levels of knowledge per position.

Call to Action

Let’s keep things simple. My call to action is this… Try it. Take it to your EMs or your team and talk about it. What are the pros and cons? What might be missed? If this resonates, share it with your team. I’m especially keen to hear what you’d do differently, whether here in the comments or on LinkedIn, where I regularly post about engineering management, tech careers, and my own observations about the industry, and healthtech in particular.

Last words

Special thanks to Anna for sharing her insights on this important topic with us! Make sure to follow her on LinkedIn and also check out her awesome talk on How to Turn Engineering Work into a Promotion. I highly recommend watching it if you are interested in growing in your career.

Get in touch

You can find me on LinkedIn, X, YouTube, Bluesky, Instagram or Threads. If you would like me to write about a particular topic, you can send me an email at info@gregorojstersek.com.
