Reasoning Models are a huge part of AI community discussions and debates, gaining more and more attention every day since the appearance of OpenAI’s o1 model and then open-sourced DeepSeek-R1. It seems that this topic has already been picked apart from every angle, which marks that we are dealing with a true breakthrough. As Nathan Lambert claims in his blog “this year every major AI laboratory has launched, or will launch, a reasoning model” because these models can solve “the hardest problems at the frontier of AI” far better than previous generation models. | While we already have so much information about Reasoning Models and every week a lot of papers introduce their approaches for improving “reasoning” capabilities of models, surprisingly, we still don’t have a solid definition of what exactly a Reasoning Model is. The main debate is whether reasoning models even deserve to be a separate category. But let’s call things what they are. | Today, we’re going to take a deep dive into what exactly counts as a Reasoning Model, which models fall into that category, and where this field might be headed in the future to unlock the upcoming AI possibilities. So let’s get started. | | In today’s episode, we will cover: | Controversial nature of Reasoning Models What makes Reasoning Models different? Models which fall into the “Reasoning” category Limitations: The problems of overthinking and more Future perspectives Conclusion Sources and further reading

| Controversial nature of Reasoning Models | The rise of Reasoning Models has sparkled lively debate in the AI field: Should these “thinking” models be considered a separate category of AI, distinct from plain LLMs? Or are they essentially the same core technology with some clever add-ons? Well, let’s look at both sides of this issue. | Proponents argue that models optimized specifically for reasoning, represent a qualitative leap from LLMs despite being built on them. Thanks to new concepts, such as special reinforcement learning (RL) phase used for training and increased inference-time compute, reasoning models unlock new capabilities, which are used as arguments for placing them in a separate category: | Performance leaps: Models like OpenAI’s o1, o3 outperform traditional LLMs on hard and long tasks, like solving 20-step math problems or generating complex code. Smaller Reasoning Models outperforming larger LLMs on reasoning benchmarks. Many major AI labs released reasoning-focused models in 2024–2025. Branding shift: Models are explicitly marketed as “reasoners”, emphasizing that they are different from general chatbots. These models are designed not just for output generation, but for reasoning, planning, and tool use – the early foundations of agentic AI.

| Nathan Lambert, who emerged as a key explainer of Reasoning Models, suggests calling them “Reasoning Language Models (RLMs)” and notes that their emergence has “muddied” the old taxonomy of pre-training vs fine-tuning. He also emphasized how RLMs redefined the post-training landscape and how Reinforcement Learning with Verifiable Rewards (RLVR) has led to main advancements in model capabilities. (check the Sources section for all links) | While Andrej Karpathy hasn’t explicitly argued for or against a new category, his observations highlight that something qualitatively different is happening inside these RL-trained models: “It's the solving strategies you see this model use in its chain of thought. It's how it goes back and forth thinking to itself. These thoughts are emergent (!!!) and this is actually seriously incredible,” – he wrote in his post on Twitter, on January 27, 2025. | Many academic studies, like “Reasoning Language Models: A Blueprint” echo the idea of dividing Reasoning Models from LLMs, describing them as a “transformative breakthrough in AI, on par with the advent of ChatGPT” moving AI closer to general problem-solving and possibly AGI. | On the other side, many remain cautious about overhyping Reasoning Models as something fundamentally new. Under the hood, they are still transformer-based language models using the same next-token prediction objective. Their improved performance comes not from architectural breakthroughs but from methods like supervised fine-tuning, reinforcement learning, chain-of-thought prompting, longer inference runs, and carefully curated training data. | So the main counterpoints include: | No architectural change:

Reasoning ability is a product of optimized training regimes (e.g., SFT, RLHF, ReFT), inference-time scaffolding (like CoT and majority voting), and increased compute – not new model designs. They are still standard autoregressive LLMs. Limited generalization:

High performance in math, code, or logic puzzles doesn’t generalize to open-ended, commonsense, or causal reasoning. Models often fail on tasks that require real-world understanding, novel abstraction, or reasoning under uncertainty. ARC, counterfactuals, and long-horizon tasks remain challenging. Branding vs. substance:

Terms like “reasoner” risk overstating capabilities and fueling AGI hype. Narrow domains:

Some reasoning-tuned models underperform on general language tasks like storytelling, open-ended dialogue, or real-world question answering. Their specialization can lead to regressions in fluency, creativity, or common sense – casting doubt on how “general” their reasoning really is.

| Melanie Mitchell, Professor at the Santa Fe Institute, question whether reasoning models reflect real understanding or simply mimicked heuristics: “The performance of these models on math, science, and coding benchmarks is undeniably impressive. However, the overall robustness of their performance remains largely untested, especially for reasoning tasks that, unlike those the models were tested on, don’t have clear answers or cleanly defined solution steps” (from the article “Artificial intelligence learns to reason”) | But does we really need a completely new architecture with the best capabilities in all domains to define model as a new type? Maybe we should admit that sometimes new concepts, new paradigms and new view on what we already have can lead to opening a new family of models that can be specified for certain tasks. | | From the Turing Post side, we do define Reasoning Language Models (RLMs) as one of the models types. Here is why it makes sense. | Note: In the post below, we called Reasoning Models LRM, or Large Reasoning Models, but taking into account the variation of size of Reasoning Models that already exist, it’s better to define the entire group as “Reasoning Language Models”, or RLMs. |  | TuringPost @TheTuringPost |  |

| |

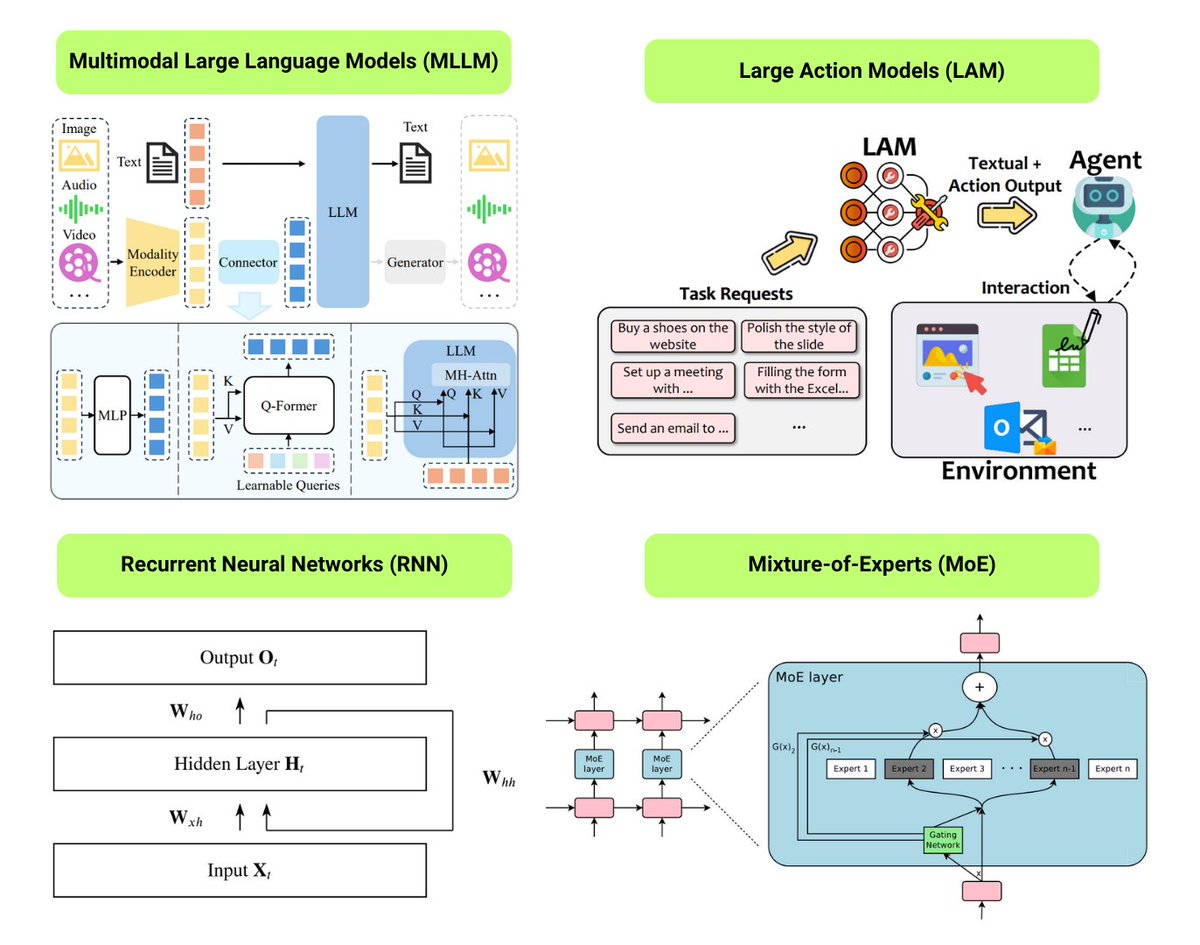

12 Foundational AI Model Types ▪️ LLM

▪️ SLM

▪️ VLM

▪️ MLLM

▪️ LAM

▪️ LRM

▪️ MoE

▪️ SSM

▪️ RNN

▪️ CNN

▪️ SAM

▪️ LNN Save the list and check this out for explanations and links to the useful resources: huggingface.co/posts/Kseniase… | |  | | | 12:24 PM • Jun 8, 2025 | | | | | | 1.1K Likes 263 Retweets | 8 Replies |

|

| What makes Reasoning Models different? | If we gather the main features of RLMs in one definition we would say that they are advanced AI systems specifically optimized for multi-step logical reasoning, complex problem-solving, and structured thinking. RLMs incorporate test-time scaling, RL post training, Chain-of-Thought reasoning, tool use, external memory, strong math and code capabilities, and more modular design for reliable decision-making. | Let’s break down what stands behind each feature step by step: | | Join Premium members from top companies like Microsoft, Google, Hugging Face, a16z, Datadog plus AI labs such as Ai2, MIT, Berkeley, .gov, and thousands of others to really understand what’s going on with AI. Simplify your learning journey 👆🏼 |

|

| It’s an important topic – make a choice if this article should be open to public. If we get more than 100 votes, we will open this article to the broader discussion. Vote→ | Shall we make this post public to invite broader discussion? | |

|

|

|