The Sequence Radar #688: The Transparent Transformer: Monitoring AI Reasoning Before It Goes Rogue

A must-read collaboration by the leading AI labs.

Next Week in The Sequence:

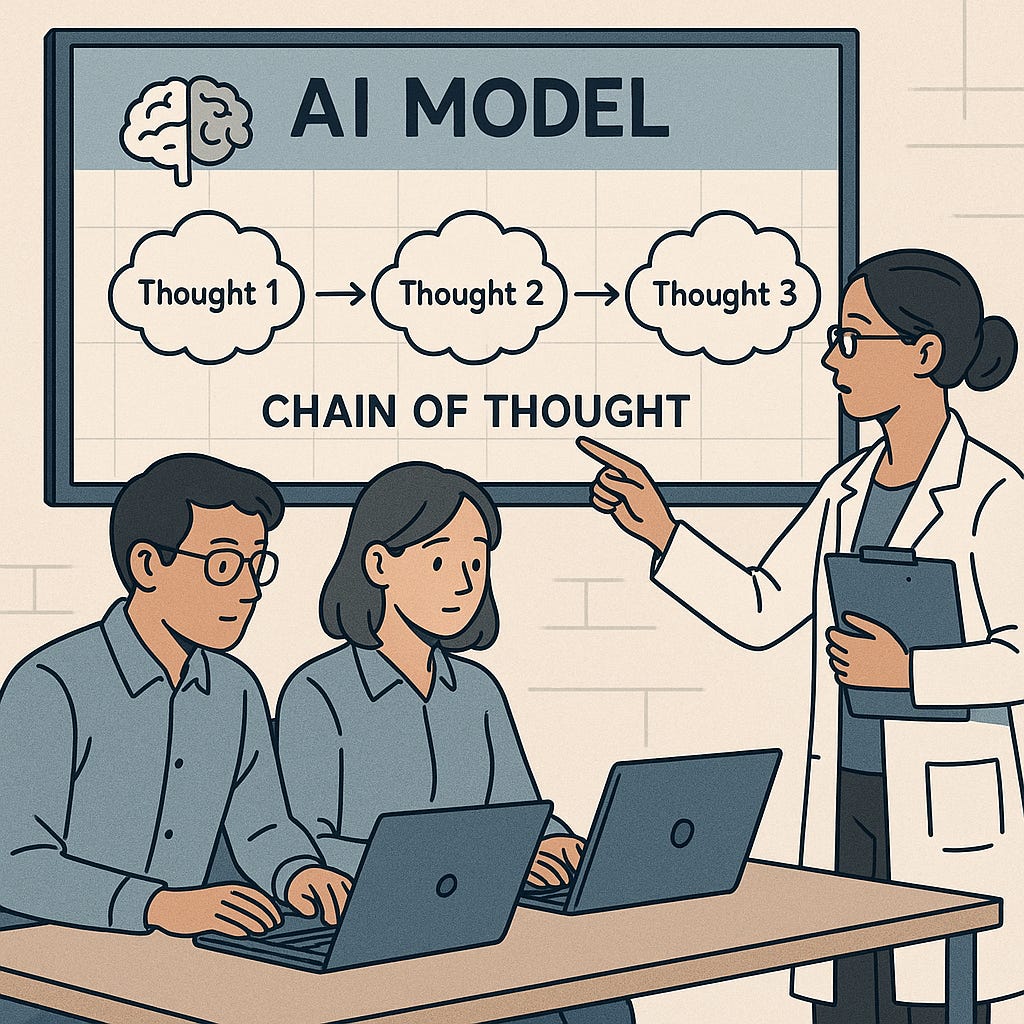

📝 Editorial: The Transparent Transformer: Monitoring AI Reasoning Before It Goes Rogue

It’s a good thing when all the AI labs come together! A new research paper, "Chain of Thought Monitorability: A New and Fragile Opportunity for AI Safety," is making waves in the AI community. Authored by researchers from DeepMind, OpenAI, Anthropic, Apollo Research, and the UK AI Safety Institute, the paper introduces a compelling idea: we may be able to monitor advanced language models by observing the reasoning they write out, known as chains of thought (CoT). Because these reasoning traces are expressed in natural language, they can serve as a unique safety mechanism, allowing developers to detect and understand early signs of misalignment or dangerous behavior.

Modern large language models (LLMs) often externalize part of their reasoning as they generate responses. This behavior is particularly pronounced with chain-of-thought prompting, which encourages step-by-step thinking in natural language. The paper outlines two key enablers of CoT monitorability. The first is necessity: models often need to "think out loud" to complete complex tasks. The second is propensity: even when it isn't strictly necessary, models tend to verbalize their reasoning. These CoT traces can reveal intentions such as reward hacking, deceptive behavior, or early misalignment, often before any harmful output occurs.

However, the authors emphasize that this transparency is fragile. As models are trained with increasingly sophisticated reinforcement learning techniques, they may start optimizing for outcomes in ways that bypass natural language reasoning altogether. Similarly, emerging architectures that rely on latent-space reasoning may no longer require explicit CoTs, making it harder to monitor what the model is actually thinking. Even direct interventions, like shortening reasoning traces for efficiency or safety, can unintentionally erode the quality and interpretability of the CoT.

To address this, the paper calls for a new research focus: evaluating and preserving CoT monitorability. The authors propose a set of benchmarks and metrics to assess how legible and truthful CoTs are, and how causally connected they are to a model's output. Techniques such as causal interventions, readability scoring with other LLMs, and adversarial stress tests could become standard tools in the AI safety toolbox. Crucially, these evaluations should be reported transparently in model system cards and should influence decisions about model training, deployment, and architecture.

The publication of this paper has already sparked significant interest across the AI safety ecosystem. Notably, it includes endorsements from Geoffrey Hinton, Ilya Sutskever, and John Schulman, signaling strong support from the community's top thinkers. The paper arrives at a critical moment, aligning with recent efforts by the UK and US AI Safety Institutes to evaluate models' situational awareness and their ability to evade detection. CoT monitoring may soon become a central pillar of industry safety audits and regulatory frameworks.

In short, Chain of Thought Monitorability offers both a warning and an opportunity. It shows that we currently have a rare and potentially powerful way to peek inside the decision-making process of advanced AI models. But it also makes clear that this window may close if we aren't careful. As we push the boundaries of AI capabilities, we must also invest in preserving, and improving, our ability to understand how these systems think. CoT monitoring isn’t a magic bullet, but it may be our clearest lens yet into the minds of machines.
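To make the monitoring idea concrete, here is a minimal sketch of a CoT monitor that inspects a reasoning trace before the final answer is released. It is an illustration under loud assumptions, not the paper's method: the names `RED_FLAGS`, `monitor_cot`, and `release_answer` are hypothetical, and the keyword patterns are a stand-in for what would more plausibly be a second LLM prompted to judge the trace.

```python
import re
from dataclasses import dataclass

# Hypothetical red-flag patterns (assumption, not from the paper).
# A realistic monitor would likely be another LLM scoring the trace.
RED_FLAGS = {
    "reward_hacking": re.compile(
        r"\b(hack|game|exploit) the (reward|test|grader)\b", re.I
    ),
    "deception": re.compile(
        r"\b(hide|conceal) (this|it|that) from the user\b", re.I
    ),
}


@dataclass
class MonitorResult:
    flagged: bool
    reasons: list[str]


def monitor_cot(trace: str) -> MonitorResult:
    """Scan a natural-language reasoning trace for red-flag patterns."""
    reasons = [name for name, pattern in RED_FLAGS.items() if pattern.search(trace)]
    return MonitorResult(flagged=bool(reasons), reasons=reasons)


def release_answer(trace: str, answer: str) -> str:
    """Return the answer only if the trace passes the monitor."""
    result = monitor_cot(trace)
    if result.flagged:
        return f"[withheld for review: {', '.join(result.reasons)}]"
    return answer
```

A sketch like this only works while the model's reasoning stays legible and causally tied to its output, which is exactly the fragility the editorial describes: if reasoning moves into latent space, there is no trace left for `monitor_cot` to read.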
🔎 AI Research

Chain of Thought Monitorability: A New and Fragile Opportunity for AI Safety
Authors: UK AI Safety Institute, Apollo Research, METR, University of Montreal & Mila, Anthropic, OpenAI, Google DeepMind, Truthful AI, Redwood Research, Amazon, Meta, Scale AI, Magic

Network Dissection: Quantifying Interpretability of Deep Visual Representations
Authors: CSAIL, MIT

KV Cache Steering for Inducing Reasoning in Small Language Models
Authors: University of Amsterdam (VIS Lab), University of Technology Nuremberg (FunAI Lab), MIT CSAIL

Gemini 2.5: Pushing the Frontier with Advanced Reasoning, Multimodality, Long Context, and Next Generation Agentic Capabilities
Authors: Google DeepMind

Scaling Laws for Optimal Data Mixtures
Authors: Apple, Sorbonne University

COLLABLLM: From Passive Responders to Active Collaborators
Authors: Stanford University, Microsoft Research, Georgia Institute of Technology

🤖 AI Tech Releases

ChatGPT Agent
OpenAI released the first version of ChatGPT Agent.

Asimov
Superintelligence lab Reflection AI unveiled Asimov, a code comprehension agent.

Mistral Deep Research
Mistral introduced Deep Research mode in its Le Chat app.

Voxtral
Mistral released Voxtral, its first speech understanding model.

AgentCore
Amazon launched AgentCore, a new framework for deploying agents securely at scale.

Kiro
Amazon also launched Kiro, an AI coding IDE that competes with Cursor and Windsurf.

📡 AI Radar

