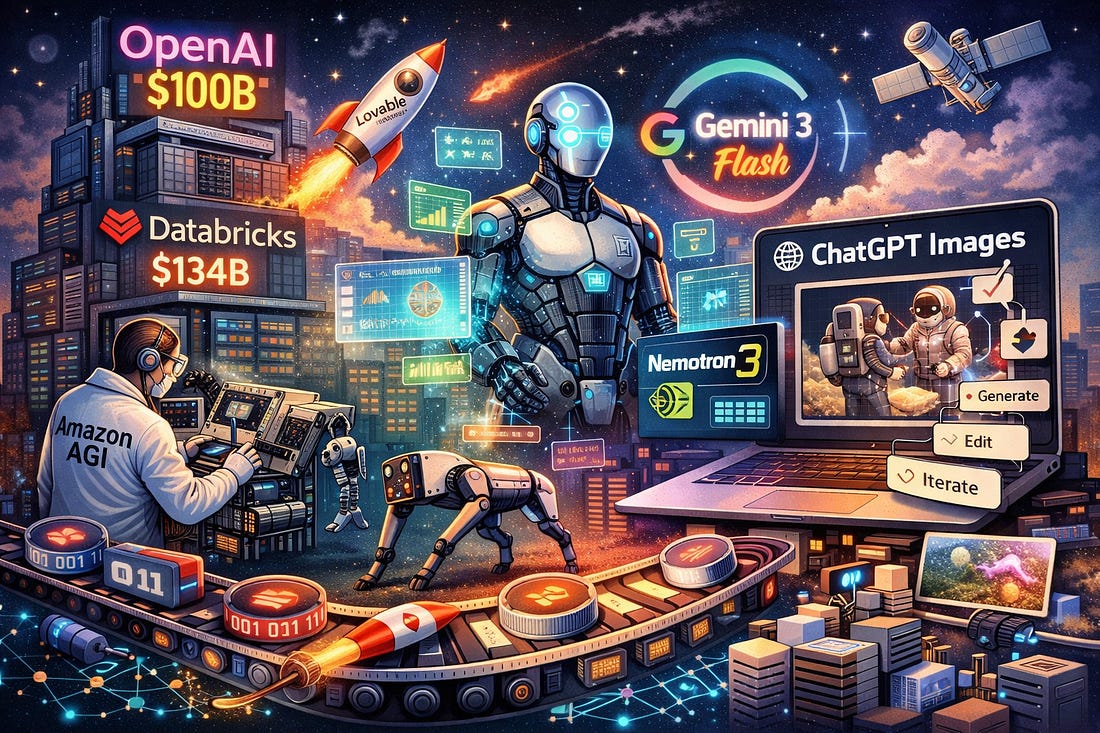

The Sequence Radar #775: Last Week in AI: Tokens, Throughput, and Trillions

NVIDIA, OpenAI, Google releases plus massive funding news.

Next Week in The Sequence:
Our series about synthetic data continues with an exploration of RL trajectories for synthetic data generation. In the AI of the week edition, we discuss NVIDIA's amazing Nemotron 3 release. In the opinion section, we discuss some new ideas in AI research that might unlock new waves of innovation.

📝 Editorial: Last Week in AI: Tokens, Throughput, and Trillions

This week's AI story didn't arrive as one dramatic demo. It arrived as a synchronized upgrade across the stack, with capital, platforms, and product surfaces all moving in lockstep.

Start with funding, because it quietly sets the ceiling on everything else. OpenAI's reported fundraising discussions, potentially up to $100B at an eye-watering valuation, signal that frontier AI is now as much an infrastructure buildout as it is a research program. At that scale, "model roadmap" becomes a question of power budgets, data-center buildouts, and how cheaply you can serve intelligence at global latency. The winners won't just be the teams with the smartest models; they'll be the teams that can industrialize those models into reliable, low-friction services.

The enterprise layer tightened too. Databricks' new multi-billion-dollar round reinforces a platform thesis: whoever sits closest to the governed data plane becomes the default runtime for analytics and AI apps. Enterprise AI is less about picking a single model and more about closing loops: secure retrieval, permissions-aware pipelines, evaluation harnesses, observability, and telemetry that converts production traces into better prompts, better policies, and better fine-tunes. The platform that owns those loops becomes the operating system.
Alongside the data giants, the "software is changing shape" story got its own funding exclamation point: Lovable's latest round is a bet that intent-to-app workflows ("vibe coding," for better or worse) are becoming a mainstream interface for building software. Whether you see that as democratization or risk, the direction is clear: less time on scaffolding, more time on iteration.

Then come the releases, which rhyme with the same thesis in engineering terms: optimize agentic throughput per dollar, then ship it where people actually build. NVIDIA's Nemotron 3 line is positioned as an open-model family built for agentic systems, meaning workloads where you care less about a single perfect answer and more about sustained, multi-step execution under tight cost and latency constraints. The subtext is important: open models aren't only chasing benchmark glory anymore. They're chasing "tokens moved through a workflow" at predictable unit economics, because that's what scales multi-agent deployments from demos to systems.

Google's Gemini 3 Flash pushes the "fast path" model into the default experience. This is the product philosophy shift of the year: latency is now a capability. If a model is smarter but feels slow, it loses mindshare, and mindshare is the new moat. Flash-class models aim to be always-on, cheap enough to call constantly, and good enough that users rarely feel the need to escalate to heavier tiers. That's how intelligence becomes ambient: not by being miraculous once, but by being consistently available.

Finally, OpenAI's ChatGPT Images update turns multimodal output into something closer to an editable workflow artifact. Image generation has existed for a while, but it often lived in a separate-tool mindset: prompt, generate, download, repeat.
When images are generated and iterated inside the chat loop, with stronger instruction following, more reliable edits, and better preservation of important details, they stop being a novelty and start behaving like a creative IDE. The difference is subtle but decisive: iteration becomes the core feature, not the first render.

Put together, the week reads like a blueprint. Fund the infrastructure. Own the data plane. Make building conversational. Ship models optimized for throughput and latency. Turn outputs into editable artifacts. AI isn't just getting smarter; it's getting more deployable.

🔎 AI Research

OLMo 3
AI Lab: Allen Institute for AI (AI2)
Summary: OLMo 3 introduces fully open 7B and 32B language models aimed at long-context reasoning, tool/function calling, coding, instruction following, chat, and knowledge recall. The release is "fully open" in the strong sense: it ships the entire model flow (data, code, intermediate checkpoints, and dependencies), with Olmo 3.1 Think 32B positioned as the strongest fully open thinking model at the time of release.

Adaptation of Agentic AI
AI Lab: Academic consortium (UIUC, Stanford, Princeton, Harvard, UW, Caltech, UC Berkeley, UCSD, Georgia Tech, Northwestern, TAMU, Unity)
Summary: This survey unifies the fast-growing "agentic AI adaptation" literature into a single framework spanning agent adaptation and tool adaptation, and breaks the space into four paradigms: A1 (tool-execution–signaled), A2 (agent-output–signaled), T1 (agent-agnostic tool adaptation), and T2 (agent-supervised tool adaptation). It then maps representative methods into that taxonomy, compares their trade-offs (cost, modularity, generalization), and surfaces open directions such as co-adaptation, continual/safe adaptation, and efficiency.

Efficient-DLM: From Autoregressive to Diffusion Language Models, and Beyond in Speed
AI Lab: NVIDIA
Summary: This paper studies how to convert pretrained autoregressive (AR) LMs into diffusion LMs via continuous pretraining, arguing that block-wise attention conditioned on clean context best preserves AR capabilities while enabling efficient KV-cached parallel decoding. It also introduces position-dependent token masking to better match diffusion inference behavior, yielding Efficient-DLM models with improved accuracy–throughput trade-offs versus AR and prior diffusion baselines.

QwenLong-L1.5: Post-Training Recipe for Long-Context Reasoning and Memory Management
AI Lab: Tongyi Lab (Alibaba Group)
Summary: QwenLong-L1.5 proposes an end-to-end post-training recipe for long-context reasoning, combining a scalable synthesis pipeline for multi-hop, globally grounded tasks with stabilized long-context RL (including task-balanced sampling, task-specific advantage estimation, and AEPO). It also adds a memory-agent framework to handle ultra-long inputs (beyond the native context window, up to multi-million tokens), reporting substantial gains over its base model and competitiveness with top proprietary systems on long-context benchmarks.

Google Research 2025: Bolder breakthroughs, bigger impact
AI Lab: Google Research
Summary: A year-in-review post highlighting Google Research's 2025 breakthroughs and how they translated into real-world impact across products, science, and society, including work to make generative models more efficient, factual, and multilingual/multicultural, plus new agentic tools to accelerate scientific discovery. It also spotlights major thrusts such as generative UI (dynamic, interactive interfaces), quantum computing progress (e.g., "verifiable quantum advantage" work), and multi-agent systems like the AI co-scientist.

NVIDIA Nemotron 3: Efficient and Open Intelligence
AI Lab: NVIDIA
Summary: This white paper describes the Nemotron 3 family (Nano, Super, Ultra), centered on a hybrid Mamba–Transformer Mixture-of-Experts design for high throughput and very long context (up to 1M tokens). It highlights techniques such as LatentMoE, NVFP4 training, multi-token prediction, and multi-environment RL post-training, alongside plans to release weights, recipes, and eligible data.

🤖 AI Tech Releases

Gemini 3 Flash
Google released Gemini 3 Flash, a faster and more cost-efficient version of its marquee model.

ChatGPT Images
OpenAI released ChatGPT Images, a new set of image editing capabilities integrated into ChatGPT.

Nemotron 3
NVIDIA released Nemotron 3, a new series of open models optimized for efficiency and agentic workflows.

SAM Audio
Meta AI released SAM Audio, a model for prompt-based audio separation.

📡 AI Radar
