The Sequence Radar #727: Qwen’s One‑Week Gauntlet

Alibaba Qwen is pushing new models at an incredible pace.

Next Week in The Sequence:

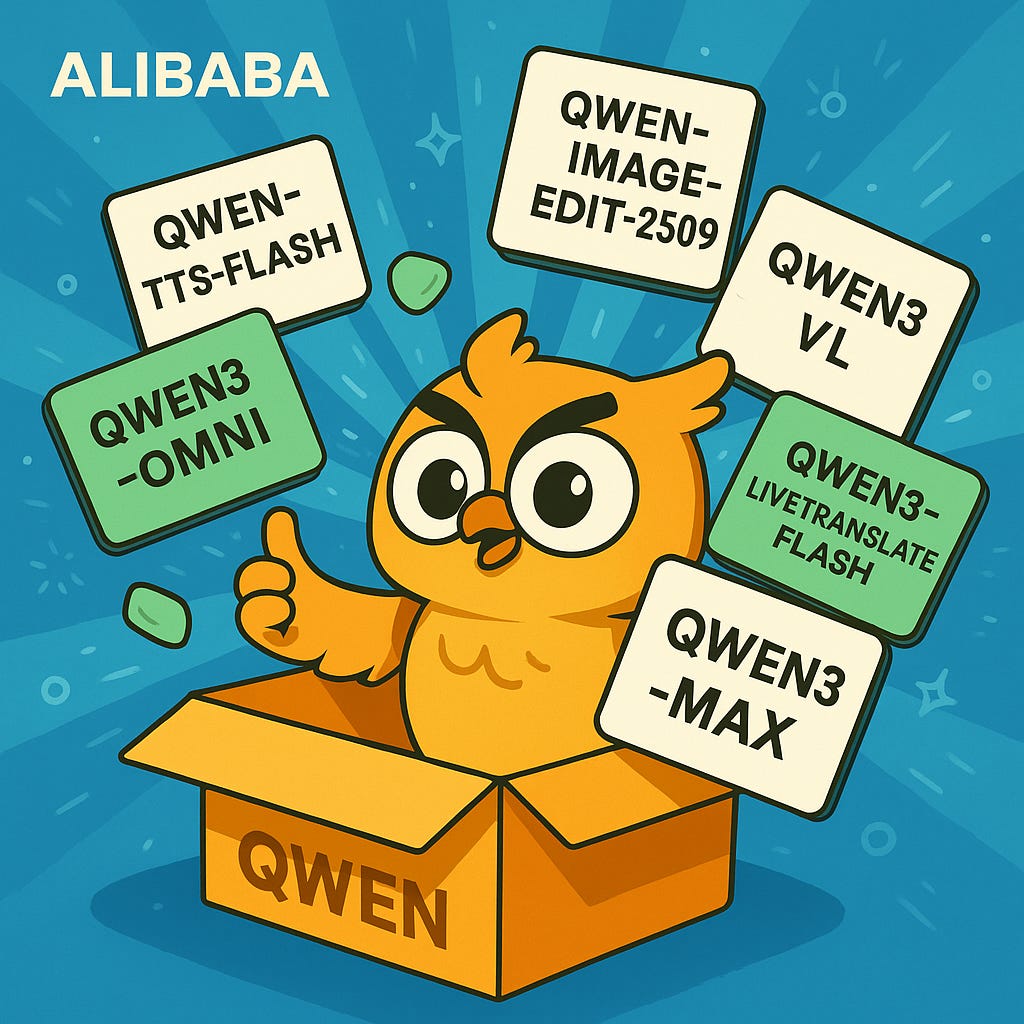

📝 Editorial: Qwen’s One‑Week Gauntlet

The AI world has never seen a release spree like this. Some labs launch models; Qwen launched a supply chain. In the span of a few days, it dropped components that cover the whole arc of modern AI products (perception, speech, translation, editing, safety, and frontier reasoning), like a company speed‑running a platform roadmap. The vibe is less “press cycle” and more “assembly line”: you can build a complete experience from one family without waiting for third‑party glue or quarter‑end reveals.

The week’s cast reads like a storyboard. It opens with Qwen3‑TTS‑Flash, a fast, multi‑timbre text‑to‑speech engine built for real‑time UX: voices that sound alive rather than dutiful. Then Qwen3‑Omni arrives as the natively end‑to‑end core, a single streaming interface for text, image, audio, and video; alongside it, Qwen‑Image‑Edit‑2509 brings identity‑preserving, high‑consistency edits that turn “can you tweak this?” into “already done.” The sightlines sharpen with Qwen3‑VL, an upgraded vision‑language line that pairs perception with deliberate reasoning. To bridge rooms and languages, Qwen3‑LiveTranslate‑Flash offers low‑latency interpretation tuned for meetings, classrooms, and streams, all places where patience is not a feature. Guardrails keep pace via Qwen3Guard, a safety layer that classifies and moderates in real time so the rest of the stack can sprint without tripping legal tripwires. And the capstone, Qwen3‑Max, sets the ceiling height: a frontier LM aimed at agents, coding, and long context.

In a single cluster you get input, understanding, transformation, speech, translation, safeguards, and frontier reasoning (see → think → edit → speak → translate → protect → plan), delivered as one family. That’s not a menu; that’s a product skeleton, ready for teams to skin with brand, data, and workflow.

The impressive part is the choreography.
Shipping Omni and VL alongside safety (Guard) and creator tools (TTS, Image‑Edit), then capping the set with a frontier tier (Max), collapses the distance from demo to deployment. Builders don’t need to wait for a missing modality or a pending guardrail; the pieces arrive together and are designed to click. In a market where headline scores converge, time‑to‑composition becomes the moat: how fast can you assemble something trustworthy and delightful from compatible parts?

Read between the releases and you can see the process change: fewer heroics, more pipelines. When base models, multimodal branches, and safety layers iterate on a shared substrate, you get compounding returns: data re‑use, unified APIs, common post‑training recipes. The practical outcome is fewer brittle integrations, faster experiments, and that mildly intoxicating feeling that your roadmap just got shorter. (Do hydrate.)

There are sensible caveats because we’re adults. Pace must meet proof: latency, cost curves, eval transparency, and deployment ergonomics will decide which of these models become habits rather than headlines. Safety has to keep up with Omni‑style expressivity, which is a marathon, not a patch. And the frontier tier earns its keep only if it plays nicely with smaller, cheaper siblings in real workloads. Still, as strategies go, this one is refreshingly legible: build the orchestra, then hand developers the baton.

Call it audacity by design. In four days, Qwen didn’t just add SKUs; it rehearsed a muscle memory for platform releases. If that muscle holds, expect a new tempo for the industry: fewer solitary crescendos, more coordinated symphonies. If you’re building, the practical takeaway is simple: prototype with the family now. The conveyor belt is moving; it would be a shame to stand still.
🔎 AI Research

Synthetic Bootstrapped Pretraining (SBP)
AI Lab: Apple & Stanford
Summary: Introduces a three-step pretraining recipe that learns inter-document relations and synthesizes a large corpus, yielding consistent gains over repetition baselines when training a 3B model up to 1T tokens. SBP captures a large fraction of an oracle’s improvement (≈42–49% across scales) and admits a Bayesian view where the synthesizer infers latent concepts to generate related documents.

In-Context Fine-Tuning for Time-Series Foundation Models
AI Lab: Google Research (with UT Austin)
Summary: Proposes an “in-context fine-tuning” approach that adapts a time-series foundation model (built on TimesFM) to consume multiple related series in the prompt at inference, enabling few-shot-style domain adaptation without task-specific training. On standard benchmarks, this method outperforms supervised deep models, statistical baselines, and other time-series FMs, and even matches or slightly exceeds explicit per-dataset fine-tuning, with reported gains up to ~25%.

SWE-Bench Pro: Can AI Agents Solve Long-Horizon Software Engineering Tasks?
AI Lab: Scale AI
Summary: Presents a contamination-resistant, enterprise-grade benchmark of 1,865 multi-file, long-horizon issues (avg ~107 LOC across ~4 files) from 41 repos, including private commercial codebases. Frontier agents score <25% Pass@1 (e.g., GPT-5 23.3%), with detailed failure-mode analysis highlighting semantic, tool-use, and context-management gaps.

Qwen3-Omni Technical Report
AI Lab: Qwen
Summary: Unifies text-vision-audio-video in a Thinker–Talker MoE architecture that matches same-size unimodal performance while achieving open-source SOTA on 32/36 audio(-visual) benchmarks and strong cross-modal reasoning. It supports 119 text languages, 19 speech-input and 10 speech-output languages, handles ~40-minute audio, and delivers ~234 ms first-packet latency via streaming multi-codebook speech generation.
Towards an AI-Augmented Textbook
AI Lab: Google (LearnLM Team)
Summary: Proposes “Learn Your Way,” an AI pipeline that personalizes textbook content by grade level and interests, then transforms it into multiple representations (immersive text, narrated slides, audio lessons, mind maps) with embedded formative assessment. In a randomized study of 60 students, the system significantly improved both immediate and three-day retention scores versus a standard digital reader.

Code World Model (CWM): An Open-Weights LLM for Research on Code Generation with World Models
AI Lab: Meta FAIR CodeGen Team
Summary: Introduces CWM, a 32B-parameter dense decoder-only LLM mid-trained on Python execution traces and agentic Docker environments to embed executable semantics into code generation. Beyond achieving strong results on SWE-bench Verified (65.8% with test-time scaling), LiveCodeBench, and math tasks, CWM pioneers “code world modeling” as a research testbed for reasoning, debugging, and planning in computational environments.

🤖 AI Tech Releases

Chrome DevTools MCP
Google released Chrome DevTools MCP, which allows agents to control a Chrome web browser.

ChatGPT Pulse
OpenAI released ChatGPT Pulse, a new experience for proactive updates.

📡 AI Radar

