The Sequence Radar: From Model to Team: Several Models are Better than One: Sakana’s Blueprint for Collective AI

Sakana's new algorithm combines different models seamlessly at inference time.

Next Week in The Sequence:

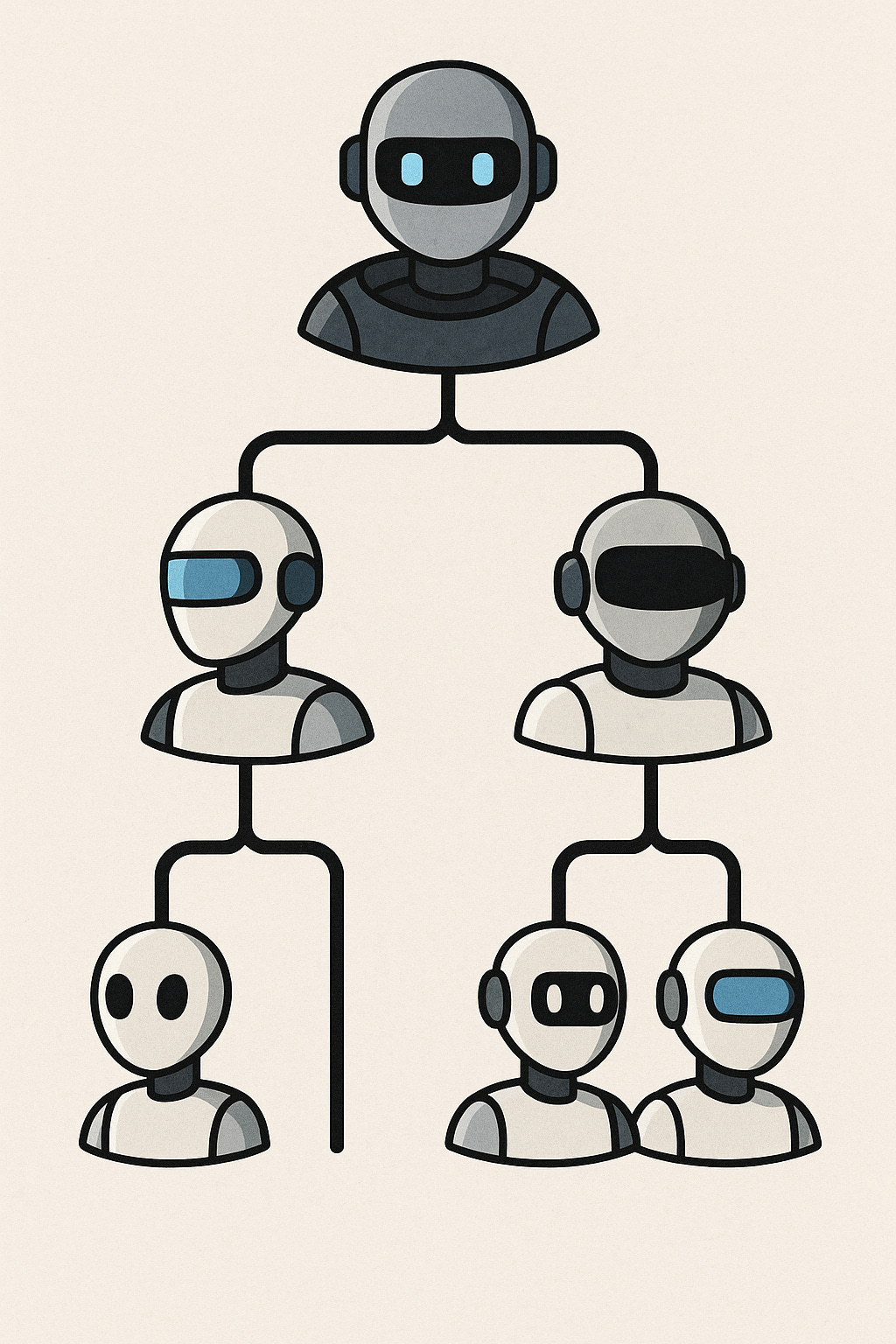

📝 Editorial: Several Models are Better than One: Sakana’s Blueprint for Collective AI

Sakana AI has rapidly emerged as one of my favorite AI research labs and one of the most innovative in the current landscape. Founded by former Google Brain and DeepMind researchers, the lab has already made headlines with its work on evolutionary methods, model orchestration, and adaptive reasoning. Its latest release is an inference-time framework that pushes beyond traditional single-model reasoning: Adaptive Branching Monte Carlo Tree Search, or AB-MCTS.

At its core, AB-MCTS reflects a philosophical shift in how we think about large language model (LLM) reasoning. Rather than treating generation as a flat, linear process, Sakana’s approach reframes inference as a strategic exploration of a search tree, navigating between depth (refinement of existing ideas), width (generation of new hypotheses), and even model selection itself. The result is a system that begins to resemble collaborative, human-like thinking.

AB-MCTS is an inference-time algorithm grounded in the principles of Monte Carlo Tree Search, a method historically associated with planning in board games like Go. Sakana adapts this mechanism to textual reasoning by using Thompson Sampling to decide whether to continue developing a promising response or branch into an unexplored avenue. This adaptive process means the system is no longer bound to fixed temperature sampling or deterministic prompting. Instead, it engages in a kind of probabilistic deliberation, allocating its computational resources in real time to the parts of the solution space that look most promising.

But the real breakthrough lies in the extension: Multi-LLM AB-MCTS.
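As a rough illustration of the Thompson Sampling step in the single-model case described above, the toy sketch below treats "go wider" and "go deeper" as two arms with Beta posteriors. It is not Sakana's implementation and does not use the TreeQuest API: the names `BetaArm`, `choose_action`, and `run_search`, the Beta-Bernoulli model, and the simulated payoff probabilities are all assumptions made for illustration, and the LLM call is replaced by a random draw.

```python
import random
from dataclasses import dataclass
from typing import Dict


@dataclass
class BetaArm:
    """Beta posterior over how often an action pays off (hypothetical helper)."""
    successes: float = 1.0  # Beta alpha; 1.0 gives a uniform prior
    failures: float = 1.0   # Beta beta; 1.0 gives a uniform prior

    def sample(self, rng: random.Random) -> float:
        return rng.betavariate(self.successes, self.failures)

    def update(self, reward: float) -> None:
        # reward is assumed to lie in [0, 1]; fractions count as soft successes
        self.successes += reward
        self.failures += 1.0 - reward


def choose_action(arms: Dict[str, BetaArm], rng: random.Random) -> str:
    """Thompson Sampling: draw once from each posterior, act on the best draw."""
    draws = {name: arm.sample(rng) for name, arm in arms.items()}
    return max(draws, key=draws.get)


def run_search(true_payoff: Dict[str, float], budget: int, seed: int = 0) -> Dict[str, int]:
    """Spend `budget` simulated calls and count how often each action is chosen."""
    rng = random.Random(seed)
    arms = {name: BetaArm() for name in true_payoff}
    counts = {name: 0 for name in true_payoff}
    for _ in range(budget):
        action = choose_action(arms, rng)
        # Stand-in for calling an LLM and scoring its answer (assumption).
        reward = 1.0 if rng.random() < true_payoff[action] else 0.0
        arms[action].update(reward)
        counts[action] += 1
    return counts


if __name__ == "__main__":
    # Hypothetical payoffs: refining existing answers works more often here.
    print(run_search({"wider": 0.3, "deeper": 0.6}, budget=200))
```

Because each decision is a random draw from the current posteriors, the weaker action is still tried occasionally, which is what keeps the compute allocation adaptive rather than fixed in advance.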
In this multi-model paradigm, several LLMs (including frontier models like OpenAI’s o4-mini, Google DeepMind’s Gemini 2.5 Pro, and DeepSeek’s R1) are orchestrated into a dynamic ensemble. At each point in the reasoning tree, the system decides not only what to do next (go deeper or go wider) but also who should do it. This introduces a novel third axis to inference: model routing. Initially unbiased, the system learns to favor models that historically perform better on certain subtasks, effectively turning a collection of models into a coherent team.

The implications for real-world AI systems are profound. By decoupling capability from a single monolithic model, AB-MCTS provides a path to compositional reliability. Enterprises can now imagine deploying systems where reasoning chains are distributed across specialized models, dynamically assigned at runtime based on contextual performance. This not only improves robustness but also opens up opportunities for cost optimization, interpretability, and safety. Moreover, Sakana has open-sourced the framework, dubbed TreeQuest, under Apache 2.0, inviting both researchers and practitioners to integrate it into their pipelines.

What Sakana has achieved with AB-MCTS is a blueprint for how we might scale intelligence not just by increasing parameters or data, but by scaling the search process itself. It borrows from the playbooks of both biological evolution and algorithmic planning, combining breadth, depth, and diversity in a structured, learnable way. In doing so, it reframes LLMs as components of larger reasoning ecosystems: systems that can adapt, deliberate, and even self-correct. The age of collective intelligence at inference time may just be getting started.

🔎 AI Research

Title: SciArena: An Open Evaluation Platform for Foundation Models in Scientific Literature Tasks
AI Lab: Allen Institute for AI & Yale University

Title: Does Math Reasoning Improve General LLM Capabilities? Understanding Transferability of LLM Reasoning
AI Lab: Carnegie Mellon University, University of Washington, University of Pennsylvania, The Hong Kong Polytechnic University

Title: Thinking Beyond Tokens: From Brain-Inspired Intelligence to Cognitive Foundations for Artificial General Intelligence and its Societal Impact
AI Lab: Multi-institutional collaboration including University of Central Florida, Cornell, Vector Institute, Meta, Amazon, Oxford, and others

Title: Zero-shot Antibody Design in a 24-well Plate
AI Lab: Chai Discovery Team

Title: Wider or Deeper? Scaling LLM Inference-Time Compute with Adaptive Branching Tree Search
AI Lab: Sakana AI

Title: Fast and Simplex: 2-Simplicial Attention in Triton
AI Lab: Meta AI and University of Texas at Austin

🤖 AI Tech Releases

Ernie 4.5
Baidu released the newest version of its marquee Ernie model.

DeepSWE
Together AI open sourced DeepSWE, a new coding agent based on Qwen3.

🛠 AI in Production

Agents Auditing at Salesforce
Salesforce discusses the architecture powering the Agentforce auditing capabilities.

📡 AI Radar
