In July 2025, Kimi K2 from Moonshot AI was the most talked-about massive Mixture-of-Experts (MoE) model, with a special focus on Agentic Intelligence. When we compared it to other Chinese open models back then, we noted: pick Kimi K2 if you want a well-rounded, strong open base with agentic and long-context strength. Among DeepSeek-R1, Qwen3, and GLM-4.5, it was the most versatile.

And now, in November 2025, we have Kimi K2 Thinking, the newest and most capable version of Moonshot’s open-source thinking model. It’s a reasoning agent that solves problems step by step and uses a wide range of external tools, like a Python interpreter or web search. Remarkably, it can make 200–300 tool calls in sequence (!) and outperforms top models like GPT-5 and Claude Sonnet 4.5 (Thinking) on many benchmarks.

Kimi K2 Thinking continues Moonshot’s global vision of building the strongest agentic models with lossless long context. So let’s explore how Moonshot defines this strategy, and how K2 Thinking helps push the company one step ahead of its competitors on the path toward true agentic intelligence.

In today’s episode, we will cover:

- Moonshot AI’s global strategy
- What is Kimi K2 Thinking?
- Tech spec
- What about agentic performance
- Not without limitations
- Early cases of implementation
- Conclusion
- Sources and further reading

Moonshot AI’s Global Strategy

Before we discuss what is new in the Kimi K2 Thinking model, let’s look back at Moonshot AI’s global idea and see how the previous release, Kimi K2, and the fresh Kimi K2 Thinking fit into the strategy.

Moonshot AI’s strategy centers on lossless long context. The company aims to create models that can process and recall massive amounts of text without losing information or performance. It’s a path to making personalization and contextual understanding possible without fine-tuning, using a model’s memory of entire conversations.

Another main focus of Moonshot AI is (obviously) AGI. Yang Zhilin, the founder of Moonshot, outlined the roadmap toward AGI in three layers:

- Layer 1: Scaling laws and next-token prediction. It’s the current industry standard.
- Layer 2: Overcoming data and representation bottlenecks, enabling self-evolving systems that learn continuously.
- Layer 3: Advanced capabilities, such as long-context reasoning, multi-step planning, multimodal understanding, and agentic behavior. Moonshot sees its opportunity to lead the field at this third layer.

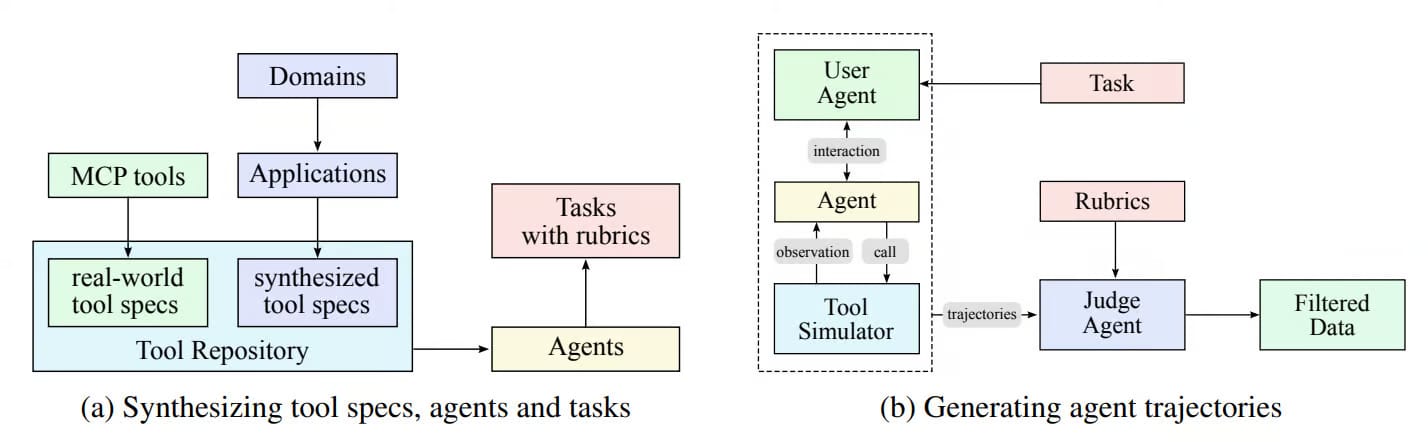

The company is moving decisively beyond static models toward AI agents that can plan, reason, use tools, and critique themselves, which it also calls “Agentic Intelligence.”

In July 2025, Kimi K2 became the cornerstone of Moonshot’s long-context and agentic AI vision, and the first model to truly reflect the “Agentic Intelligence” concept. It is a massive 1.04 trillion-parameter Mixture-of-Experts (MoE) model trained on 15.5 trillion tokens that integrates multiple innovations:

- The MuonClip optimizer for stable large-scale training.
- A Self-Critique Rubric Reward system for self-evaluation on open-ended tasks.
- A synthetic data pipeline that rephrases and diversifies knowledge sources.

For its agentic abilities, Kimi K2 was trained on data from a large agentic data pipeline. The Kimi team built a system with 20,000 virtual tools and thousands of agents that solve tasks with those tools and generate detailed agent trajectories.

Image Credit: Kimi K2 original paper

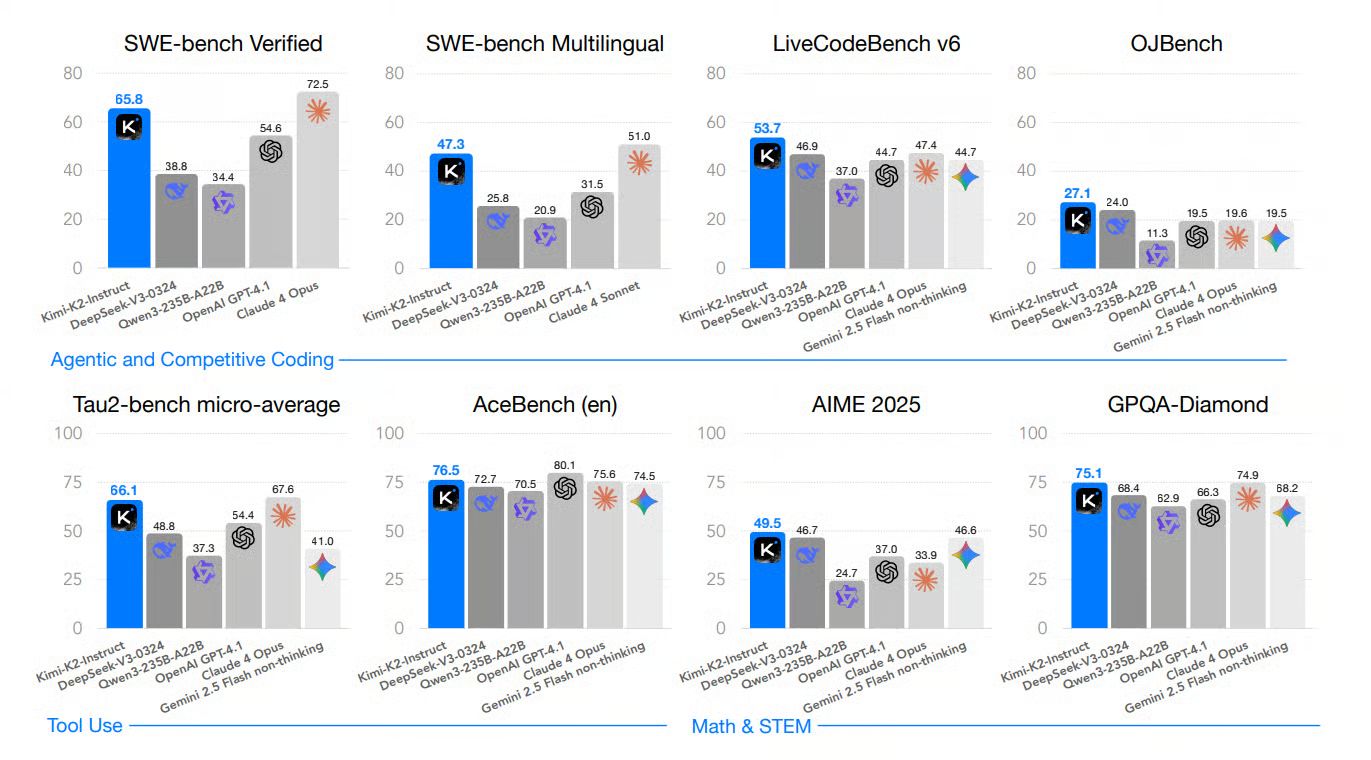

This is how Kimi K2 anchored Moonshot’s “lossless long-context” strategy and became a foundation for the company’s AGI Layer 3. At its release in July, it was the most capable open-weight LLM to date, rivaling proprietary frontier models and setting new standards in real-world agentic applications.

Image Credit: Kimi K2 original paper

But now it’s time for Moonshot’s newest model to shine: Kimi K2 Thinking, built on Kimi K2’s solid agentic foundation with mixed large-scale training, improved tool use, and self-evaluation.

Kimi K2 Thinking is the company’s next step toward true agentic intelligence, extending Kimi K2 into a reasoning and tool-using “thinking agent” that reasons, plans, and acts autonomously over hundreds of steps. So let’s unpack →

What is Kimi K2 Thinking?

Kimi K2 Thinking is an open-source “thinking” model, essentially an AI agent that can reason step by step and use tools like a calculator, code interpreter, or web browser. It can make 200–300 tool calls in sequence while staying consistent, and it handles long reasoning chains and complex tasks without human help.

As an agentic model, Kimi K2 Thinking plans, checks its own work, and refines answers over hundreds of reasoning steps. It switches smoothly between steps like “thinking → searching → reading → coding → thinking again”. This helps it plan long-term, adapt to new information, and build coherent answers.

Especially remarkable is how, through Kimi K2 Thinking, Moonshot AI demonstrates its approach to test-time scaling: it improves intelligence by expanding both the amount of thinking (tokens) and the number of actions (tool calls) during inference.

For example, K2 Thinking’s Heavy Mode runs eight reasoning paths in parallel and merges them for more reliable results, similar to GPT-5 Pro’s configuration.

You can try Kimi K2 Thinking now on kimi.com in a lighter chat mode that trades depth for speed, using fewer tools and shorter reasoning chains. The full agent mode, with autonomous tool use and full multi-step reasoning, will be available soon. The model is also accessible via the Kimi K2 Thinking API. Both the code and model weights are released under a Modified MIT License, allowing open use and research development, which is another cool part about Kimi K2 Thinking.
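To make the test-time scaling idea above more concrete, here is a minimal toy sketch in Python. It is not Moonshot's implementation. The names `run_agent`, `heavy_mode`, `careful_policy`, and `hasty_policy` are hypothetical, the "model" is a hand-written function rather than a real LLM, and merging by majority vote is our own stand-in, since the description above does not say how Heavy Mode combines its eight paths. The sketch only illustrates the two knobs: a budget of sequential tool calls per path, and several paths run in parallel and then merged.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Tuple

# A "policy" stands in for the model (an assumption for illustration).
# Given the history so far it returns ("tool", tool_name, argument)
# or ("answer", final_text, "").
Step = Tuple[str, str, str]
Policy = Callable[[List[str]], Step]
Tools = Dict[str, Callable[[str], str]]


def run_agent(policy: Policy, tools: Tools, task: str,
              max_tool_calls: int = 300) -> str:
    """Interleave thinking and tool calls until an answer or the budget runs out."""
    history = [f"task: {task}"]
    for _ in range(max_tool_calls):
        kind, value, arg = policy(history)
        if kind == "answer":
            return value
        result = tools[value](arg)          # act: call the chosen tool
        history.append(f"{value}({arg}) -> {result}")  # observe the result
    return "budget exhausted"


def heavy_mode(policies: List[Policy], tools: Tools, task: str) -> str:
    """Run independent paths in parallel, then merge by majority vote."""
    with ThreadPoolExecutor(max_workers=len(policies)) as pool:
        answers = list(pool.map(lambda p: run_agent(p, tools, task), policies))
    return Counter(answers).most_common(1)[0][0]


# Toy tool: adds integers written as "a+b".
TOOLS: Tools = {"add": lambda expr: str(sum(int(x) for x in expr.split("+")))}


def careful_policy(history: List[str]) -> Step:
    if len(history) == 1:                   # nothing computed yet: use the tool
        return ("tool", "add", "40+2")
    return ("answer", history[-1].split("-> ")[1], "")  # answer with tool result


def hasty_policy(history: List[str]) -> Step:
    return ("answer", "41", "")             # guesses without using any tool


# Eight paths, as in the Heavy Mode description: six careful, two hasty.
merged = heavy_mode([careful_policy] * 6 + [hasty_policy] * 2, TOOLS, "What is 40 + 2?")
print(merged)  # prints 42
```

In this toy setup the two hasty paths are outvoted by the six that actually used the tool, which is the intuition behind spending more compute at inference time: more actions per path, and more paths per question.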
But before you start playing with it, let’s look at what’s inside K2 Thinking. It’s quite impressive →

Tech Spec
